BLOG POST

AI Daily

AI ‘Workslop’ Is Killing Productivity and Making Workers Miserable

AI slop is taking over workplaces. Workers said that they thought of their colleagues who filed low-quality AI work as "less creative, capable, and reliable than they did before receiving the output."

Florida Sues Hentai Site and High-Risk Payment Processor for Not Verifying Ages

Florida's attorney general claims Nutaku, Spicevids, and Segpay are in violation of the state's age verification law.

CBP Flew Drones to Help ICE 50 Times in Last Year

The drone flight log data, which stretches from March 2024 to March 2025, shows CBP flying its drones to support ICE and other agencies. CBP maintains multiple Predator drones and flew them over the recent anti-ICE protests in Los Angeles.

Steam Hosted Malware Game that Stole $32,000 from a Cancer Patient Live on Stream

Scammers stole the crypto from a Latvian streamer battling cancer and the wider security community rallied to make him whole.

We’re Suing ICE for Its $2 Million Spyware Contract

404 Media has filed a lawsuit against ICE for access to its contract with Paragon, a company that sells powerful spyware for breaking into phones and accessing encrypted messaging apps.

AI-Generated YouTube Channel Uploaded Nothing But Videos of Women Being Shot

YouTube removed a channel that posted nothing but graphic Veo-generated videos of women being shot after 404 Media reached out for comment.

How Creatio Is Redefining CRM for Financial Institutions

Two New England banks switched from Salesforce to Creatio, highlighting what executives say is the importance of personalized relationships between vendor and customer.

Nvidia Will Invest $100B in OpenAI

The deal highlights Nvidia's dominant financial position but also raises questions about timelines and how partners of those vendors might react.

Public trust deficit is a major hurdle for AI growth

While politicians tout AI’s promise of growth and efficiency, a new report reveals a public trust deficit in the technology. Many are deeply sceptical, creating a major headache for governments’ plans. A deep dive by the Tony Blair Institute for Global Change (TBI) and Ipsos has put some hard numbers on this feeling of unease. […]

The post Public trust deficit is a major hurdle for AI growth appeared first on AI News.

How BMC can be the ‘orchestrator of orchestrators’ for enterprise agentic AI

Agentic AI is, in the opinion of McKinsey, the way to ‘break out of the gen AI paradox.’ Nearly four in five companies are using generative AI, according to the consultancy giant’s research, but comparatively few are getting any bottom-line value from it. The answer to the question of value, therefore, may be in orchestration. […]

The post How BMC can be the ‘orchestrator of orchestrators’ for enterprise agentic AI appeared first on AI News.

Karnataka CM seeks Wipro’s support to cut ORR congestion by 30%

“Your support will go a long way in easing bottlenecks, enhancing commuter experience, and contributing to a more efficient and livable Bengaluru,” CM wrote.

The post Karnataka CM seeks Wipro’s support to cut ORR congestion by 30% appeared first on Analytics India Magazine.

TikTok’s US Future Shaped by Trump, Powered by Oracle and Murdoch

“Oracle will operate, retrain and continuously monitor the US algorithm to ensure content is free from improper manipulation or surveillance.”

The post TikTok’s US Future Shaped by Trump, Powered by Oracle and Murdoch appeared first on Analytics India Magazine.

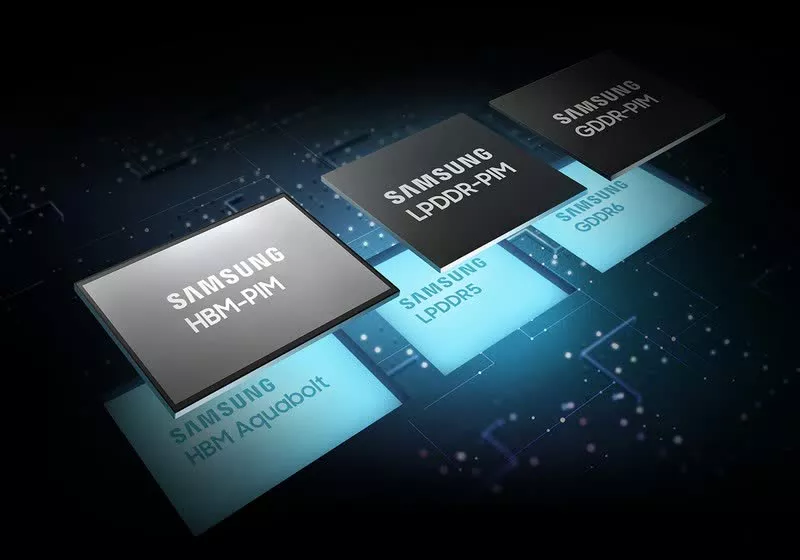

TCS Expands AI Services with NVIDIA Partnership, Deepens Vodafone Idea Ties

The NVIDIA partnership centres on advancing global retail, whereas the collaboration with the telecom company aims to enhance customer experience.

The post TCS Expands AI Services with NVIDIA Partnership, Deepens Vodafone Idea Ties appeared first on Analytics India Magazine.

The $100k H-1B Gamble for Big Tech

Firms may either go the remote-work route or simply pay up when they really want an employee in the US.

The post The $100k H-1B Gamble for Big Tech appeared first on Analytics India Magazine.

Satellites That ‘Think’ Could Change How India Responds to Disasters

SkyServe is building onboard processing for satellites, shortening the time between capturing an image and turning it into usable insights.

The post Satellites That ‘Think’ Could Change How India Responds to Disasters appeared first on Analytics India Magazine.

Developer Experience: The Unsung Hero Behind GenAI and Agentic AI Acceleration

DevEx is emerging as the invisible force that accelerates innovation, reduces friction and translates experimentation into enterprise-grade outcomes.

The post Developer Experience: The Unsung Hero Behind GenAI and Agentic AI Acceleration appeared first on Analytics India Magazine.

Indian IT Majors Cut Visa Petitions by 44% in Four Years

A steep new US visa fee could reshape the global tech talent landscape while also bolstering India’s tech hubs.

The post Indian IT Majors Cut Visa Petitions by 44% in Four Years appeared first on Analytics India Magazine.

Healthtech Startup Zealthix Secures $1.1 Mn in Seed Funding Led by Unicorn India Ventures

The funding will fuel Zealthix’s expansion and technology enhancements aimed at digitising India’s healthcare ecosystem.

The post Healthtech Startup Zealthix Secures $1.1 Mn in Seed Funding Led by Unicorn India Ventures appeared first on Analytics India Magazine.

Cloudflare Pledges 1,111 Internship Spots for 2026

Beginning January 2026, select startups will be able to work from Cloudflare offices on certain days to collaborate with teams and peers.

The post Cloudflare Pledges 1,111 Internship Spots for 2026 appeared first on Analytics India Magazine.

Agnikul Cosmos Opens India’s First Large-Format Rocket 3D Printing Hub

This Chennai startup aims to speed up engine production and strengthen India’s private space ecosystem.

The post Agnikul Cosmos Opens India’s First Large-Format Rocket 3D Printing Hub appeared first on Analytics India Magazine.

OpenTelemetry Is Ageing Like Fine Wine

Enterprises and AI frameworks are embracing OpenTelemetry to standardise data, cut integration costs, and build trust in AI systems.

The post OpenTelemetry Is Ageing Like Fine Wine appeared first on Analytics India Magazine.

IT Minister Ashwini Vaishnaw Switches to Zoho, Backs PM Modi’s Swadeshi Push

“I urge all to join PM Shri @narendramodi Ji’s call for Swadeshi by adopting indigenous products & services,” Vaishnaw posted on X.

The post IT Minister Ashwini Vaishnaw Switches to Zoho, Backs PM Modi’s Swadeshi Push appeared first on Analytics India Magazine.

OpenAI, NVIDIA Sign $100 Billion Deal to Deploy 10 GW of AI Systems

The first gigawatt of capacity is scheduled for deployment in the second half of 2026 on NVIDIA’s Vera Rubin platform.

The post OpenAI, NVIDIA Sign $100 Billion Deal to Deploy 10 GW of AI Systems appeared first on Analytics India Magazine.

How BharatGen Took the Biggest Slice of IndiaAI’s GPU Cake

BharatGen has secured 13,640 H100 GPUs and ₹988.6 crore in funding to pursue India’s first trillion-parameter AI model initiative.

The post How BharatGen Took the Biggest Slice of IndiaAI’s GPU Cake appeared first on Analytics India Magazine.

H-1B Visa Fee Hike Could Hit Remittances, Telangana Warns

The state seeks exemptions to protect IT professionals and families dependent on overseas income.

The post H-1B Visa Fee Hike Could Hit Remittances, Telangana Warns appeared first on Analytics India Magazine.

India’s GCCs Could Add $200 Billion by 2030, Says CII

At present, nearly 95% of India’s 1,800 GCCs are concentrated in six tier-1 cities.

The post India’s GCCs Could Add $200 Billion by 2030, Says CII appeared first on Analytics India Magazine.

H-1B Shockwave: What a $100,000 Visa Fee Means for Indian AI Startups

“The proposed fee could act as a catalyst in strengthening India’s AI talent ecosystem.”

The post H-1B Shockwave: What a $100,000 Visa Fee Means for Indian AI Startups appeared first on Analytics India Magazine.

The Generation That Refused to Log Off in Nepal

“The backlash made it evident that Nepali citizens do not tolerate digital authoritarianism disguised as governance.”

The post The Generation That Refused to Log Off in Nepal appeared first on Analytics India Magazine.

Assessli’s AI-led Behavioural Model Could Eclipse Language Models

The Kolkata-based company’s patented methodology is in use across sectors like education, healthcare, and financial services.

The post Assessli’s AI-led Behavioural Model Could Eclipse Language Models appeared first on Analytics India Magazine.

Trump’s H-1B Fee Alarms Industry, But India Sees GCC Opportunity

“For India, this could actually mean more jobs, more investment, and more GCCs”

The post Trump’s H-1B Fee Alarms Industry, But India Sees GCC Opportunity appeared first on Analytics India Magazine.

Indian IT Giants vs Startups: Who Will Script India’s AI Enterprise Story?

At Cypher 2025, leaders debated India’s enterprise AI future, concluding that it won’t be giants versus startups, but rather collaborative ecosystems.

The post Indian IT Giants vs Startups: Who Will Script India’s AI Enterprise Story? appeared first on Analytics India Magazine.

How Confluent Helped Notion Scale AI and Productivity for 100M+ Users

Notion, as a top productivity tool, utilised Confluent’s solutions to handle its operations efficiently.

The post How Confluent Helped Notion Scale AI and Productivity for 100M+ Users appeared first on Analytics India Magazine.

Should India Build Its Own AI Foundational Models?

At Cypher 2025, industry leaders debate whether India should invest in building its own AI foundational models or adapt global ones.

The post Should India Build Its Own AI Foundational Models? appeared first on Analytics India Magazine.

Broadcom’s prohibitive VMware prices create a learning “barrier,” IT pro says

Public schools ran to VMware during the pandemic. Now they're running away.

Supreme Court lets Trump fire FTC Democrat despite 90-year-old precedent

Kagan dissent: Majority is giving Trump "full control" of independent agencies.

Google Play is getting a Gemini-powered AI Sidekick to help you in games

Here comes another screen overlay.

EU investigates Apple, Google, and Microsoft over handling of online scams

EU looks at Big Tech groups over handling of fake apps and search results.

Volvo says it has big plans for South Carolina factory

The Ridgeville plant will add a new hybrid by 2030 in addition to next year's XC60.

US intel officials “concerned” China will soon master reusable launch

"They have to have on-orbit refueling because they don’t access space as frequently as we do."

How to fight censorship, one Disney+ cancellation at a time

Thuggish government behavior is not stopped by capitulation.

NASA names 24th astronaut class, including prior SpaceX crew member

NASA's 24th astronaut class since selecting the Mercury astronauts in 1959.

Anti-vaccine groups melt down over RFK Jr. linking autism to Tylenol

"THIS WAS NOT CAUSED BY TYLENOL" Kennedy's anti-vaccine group retweeted.

iFixit tears down the iPhone Air, finds that it’s mostly battery

Design that puts the logic board at the top helps stave off a second Bendgate.

Disney reinstates Jimmy Kimmel after backlash over capitulation to FCC

Disney says "thoughtful conversations with Jimmy" led to show's return.

DOJ aims to break up Google’s ad business as antitrust case resumes

The remedy phase of Google's adtech antitrust case begins.

Rand Paul: FCC chair had “no business” intervening in ABC/Kimmel controversy

"Absolutely inappropriate. Brendan Carr has got no business weighing in on this."

OpenAI and Nvidia’s $100B AI plan will require power equal to 10 nuclear reactors

"This is a giant project," Nvidia CEO said of new 10-gigawatt AI infrastructure deal.

DeepMind AI safety report explores the perils of “misaligned” AI

DeepMind releases version 3.0 of its AI Frontier Safety Framework with new tips to stop bad bots.

Here’s how potent Atomic credential stealer is finding its way onto Macs

LastPass warns it's one of the latest to see its well-known brand impersonated.

Three crashes in the first day? Tesla’s robotaxi test in Austin.

Tesla's crash rate is orders of magnitude worse than Waymo's.

Our fave Star Wars duo is back in Mandalorian and Grogu teaser

There is almost no dialogue and little hint of the plot, but the visuals should delight fans.

F1 in Azerbaijan: This sport is my red flag

Baku is a mashup of Monaco and Monza, 90 feet below sea level.

What climate targets? Top fossil fuel producing nations keep boosting output

Top producers are planning to mine and drill even more of the fuels in 2030.

The US-UK tech prosperity deal carries promise but also peril for the general public

The deliberate alignment of AI systems with the values of corporations and individuals could sour the investment.

Air quality analysis reveals minimal changes after xAI data center opens in pollution-burdened Memphis neighborhood

Analysis of the air quality data available for southwest Memphis finds that pollution has long been quite bad, but the turbines powering an xAI data center have not made it much worse.

What happens when AI comes to the cotton fields

AI can help farmers be more effective and sustainable, but its use varies from state to state. A project in Georgia aims to bring the technology to the state’s cotton farmers.

AI and credit: How can we keep machines from reproducing social biases?

Can we move from algorithmic discrimination to inclusive finance?

AI use by UK justice system risks papering over the cracks caused by years of underfunding

The justice system is suffering from underfunding and AI won’t solve all the problems.

How US-UK tech deal could yield significant benefits for the British public – expert Q&A

The £150bn package could yield real benefits in public health, as well as other areas of technology.

How users can make their AI companions feel real – from picking personality traits to creating fan art

The strong bonds that users are forming with their AI chatbots rest on the human imagination at work.

AI ‘carries risks’ but will help tackle global heating, says UN’s climate chief

Simon Stiell insists it is vital governments regulate the technology to blunt its dangerous edges

Harnessing artificial intelligence will help the world to tackle the climate crisis, but governments must step in to regulate the technology, the UN’s climate chief has said.

AI is being used to make energy systems more efficient, and to develop tools to reduce carbon from industrial processes. The UN is also using AI as an aid to climate diplomacy.

‘Tentacles squelching wetly’: the human subtitle writers under threat from AI

Artificial intelligence is making steady advances into subtitling but, say its practitioners, it’s a vital service that needs a human to make it work

Is artificial intelligence going to destroy the SDH [subtitles for the deaf and hard of hearing] industry? It’s a valid question because, while SDH is the default subtitle format on most platforms, the humans behind it – as with all creative industries – are being increasingly devalued in the age of AI. “SDH is an art, and people in the industry have no idea. They think it’s just a transcription,” says Max Deryagin, chair of Subtle, a non-profit association of freelance subtitlers and translators.

The thinking is that AI should simplify the process of creating subtitles, but that is way off the mark, says Subtle committee member Meredith Cannella. “There’s an assumption that we now have to do less work because of AI tools. But I’ve been doing this now for about 14-15 years, and there hasn’t been much of a difference in how long it takes me to complete projects over the last five or six years.”

Nvidia to invest $100bn in OpenAI, bringing the two AI firms together

Deal will involve two transactions – OpenAI will pay Nvidia for chips, and the chipmaker will invest in the AI start-up

Nvidia, the chipmaking company, will invest up to $100bn in OpenAI and provide it with data center chips, the companies said on Monday, a tie-up between two of the highest-profile leaders in the global artificial intelligence race.

The deal, which will see Nvidia start delivering chips as soon as late 2026, will involve two separate but intertwined transactions, according to a person close to OpenAI. The startup will pay Nvidia in cash for chips, and Nvidia will invest in OpenAI for non-controlling shares, the person said.

If Anyone Builds it, Everyone Dies review – how AI could kill us all

If machines become superintelligent we’re toast, say Eliezer Yudkowsky and Nate Soares. Should we believe them?

What if I told you I could stop you worrying about climate change, and all you had to do was read one book? Great, you’d say, until I mentioned that the reason you’d stop worrying was because the book says our species only has a few years before it’s wiped out by superintelligent AI anyway.

We don’t know what form this extinction will take exactly – perhaps an energy-hungry AI will let the millions of fusion power stations it has built run hot, boiling the oceans. Maybe it will want to reconfigure the atoms in our bodies into something more useful. There are many possibilities, almost all of them bad, say Eliezer Yudkowsky and Nate Soares in If Anyone Builds It, Everyone Dies, and who knows which will come true. But just as you can predict that an ice cube dropped into hot water will melt without knowing where any of its individual molecules will end up, you can be sure an AI that’s smarter than a human being will kill us all, somehow.

More Britons view AI as economic risk than opportunity, Tony Blair thinktank finds

TBI says poll data threatens Keir Starmer’s ambition for UK to become artificial intelligence ‘superpower’

Nearly twice as many Britons view artificial intelligence as a risk to the economy as regard it as an opportunity, according to Tony Blair’s thinktank.

The Tony Blair Institute warned that the poll findings threatened Keir Starmer’s ambition for the UK to become an AI “superpower” and urged the government to convince the public of the technology’s benefits.

Racists Are Using AI to Spread Diabolical Anti-Immigrant Slop

Welcome to the future.

The post Racists Are Using AI to Spread Diabolical Anti-Immigrant Slop appeared first on Futurism.

Users Are Saying ChatGPT Has Been Lobotomized by a Secret New Update

"I just want it to stop lying."

The post Users Are Saying ChatGPT Has Been Lobotomized by a Secret New Update appeared first on Futurism.

ChatGPT Has a Stroke When You Ask It This Specific Question

A question that should quite literally be as easy as ABC -- but isn't.

The post ChatGPT Has a Stroke When You Ask It This Specific Question appeared first on Futurism.

xAI Workers Leak Disturbing Information About Grok Users

"It actually made me sick."

The post xAI Workers Leak Disturbing Information About Grok Users appeared first on Futurism.

Hospitals Deploying Robot Programmed to Act Like Child to Comfort Pediatric Patients

"Imagine a pure emotional intelligence like WALL-E."

The post Hospitals Deploying Robot Programmed to Act Like Child to Comfort Pediatric Patients appeared first on Futurism.

Woman Asks ChatGPT for Powerball Numbers, Wins $150,000

"I’m like, ChatGPT, talk to me... Do you have numbers for me?"

The post Woman Asks ChatGPT for Powerball Numbers, Wins $150,000 appeared first on Futurism.

Using AI Increases Unethical Behavior, Study Finds

"Using AI creates a convenient moral distance between people and their actions."

The post Using AI Increases Unethical Behavior, Study Finds appeared first on Futurism.

Why One VC Thinks Quantum Is a Bigger Unlock Than AGI

Venture capitalist Alexa von Tobel is ready to bet on quantum computing—starting with hardware.

Louisiana Hands Meta a Tax Break and Power for Its Biggest Data Center

Mark Zuckerberg’s company faces backlash after rowing back promises to create between 300 and 500 new jobs to man its subsidiary’s new data center.

I Thought I Knew Silicon Valley. I Was Wrong

Tech got what it wanted by electing Trump. A year later, it looks more like a suicide pact.

Setting Up LLaVA/BakLLaVA with vLLM: Backend and API Integration

Table of contents: Setting Up LLaVA/BakLLaVA with vLLM: Backend and API Integration; Why vLLM for Multimodal Inference; The Challenges of Serving Image + Text Prompts at Scale; Why Vanilla Approaches Fall Short; How vLLM Solves Real-World Production Workloads; Configuring Your…

The post Setting Up LLaVA/BakLLaVA with vLLM: Backend and API Integration appeared first on PyImageSearch.

Nexstar Joins Sinclair in Boycotting Jimmy Kimmel’s Show

Nexstar Media Group Inc. said it would join Sinclair Inc., another large owner of ABC TV stations, in not airing Jimmy Kimmel Live! on Tuesday night.

Mamdani Draws NYC Investors to Advise Him If He Wins Mayor Race

Members of New York City’s business community are forming an advisory group in an effort to guide mayoral candidate Zohran Mamdani if he wins the election in November.

Microsoft Is Turning to the Field of Microfluidics to Cool Down AI Chips

Small amounts of fluid passed through channels etched on chips can save energy and boost AI systems.

Trump Plans H-1B Lottery Overhaul to Prioritize Higher Earners

The Trump administration is proposing major changes to the selection process for H-1B visas heavily used by the tech industry, basing allocation on skill-level required and wages offered for a position instead of the current randomized lottery.

Micron Needs a Rosy Outlook to Justify Its Soaring Stock Price

Micron Technology Inc.’s earnings after the bell Tuesday will shed light on whether the chipmaker’s high-flying stock has gotten ahead of itself after a 40% gain in September.

Google Courts Gamers With Mobile AI Gaming Agent, Leagues

Google is introducing an artificial intelligence assistant that can offer live coaching to mobile gamers, part of a larger effort to boost engagement on its Android platform.

Amazon Heads Into FTC Jury Trial Over Prime Cancellation Claims

Amazon.com Inc. and three executives are facing a federal regulator’s allegations in a Seattle court that they duped customers into signing up for the company’s Prime subscription service and then made it too hard to cancel.

Taiwan Curbs Chip Exports to South Africa in Rare Power Move

Taiwan has imposed restrictions on the export of chips to South Africa over national security concerns, taking the unusual step of using its dominance of the market to pressure a country that’s closely allied itself with China.

Biotech Real Estate Slump Has MIT’s Hometown Seeking Backups

Kendall Square, a major biopharmaceutical hub that borders the Massachusetts Institute of Technology’s campus, bills itself as the most innovative square mile on the planet. These days, there’s plenty of room for innovation in the Cambridge, Massachusetts neighborhood.

Market Skepticism Is Growing for Morgan Stanley’s Shalett

“Sometimes the bull case is the lack of a credible bear case, that’s where we are at this very minute,” says Lisa Shalett, CIO at Morgan Stanley Wealth Management, as she sees the Mag 7 stocks as “probably over-owned and a little sizzly.” (Source: Bloomberg)

Nintendo’s Switch 2 Sales Boom Fails to Ease Game Developers’ Gloom

Welcome to Tech In Depth, our daily newsletter about the business of tech from Bloomberg’s journalists around the world. Today, Takashi Mochizuki reports on the sluggish sales for independent game makers despite consumer enthusiasm for Nintendo’s Switch 2 console.

Binance-Linked Token Hits Record With Zhao Pardon Buzz

A cryptocurrency with ties to Binance Holdings Ltd. struck an all-time high as speculation builds that the digital-asset exchange’s co-founder Changpeng Zhao will be granted a US presidential pardon.

VCs to AI Startups: Please Take Our Money

Private jets, box seats and big checks. Investors are doing whatever it takes to get into top AI deals.

Apple’s iPhone 17 Line Wins By Returning the Focus to Hardware

Apple Intelligence takes a backseat to fresh designs, a very thin phone and upgraded cameras.

Kenya Yet to Engage Safaricom Board on Splitting Firm, CEO Says

Kenya’s government is yet to engage the board of Safaricom Plc about a potential plan to split its biggest company into three separate firms, according to Chief Executive Officer Peter Ndegwa.

Unity Advisory Co-Founder Set to Exit Consulting Firm Months After Launch

The managing partner and co-founder of Unity Advisory is set to leave the new consultancy business, which launched in June with backing from private equity firm Warburg Pincus.

ASM International Cuts Outlook After Chip Demand Disappoints

ASM International NV cut its sales outlook for the second half of the year, citing lower-than-anticipated demand from some of the semiconductor equipment maker’s clients.

Defense Startup Auterion Raises $130 Million to Become Microsoft for Drones

Auterion, a startup that provides software to military drones, has raised $130 million to expand its operations abroad, including in geopolitical hotspots Ukraine and Taiwan, a further sign that private investors are pouring money into defense.

Trump Trade War Is Helping Jumia Access Chinese Goods, CEO Says

Jumia Technologies AG’s Chief Executive Officer Francis Dufay said the global trade war is benefiting Africa’s biggest e-commerce company by increasing its access to Chinese goods.

Nigerian Fintech Aims at US Market With Eye on African Diaspora

A Nigerian fintech firm is partnering with a US bank to enable customers to link their bank accounts in both countries via a single digital wallet.

New Thai Government Plans Stimulus, Baht Action Before Polls

Thai Prime Minister Anutin Charnvirakul’s new government will unveil plans for short-term economic stimulus to boost consumption and help those struggling with heavy debt, racing to broaden support in its next four months in power.

Huawei Plans Three-Year Campaign to Overtake Nvidia in AI Chips

Huawei Technologies Co. openly admits its silicon can’t match Nvidia Corp.’s in raw power and speed. So to pack the same punch, China’s national champion is counting on its traditional strengths: brute force, networking, and policy support.

Alibaba Tries to Draw Brands on Amazon to Its Global Site

Alibaba Group Holding Ltd. is trying to attract established brand names on Amazon.com Inc. to its global e-commerce site AliExpress, stepping up efforts to expand its footprint on the Seattle-based firm’s home turf.

Wall Street to Tap Engineers in India After $100,000 Fee

Wall Street banks are set to rely more on their Indian business support centers following President Donald Trump’s shock move to impose $100,000 fees on new applications to the widely used H-1B visa program.

Walmart-Backed Flipkart Invests $30 Million in Fintech Arm Supermoney

Flipkart India Pvt., backed by Walmart Inc., is investing $30 million in its fintech unit Supermoney as the e-commerce giant accelerates its push into lending and stock broking, according to people familiar with the matter.

An $800 Billion Revenue Shortfall Threatens AI Future, Bain Says

Artificial intelligence companies like OpenAI have been quick to unveil plans for spending hundreds of billions of dollars on data centers, but they have been slower to show how they will pull in revenue to cover all those expenses. Now, the consulting firm Bain & Co. is estimating the shortfall could be far larger than previously understood.

Social Media Giants Lose Challenge to Experts Testifying on Harm

Jurors will be allowed to hear expert testimony about the impact of social media on young users during coming trials against tech companies over alleged harm caused by their platforms, a Los Angeles judge ruled.

Nvidia, OpenAI Make $100 Billion Deal to Build Data Centers

Nvidia Corp. will invest as much as $100 billion in OpenAI to support new data centers and other artificial intelligence infrastructure, a blockbuster deal that underscores booming demand for AI tools like ChatGPT and the computing power needed to make them run.

Nvidia Says All Customers Will Be ‘Priority’ Despite OpenAI Deal

Nvidia Corp. assured customers that its landmark deal with OpenAI to invest $100 billion and expand AI infrastructure together won’t affect the chipmaker’s relationship with other clients.

Why the US is barring Iranian diplomats from shopping at Costco

As the United Nations gathers in New York, the US State Department says Iranian diplomats are barred from joining wholesale clubs.

I've had an executive Costco membership for 10 years. It pays for itself — and then some.

I've had a Costco executive membership for 10 years. The perks, like the 2% cash back reward and extra shopping hours, make it worth the $130 price.

Family-owned American diners are a dying breed. I visited 2 in New Jersey to see if they can survive.

Abby Narishkin visited two New Jersey diners to see if this dying breed of American institution can survive. She left feeling more optimistic than ever.

I charge $25,000 to help students get into Ivy League colleges. Most teens are making the same mistake.

Parents pay me to help students find their core values and act on them in the real world — instead of just joining a long list of extracurriculars.

TV station owner Nexstar joins Sinclair, says it will continue not to air Jimmy Kimmel

Nexstar Media Group, one of the nation's largest local TV station owners, said it will continue to preempt "Jimmy Kimmel Live!"

Kamala Harris says Biden should've invited Elon Musk to the White House in 2021

The former VP writes in her new book that Musk set off her "spidey senses" long before his MAGA pivot in 2024.

Spirit has already axed flights and asked pilots to take a pay cut. Now it's furloughing 1,800 flight attendants.

Spirit Airlines is cutting costs wherever it can as it battles through its second bankruptcy in less than a year.

I moved to Los Angeles to live on a boat. The past 2 years haven't been all smooth sailing, but life on the water is worth it.

I live on a boat full-time in LA. There are some cons, like less space, but it's cheaper than I expected and I like the community and ocean access.

Why this startup founder scrapped her dating app to build a LinkedIn rival powered by AI

Clara Gold is the French founder behind Gigi, a new AI-powered professional social network. The startup has raised a total of $8 million.

As a psychologist, I know it's normal for parents to get angry. Here's how caregivers can handle their own big feelings.

Anger is not a character flaw in parents. As a psychologist, I know it's an emotion that's necessary for our survival.

The Chicago hotel that invented the brownie is still serving the original 1893 recipe. I've never had a brownie like it.

The brownie, inspired by the Gilded Age socialite Bertha Palmer, was created as part of the preparations for Chicago's 1893 World's Fair.

$12 billion Walleye will back a new $500 million hedge fund from a former Millennium and Citadel healthcare portfolio manager

Soren Gandrud's Jones Hill is expected to begin trading in the first quarter of 2026 with at least $500 million.

Ukraine is ready to export its weapons, like the fearsome sea drones that helped it cripple Russia's Black Sea Fleet

Ukraine sees exporting surplus weapons — like naval drones — as a way to raise funds for the weapons in short supply.

I visited Scotland for the first time. My trip was great, but it would've been better if I'd known these 5 things beforehand.

From what to pack to how to pronounce the names of certain cities, there are a few things I wish I knew before visiting Scotland for the first time.

Google's senior director of product explains how software engineering jobs are changing in the AI era

With AI shifting the role of software engineers, Google's senior director of product says more developers will be involved in deploying products.

Jimmy Kimmel's return doesn't mean the end of Disney's problems

Now that Jimmy Kimmel's show has become a lightning rod, any decision about it going forward will be viewed with a magnifying glass.

Diddy cites his 'extraordinary life' in long shot bid for freedom next month

Sean "Diddy" Combs is hoping to be sprung at next month's sentencing. A Manhattan jury convicted the hip-hop mogul of 2 prostitution-related counts.

I've been on more than 20 cruises. Here are the 9 things I never buy on board.

After going on over 20 cruises with different lines, I know to avoid spending money on unlimited drink packages and overpriced spa products.

Palmer Luckey says founders should look beyond the Bay Area to avoid hiring 'mercenary-minded' tech workers

Palmer Luckey said Bay Area hiring bred "mercenary-minded" workers. He now recruits nationwide, especially veterans, to build Anduril.

I've lived in New England my whole life. There's one town in this region I swear by visiting every fall.

My favorite fall travel location is Stowe, Vermont. I love checking out the corn mazes and visiting neighboring towns like Killington and Waterbury.

Why One VC Thinks Quantum Is a Bigger Unlock Than AGI

Venture capitalist Alexa von Tobel is ready to bet on quantum computing—starting with hardware.

How Signal’s Meredith Whittaker Remembers SignalGate: ‘No Fucking Way’

The Signal Foundation president recalls where she was when she heard Trump cabinet officials had added a journalist to a highly sensitive group chat.

$3,800 Flights and Aborted Takeoffs: How Trump’s H-1B Announcement Panicked Tech Workers

President Trump’s sudden policy shift sent tech firms scrambling to get immigrant workers back to the US and avoid $100,000 fees.

Louisiana Hands Meta a Tax Break and Power for Its Biggest Data Center

Mark Zuckerberg’s company faces backlash after rowing back promises to create between 300 and 500 new jobs to man its subsidiary’s new data center.

Palantir Wants to Be a Lifestyle Brand

Defense tech giant Palantir is selling T-shirts and tote bags as part of a bid to encourage fans to publicly endorse it.

I Thought I Knew Silicon Valley. I Was Wrong

Tech got what it wanted by electing Trump. A year later, it looks more like a suicide pact.

WIRED’s Politics Issue Cover Is Coming to a City Near You

We’re turning our latest cover into posters, billboards, and even a mural in New York, Los Angeles, Austin, San Francisco, and Washington, DC. Here’s how to find it. (Pics or it didn’t happen.)

Elon Musk Is Out to Rule Space. Can Anyone Stop Him?

With SpaceX and Starlink, Elon Musk controls more than half the world’s rocket launches and thousands of internet satellites. That amounts to immense geopolitical power.

Why OpenAI May Never Generate ROI

Unless infrastructure costs or compute requirements somehow plummet, writes guest author Eugene Malobrodsky, managing partner at One Way Ventures, the billions of realized profits are going into the pockets of the providers of GPUs, energy and other resources, not the foundation model providers.

What We’ve Learned Investing In Challenger Banks Across The Globe

Guest author Arjuna Costa of Flourish Ventures shares what he learned on his journey toward reshaping financial systems by scaling neobanks globally, and why Chime's successful Nasdaq debut proves that building consumer-first financial institutions is not only viable but necessary.

Distyl AI Raises $175M Series B At $1.8B Valuation, Up 9x From Last Funding

Distyl AI, a startup that aims to help Fortune 500 companies become “AI-native,” has raised $175 million in a Series B funding round at a $1.8 billion valuation.

Navan Is Finally Going Public For Real

On Friday, Navan (formerly TripActions) filed its first public IPO prospectus. It comes almost precisely three years after the company first reportedly submitted confidential paperwork for a planned offering.

Nvidia To Invest Up To $100B In OpenAI

Chipmaker Nvidia announced on Monday that it is investing up to $100 billion in OpenAI, but the investment reportedly comes with conditions.

With Amazon And Salesforce As Customers, Agentic AI Startup AppZen Lands $180M To ‘Transform’ Finance Teams

AppZen, which has built an agentic AI platform for finance teams, has raised $180 million in a Series D round, the company told Crunchbase News. Riverwood Capital led the growth financing round.

These Are The Speediest Companies To Go From Series A To Series C

Per Crunchbase data, the past couple of years have seen a sizable cohort of companies that have gone all the way from Series A to Series C between 2023 and this year, with several managing to scale all three stages in less than 12 months.

Market Liquidity And Middle-Market M&A: Waiting For The Breakthrough

As we enter Q4 2025, the long-awaited middle-market M&A boom many in the industry have forecasted has yet to materialize, writes guest author Michael Mufson. He questions if the Fed’s interest rate cuts will be enough to restore equilibrium between capital supply and deal demand.

Discovering Software Parallelization Points Using Deep Neural Networks

arXiv:2509.16215v1 Announce Type: new Abstract: This study proposes a deep learning-based approach for discovering loops in programming code according to their potential for parallelization. Two genetic algorithm-based code generators were developed to produce two distinct types of code: (i) independent loops, which are parallelizable, and (ii) ambiguous loops, whose dependencies are unclear, making it impossible to determine whether the loop is parallelizable. The generated code snippets were tokenized and preprocessed to ensure a robust dataset. Two deep learning models - a Deep Neural Network (DNN) and a Convolutional Neural Network (CNN) - were implemented to perform the classification. Based on 30 independent runs, a robust statistical analysis was employed to verify the expected performance of both models, DNN and CNN. The CNN showed a slightly higher mean performance, but the two models had a similar variability. Experiments with varying dataset sizes highlighted the importance of data diversity for model performance. These results demonstrate the feasibility of using deep learning to automate the identification of parallelizable structures in code, offering a promising tool for software optimization and performance improvement.

Comparison of Deterministic and Probabilistic Machine Learning Algorithms for Precise Dimensional Control and Uncertainty Quantification in Additive Manufacturing

arXiv:2509.16233v1 Announce Type: new Abstract: We present a probabilistic framework to accurately estimate dimensions of additively manufactured components. Using a dataset of 405 parts from nine production runs involving two machines, three polymer materials, and two-part configurations, we examine five key design features. To capture both design information and manufacturing variability, we employ models integrating continuous and categorical factors. For predicting Difference from Target (DFT) values, we test deterministic and probabilistic machine learning methods. Deterministic models, trained on 80% of the dataset, provide precise point estimates, with Support Vector Regression (SVR) achieving accuracy close to process repeatability. To address systematic deviations, we adopt Gaussian Process Regression (GPR) and Bayesian Neural Networks (BNNs). GPR delivers strong predictive performance and interpretability, while BNNs capture both aleatoric and epistemic uncertainties. We investigate two BNN approaches: one balancing accuracy and uncertainty capture, and another offering richer uncertainty decomposition but with lower dimensional accuracy. Our results underscore the importance of quantifying epistemic uncertainty for robust decision-making, risk assessment, and model improvement. We discuss trade-offs between GPR and BNNs in terms of predictive power, interpretability, and computational efficiency, noting that model choice depends on analytical needs. By combining deterministic precision with probabilistic uncertainty quantification, our study provides a rigorous foundation for uncertainty-aware predictive modeling in AM. This approach not only enhances dimensional accuracy but also supports reliable, risk-informed design strategies, thereby advancing data-driven manufacturing methodologies.

SubDyve: Subgraph-Driven Dynamic Propagation for Virtual Screening Enhancement Controlling False Positive

arXiv:2509.16273v1 Announce Type: new Abstract: Virtual screening (VS) aims to identify bioactive compounds from vast chemical libraries, but remains difficult in low-label regimes where only a few actives are known. Existing methods largely rely on general-purpose molecular fingerprints and overlook class-discriminative substructures critical to bioactivity. Moreover, they consider molecules independently, limiting effectiveness in low-label regimes. We introduce SubDyve, a network-based VS framework that constructs a subgraph-aware similarity network and propagates activity signals from a small set of known actives. When few active compounds are available, SubDyve performs iterative seed refinement, incrementally promoting new candidates based on local false discovery rate. This strategy expands the seed set with promising candidates while controlling false positives from topological bias and overexpansion. We evaluate SubDyve on ten DUD-E targets under zero-shot conditions and on the CDK7 target with a 10-million-compound ZINC dataset. SubDyve consistently outperforms existing fingerprint or embedding-based approaches, achieving margins of up to +34.0 on the BEDROC and +24.6 on the EF1% metric.

Stabilizing Information Flow Entropy: Regularization for Safe and Interpretable Autonomous Driving Perception

arXiv:2509.16277v1 Announce Type: new Abstract: Deep perception networks in autonomous driving traditionally rely on data-intensive training regimes and post-hoc anomaly detection, often disregarding fundamental information-theoretic constraints governing stable information processing. We reconceptualize deep neural encoders as hierarchical communication chains that incrementally compress raw sensory inputs into task-relevant latent features. Within this framework, we establish two theoretically justified design principles for robust perception: (D1) smooth variation of mutual information between consecutive layers, and (D2) monotonic decay of latent entropy with network depth. Our analysis shows that, under realistic architectural assumptions, particularly blocks comprising repeated layers of similar capacity, enforcing smooth information flow (D1) naturally encourages entropy decay (D2), thus ensuring stable compression. Guided by these insights, we propose Eloss, a novel entropy-based regularizer designed as a lightweight, plug-and-play training objective. Rather than marginal accuracy improvements, this approach represents a conceptual shift: it unifies information-theoretic stability with standard perception tasks, enabling explicit, principled detection of anomalous sensor inputs through entropy deviations. Experimental validation on large-scale 3D object detection benchmarks (KITTI and nuScenes) demonstrates that incorporating Eloss consistently achieves competitive or improved accuracy while dramatically enhancing sensitivity to anomalies, amplifying distribution-shift signals by up to two orders of magnitude. This stable information-compression perspective not only improves interpretability but also establishes a solid theoretical foundation for safer, more robust autonomous driving perception systems.

Architectural change in neural networks using fuzzy vertex pooling

arXiv:2509.16287v1 Announce Type: new Abstract: The process of pooling vertices involves the creation of a new vertex, which becomes adjacent to all the vertices that were originally adjacent to the endpoints of the vertices being pooled. After this, the endpoints of these vertices and all edges connected to them are removed. In this document, we introduce a formal framework for the concept of fuzzy vertex pooling (FVP) and provide an overview of its key properties with its applications to neural networks. The pooling model demonstrates remarkable efficiency in minimizing loss rapidly while maintaining competitive accuracy, even with fewer hidden layer neurons. However, this advantage diminishes over extended training periods or with larger datasets, where the model's performance tends to degrade. This study highlights the limitations of pooling in later stages of deep learning training, rendering it less effective for prolonged or large-scale applications. Consequently, pooling is recommended as a strategy for early-stage training in advanced deep learning models to leverage its initial efficiency.

Robust LLM Training Infrastructure at ByteDance

arXiv:2509.16293v1 Announce Type: new Abstract: The training scale of large language models (LLMs) has reached tens of thousands of GPUs and is still continuously expanding, enabling faster learning of larger models. Accompanying the expansion of the resource scale is the prevalence of failures (CUDA error, NaN values, job hang, etc.), which poses significant challenges to training stability. Any large-scale LLM training infrastructure should strive for minimal training interruption, efficient fault diagnosis, and effective failure tolerance to enable highly efficient continuous training. This paper presents ByteRobust, a large-scale GPU infrastructure management system tailored for robust and stable training of LLMs. It exploits the uniqueness of the LLM training process and gives top priority to detecting and recovering from failures in a routine manner. Leveraging parallelisms and characteristics of LLM training, ByteRobust enables high-capacity fault tolerance, prompt fault demarcation, and localization with an effective data-driven approach, comprehensively ensuring continuous and efficient training of LLM tasks. ByteRobust is deployed on a production GPU platform with over 200,000 GPUs and achieves 97% ETTR for a three-month training job on 9,600 GPUs.

ROOT: Rethinking Offline Optimization as Distributional Translation via Probabilistic Bridge

arXiv:2509.16300v1 Announce Type: new Abstract: This paper studies the black-box optimization task which aims to find the maxima of a black-box function using a static set of its observed input-output pairs. This is often achieved via learning and optimizing a surrogate function with that offline data. Alternatively, it can also be framed as an inverse modeling task that maps a desired performance to potential input candidates that achieve it. Both approaches are constrained by the limited amount of offline data. To mitigate this limitation, we introduce a new perspective that casts offline optimization as a distributional translation task. This is formulated as learning a probabilistic bridge transforming an implicit distribution of low-value inputs (i.e., offline data) into another distribution of high-value inputs (i.e., solution candidates). Such probabilistic bridge can be learned using low- and high-value inputs sampled from synthetic functions that resemble the target function. These synthetic functions are constructed as the mean posterior of multiple Gaussian processes fitted with different parameterizations on the offline data, alleviating the data bottleneck. The proposed approach is evaluated on an extensive benchmark comprising most recent methods, demonstrating significant improvement and establishing a new state-of-the-art performance.

Auto-bidding under Return-on-Spend Constraints with Uncertainty Quantification

arXiv:2509.16324v1 Announce Type: new Abstract: Auto-bidding systems are widely used in advertising to automatically determine bid values under constraints such as total budget and Return-on-Spend (RoS) targets. Existing works often assume that the value of an ad impression, such as the conversion rate, is known. This paper considers the more realistic scenario where the true value is unknown. We propose a novel method that uses conformal prediction to quantify the uncertainty of these values based on machine learning methods trained on historical bidding data with contextual features, without assuming the data are i.i.d. This approach is compatible with current industry systems that use machine learning to predict values. Building on prediction intervals, we introduce an adjusted value estimator derived from machine learning predictions, and show that it provides performance guarantees without requiring knowledge of the true value. We apply this method to enhance existing auto-bidding algorithms with budget and RoS constraints, and establish theoretical guarantees for achieving high reward while keeping RoS violations low. Empirical results on both simulated and real-world industrial datasets demonstrate that our approach improves performance while maintaining computational efficiency.
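
The abstract above builds on prediction intervals from conformal prediction. As a rough illustration only, the sketch below shows the textbook split-conformal construction, which assumes exchangeable calibration data. The paper states that its method does not assume i.i.d. data, so this is not the authors' procedure. The function name `conformal_halfwidth` and the sample residuals are hypothetical.

```python
import math


def conformal_halfwidth(calibration_residuals, alpha):
    """Half-width q of a split-conformal interval [pred - q, pred + q].

    Textbook construction (assumes exchangeability): take the
    ceil((n + 1) * (1 - alpha))-th smallest absolute residual on a
    held-out calibration set. Not the method of the paper above.
    """
    scores = sorted(abs(r) for r in calibration_residuals)
    n = len(scores)
    rank = math.ceil((n + 1) * (1 - alpha))
    if rank > n:
        # Too few calibration points for this coverage level.
        return math.inf
    return scores[rank - 1]


# Hypothetical residuals (true value minus predicted value) on calibration data.
residuals = [0.1, -0.3, 0.2, 0.5, -0.4, 0.05, 0.25, -0.15, 0.35]
q = conformal_halfwidth(residuals, alpha=0.25)

predicted_value = 2.0  # hypothetical model prediction for a new impression
interval = (predicted_value - q, predicted_value + q)
print(q, interval)
```

With nine calibration points and `alpha=0.25`, the rank is `ceil(10 * 0.75) = 8`, so the half-width is the eighth-smallest absolute residual.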

Highly Imbalanced Regression with Tabular Data in SEP and Other Applications

arXiv:2509.16339v1 Announce Type: new Abstract: We investigate imbalanced regression with tabular data that have an imbalance ratio larger than 1,000 ("highly imbalanced"). Accurately estimating the target values of rare instances is important in applications such as forecasting the intensity of rare harmful Solar Energetic Particle (SEP) events. For regression, the MSE loss does not consider the correlation between predicted and actual values. Typical inverse importance functions allow only convex functions. Uniform sampling might yield mini-batches that do not have rare instances. We propose CISIR that incorporates correlation, Monotonically Decreasing Involution (MDI) importance, and stratified sampling. Based on five datasets, our experimental results indicate that CISIR can achieve lower error and higher correlation than some recent methods. Also, adding our correlation component to other recent methods can improve their performance. Lastly, MDI importance can outperform other importance functions. Our code can be found in https://github.com/Machine-Earning/CISIR.

Estimating Clinical Lab Test Result Trajectories from PPG using Physiological Foundation Model and Patient-Aware State Space Model -- a UNIPHY+ Approach

arXiv:2509.16345v1 Announce Type: new Abstract: Clinical laboratory tests provide essential biochemical measurements for diagnosis and treatment, but are limited by intermittent and invasive sampling. In contrast, photoplethysmogram (PPG) is a non-invasive, continuously recorded signal in intensive care units (ICUs) that reflects cardiovascular dynamics and can serve as a proxy for latent physiological changes. We propose UNIPHY+Lab, a framework that combines a large-scale PPG foundation model for local waveform encoding with a patient-aware Mamba model for long-range temporal modeling. Our architecture addresses three challenges: (1) capturing extended temporal trends in laboratory values, (2) accounting for patient-specific baseline variation via FiLM-modulated initial states, and (3) performing multi-task estimation for interrelated biomarkers. We evaluate our method on the two ICU datasets for predicting the five key laboratory tests. The results show substantial improvements over the LSTM and carry-forward baselines in MAE, RMSE, and $R^2$ among most of the estimation targets. This work demonstrates the feasibility of continuous, personalized lab value estimation from routine PPG monitoring, offering a pathway toward non-invasive biochemical surveillance in critical care.

Improving Deep Tabular Learning

arXiv:2509.16354v1 Announce Type: new Abstract: Tabular data remain a dominant form of real-world information but pose persistent challenges for deep learning due to heterogeneous feature types, lack of natural structure, and limited label-preserving augmentations. As a result, ensemble models based on decision trees continue to dominate benchmark leaderboards. In this work, we introduce RuleNet, a transformer-based architecture specifically designed for deep tabular learning. RuleNet incorporates learnable rule embeddings in a decoder, a piecewise linear quantile projection for numerical features, and feature masking ensembles for robustness and uncertainty estimation. Evaluated on eight benchmark datasets, RuleNet matches or surpasses state-of-the-art tree-based methods in most cases, while remaining computationally efficient, offering a practical neural alternative for tabular prediction tasks.

Guided Sequence-Structure Generative Modeling for Iterative Antibody Optimization

arXiv:2509.16357v1 Announce Type: new Abstract: Therapeutic antibody candidates often require extensive engineering to improve key functional and developability properties before clinical development. This can be achieved through iterative design, where starting molecules are optimized over several rounds of in vitro experiments. While protein structure can provide a strong inductive bias, it is rarely used in iterative design due to the lack of structural data for continually evolving lead molecules over the course of optimization. In this work, we propose a strategy for iterative antibody optimization that leverages both sequence and structure as well as accumulating lab measurements of binding and developability. Building on prior work, we first train a sequence-structure diffusion generative model that operates on antibody-antigen complexes. We then outline an approach to use this model, together with carefully predicted antibody-antigen complexes, to optimize lead candidates throughout the iterative design process. Further, we describe a guided sampling approach that biases generation toward desirable properties by integrating models trained on experimental data from iterative design. We evaluate our approach in multiple in silico and in vitro experiments, demonstrating that it produces high-affinity binders at multiple stages of an active antibody optimization campaign.

EMPEROR: Efficient Moment-Preserving Representation of Distributions

arXiv:2509.16379v1 Announce Type: new Abstract: We introduce EMPEROR (Efficient Moment-Preserving Representation of Distributions), a mathematically rigorous and computationally efficient framework for representing high-dimensional probability measures arising in neural network representations. Unlike heuristic global pooling operations, EMPEROR encodes a feature distribution through its statistical moments. Our approach leverages the theory of sliced moments: features are projected onto multiple directions, lightweight univariate Gaussian mixture models (GMMs) are fit to each projection, and the resulting slice parameters are aggregated into a compact descriptor. We establish determinacy guarantees via Carleman's condition and the Cramér-Wold theorem, ensuring that the GMM is uniquely determined by its sliced moments, and we derive finite-sample error bounds that scale optimally with the number of slices and samples. Empirically, EMPEROR captures richer distributional information than common pooling schemes across various data modalities, while remaining computationally efficient and broadly applicable.

CoUn: Empowering Machine Unlearning via Contrastive Learning

arXiv:2509.16391v1 Announce Type: new Abstract: Machine unlearning (MU) aims to remove the influence of specific "forget" data from a trained model while preserving its knowledge of the remaining "retain" data. Existing MU methods based on label manipulation or model weight perturbations often achieve limited unlearning effectiveness. To address this, we introduce CoUn, a novel MU framework inspired by the observation that a model retrained from scratch using only retain data classifies forget data based on their semantic similarity to the retain data. CoUn emulates this behavior by adjusting learned data representations through contrastive learning (CL) and supervised learning, applied exclusively to retain data. Specifically, CoUn (1) leverages semantic similarity between data samples to indirectly adjust forget representations using CL, and (2) maintains retain representations within their respective clusters through supervised learning. Extensive experiments across various datasets and model architectures show that CoUn consistently outperforms state-of-the-art MU baselines in unlearning effectiveness. Additionally, integrating our CL module into existing baselines empowers their unlearning effectiveness.

Federated Learning for Financial Forecasting

arXiv:2509.16393v1 Announce Type: new Abstract: This paper studies Federated Learning (FL) for binary classification of volatile financial market trends. Using a shared Long Short-Term Memory (LSTM) classifier, we compare three scenarios: (i) a centralized model trained on the union of all data, (ii) a single-agent model trained on an individual data subset, and (iii) a privacy-preserving FL collaboration in which agents exchange only model updates, never raw data. We then extend the study with additional market features, deliberately introducing non-independent and identically distributed (non-IID) data across agents, personalized FL, and differential privacy. Our numerical experiments show that FL achieves accuracy and generalization on par with the centralized baseline, while significantly outperforming the single-agent model. The results show that collaborative, privacy-preserving learning provides collective tangible value in finance, even under realistic data heterogeneity and personalization requirements.
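
The third scenario above has agents share model updates rather than raw data. The abstract does not name the aggregation rule, so the sketch below assumes the common federated averaging scheme (a weighted mean of client parameters, weighted by local dataset size). The function name `federated_average` and the toy numbers are hypothetical.

```python
def federated_average(client_params, client_sizes):
    """Weighted mean of per-client parameter vectors.

    Assumption: FedAvg-style aggregation, where each client's parameters
    count in proportion to its number of local training samples. Only the
    parameter vectors are passed in; raw data never leaves the clients.
    """
    total = sum(client_sizes)
    n_params = len(client_params[0])
    return [
        sum(params[i] * size for params, size in zip(client_params, client_sizes)) / total
        for i in range(n_params)
    ]


# Two hypothetical agents with a two-parameter model each.
client_params = [[1.0, 2.0], [3.0, 4.0]]
client_sizes = [1, 3]  # local sample counts

global_params = federated_average(client_params, client_sizes)
print(global_params)  # [2.5, 3.5]
```

The second agent holds three times as much data, so the averaged parameters sit closer to its values.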

GRID: Graph-based Reasoning for Intervention and Discovery in Built Environments

arXiv:2509.16397v1 Announce Type: new Abstract: Manual HVAC fault diagnosis in commercial buildings takes 8-12 hours per incident and achieves only 60 percent diagnostic accuracy, reflecting analytics that stop at correlation instead of causation. To close this gap, we present GRID (Graph-based Reasoning for Intervention and Discovery), a three-stage causal discovery pipeline that combines constraint-based search, neural structural equation modeling, and language model priors to recover directed acyclic graphs from building sensor data. Across six benchmarks: synthetic rooms, EnergyPlus simulation, the ASHRAE Great Energy Predictor III dataset, and a live office testbed, GRID achieves F1 scores ranging from 0.65 to 1.00, with exact recovery (F1 = 1.00) in three controlled environments (Base, Hidden, Physical) and strong performance on real-world data (F1 = 0.89 on ASHRAE, 0.86 in noisy conditions). The method outperforms ten baseline approaches across all evaluation scenarios. Intervention scheduling achieves low operational impact in most scenarios (cost <= 0.026) while reducing risk metrics compared to baseline approaches. The framework integrates constraint-based methods, neural architectures, and domain-specific language model prompts to address the observational-causal gap in building analytics.

Local Mechanisms of Compositional Generalization in Conditional Diffusion

arXiv:2509.16447v1 Announce Type: new Abstract: Conditional diffusion models appear capable of compositional generalization, i.e., generating convincing samples for out-of-distribution combinations of conditioners, but the mechanisms underlying this ability remain unclear. To make this concrete, we study length generalization, the ability to generate images with more objects than seen during training. In a controlled CLEVR setting (Johnson et al., 2017), we find that length generalization is achievable in some cases but not others, suggesting that models only sometimes learn the underlying compositional structure. We then investigate locality as a structural mechanism for compositional generalization. Prior works proposed score locality as a mechanism for creativity in unconditional diffusion models (Kamb & Ganguli, 2024; Niedoba et al., 2024), but did not address flexible conditioning or compositional generalization. In this paper, we prove an exact equivalence between a specific compositional structure ("conditional projective composition") (Bradley et al., 2025) and scores with sparse dependencies on both pixels and conditioners ("local conditional scores"). This theory also extends to feature-space compositionality. We validate our theory empirically: CLEVR models that succeed at length generalization exhibit local conditional scores, while those that fail do not. Furthermore, we show that a causal intervention explicitly enforcing local conditional scores restores length generalization in a previously failing model. Finally, we investigate feature-space compositionality in color-conditioned CLEVR, and find preliminary evidence of compositional structure in SDXL.

Entropic Causal Inference: Graph Identifiability

arXiv:2509.16463v1 Announce Type: new Abstract: Entropic causal inference is a recent framework for learning the causal graph between two variables from observational data by finding the information-theoretically simplest structural explanation of the data, i.e., the model with smallest entropy. In our work, we first extend the causal graph identifiability result in the two-variable setting under relaxed assumptions. We then show the first identifiability result using the entropic approach for learning causal graphs with more than two nodes. Our approach utilizes the property that ancestrality between a source node and its descendants can be determined using the bivariate entropic tests. We provide a sound sequential peeling algorithm for general graphs that relies on this property. We also propose a heuristic algorithm for small graphs that shows strong empirical performance. We rigorously evaluate the performance of our algorithms on synthetic data generated from a variety of models, observing improvement over prior work. Finally we test our algorithms on real-world datasets.

Towards Universal Debiasing for Language Models-based Tabular Data Generation

arXiv:2509.16475v1 Announce Type: new Abstract: Large language models (LLMs) have achieved promising results in tabular data generation. However, inherent historical biases in tabular datasets often cause LLMs to exacerbate fairness issues, particularly when multiple advantaged and protected features are involved. In this work, we introduce a universal debiasing framework that minimizes group-level dependencies by simultaneously reducing the mutual information between advantaged and protected attributes. By leveraging the autoregressive structure and analytic sampling distributions of LLM-based tabular data generators, our approach efficiently computes mutual information, reducing the need for cumbersome numerical estimations. Building on this foundation, we propose two complementary methods: a direct preference optimization (DPO)-based strategy, namely UDF-DPO, that integrates seamlessly with existing models, and a targeted debiasing technique, namely UDF-MIX, that achieves debiasing without tuning the parameters of LLMs. Extensive experiments demonstrate that our framework effectively balances fairness and utility, offering a scalable and practical solution for debiasing in high-stakes applications.

Revisiting Broken Windows Theory

arXiv:2509.16490v1 Announce Type: new Abstract: We revisit the longstanding question of how physical structures in urban landscapes influence crime. Leveraging machine learning-based matching techniques to control for demographic composition, we estimate the effects of several types of urban structures on the incidence of violent crime in New York City and Chicago. We additionally contribute to a growing body of literature documenting the relationship between perception of crime and actual crime rates by separately analyzing how the physical urban landscape shapes subjective feelings of safety. Our results are twofold. First, in consensus with prior work, we demonstrate a "broken windows" effect in which abandoned buildings, a sign of social disorder, are associated with both greater incidence of crime and a heightened perception of danger. This is also true of types of urban structures that draw foot traffic such as public transportation infrastructure. Second, these effects are not uniform within or across cities. The criminogenic effects of the same structure types across two cities differ in magnitude, degree of spatial localization, and heterogeneity across subgroups, while within the same city, the effects of different structure types are confounded by different demographic variables. Taken together, these results emphasize that one-size-fits-all approaches to crime reduction are untenable and policy interventions must be specifically tailored to their targets.

FairTune: A Bias-Aware Fine-Tuning Framework Towards Fair Heart Rate Prediction from PPG

arXiv:2509.16491v1 Announce Type: new Abstract: Foundation models pretrained on physiological data such as photoplethysmography (PPG) signals are increasingly used to improve heart rate (HR) prediction across diverse settings. Fine-tuning these models for local deployment is often seen as a practical and scalable strategy. However, its impact on demographic fairness, particularly under domain shifts, remains underexplored. We fine-tune PPG-GPT, a transformer-based foundation model pretrained on intensive care unit (ICU) data, across three heterogeneous datasets (ICU, wearable, smartphone) and systematically evaluate the effects on HR prediction accuracy and gender fairness. While fine-tuning substantially reduces mean absolute error (by up to 80%), it can simultaneously widen fairness gaps, especially in larger models and under significant distribution shifts. To address this, we introduce FairTune, a bias-aware fine-tuning framework in which we benchmark three mitigation strategies: class weighting based on inverse group frequency (IF), Group Distributionally Robust Optimization (GroupDRO), and adversarial debiasing (ADV). We find that IF and GroupDRO significantly reduce fairness gaps without compromising accuracy, with effectiveness varying by deployment domain. Representation analyses further reveal that mitigation techniques reshape internal embeddings to reduce demographic clustering. Our findings highlight that fairness does not emerge as a natural byproduct of fine-tuning and that explicit mitigation is essential for equitable deployment of physiological foundation models.
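
One common way to implement weighting by inverse group frequency is sketched below. The abstract does not give FairTune's exact formulation, so the normalization (weights that sum to the number of samples) and the function name are assumptions made for illustration.

```python
from collections import Counter

def inverse_frequency_weights(groups):
    """Per-sample weights proportional to 1 / (size of the sample's group).

    Normalized so that every group contributes the same total weight and
    the weights sum to len(groups). This normalization is an assumption,
    not something taken from the paper.
    """
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]

# Hypothetical batch with one sample from group "F" and three from group "M".
weights = inverse_frequency_weights(["F", "M", "M", "M"])
print(weights)
```

In a training loop these weights would multiply each sample's loss, so errors on the under-represented group count for more.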

A Closer Look at Model Collapse: From a Generalization-to-Memorization Perspective

arXiv:2509.16499v1 Announce Type: new Abstract: The widespread use of diffusion models has led to an abundance of AI-generated data, raising concerns about model collapse -- a phenomenon in which recursive iterations of training on synthetic data lead to performance degradation. Prior work primarily characterizes this collapse via variance shrinkage or distribution shift, but these perspectives miss practical manifestations of model collapse. This paper identifies a transition from generalization to memorization during model collapse in diffusion models, where models increasingly replicate training data instead of generating novel content during iterative training on synthetic samples. This transition is directly driven by the declining entropy of the synthetic training data produced in each training cycle, which serves as a clear indicator of model degradation. Motivated by this insight, we propose an entropy-based data selection strategy to mitigate the transition from generalization to memorization and alleviate model collapse. Empirical results show that our approach significantly enhances visual quality and diversity in recursive generation, effectively preventing collapse.

GRIL: Knowledge Graph Retrieval-Integrated Learning with Large Language Models

arXiv:2509.16502v1 Announce Type: new Abstract: Retrieval-Augmented Generation (RAG) has significantly mitigated the hallucinations of Large Language Models (LLMs) by grounding the generation with external knowledge. Recent extensions of RAG to graph-based retrieval offer a promising direction, leveraging the structural knowledge for multi-hop reasoning. However, existing graph RAG methods typically decouple the retrieval and reasoning processes, which prevents the retriever from adapting to the reasoning needs of the LLM. They also struggle with scalability when performing multi-hop expansion over large-scale graphs, or depend heavily on annotated ground-truth entities, which are often unavailable in open-domain settings. To address these challenges, we propose a novel graph retriever trained end-to-end with the LLM, which features an attention-based growing and pruning mechanism, adaptively navigating multi-hop relevant entities while filtering out noise. Within the extracted subgraph, structural knowledge and semantic features are encoded via soft tokens and the verbalized graph, respectively, which are infused into the LLM together, thereby enhancing its reasoning capability and facilitating interactive joint training of the graph retriever and the LLM reasoner. Experimental results across three QA benchmarks show that our approach consistently achieves state-of-the-art performance, validating the strength of joint graph-LLM optimization for complex reasoning tasks. Notably, our framework eliminates the need for predefined ground-truth entities by directly optimizing the retriever using LLM logits as implicit feedback, making it especially effective in open-domain settings.

Federated Learning with Ad-hoc Adapter Insertions: The Case of Soft-Embeddings for Training Classifier-as-Retriever

arXiv:2509.16508v1 Announce Type: new Abstract: When existing retrieval-augmented generation (RAG) solutions are intended to be used for new knowledge domains, it is necessary to update their encoders, which are taken to be pretrained large language models (LLMs). However, fully finetuning these large models is compute- and memory-intensive, and even infeasible when deployed on resource-constrained edge devices. We propose a novel encoder architecture in this work that addresses this limitation by using a frozen small language model (SLM), which satisfies the memory constraints of edge devices, and inserting a small adapter network before the transformer blocks of the SLM. The trainable adapter takes the token embeddings of the new corpus and learns to produce enhanced soft embeddings for it, while requiring significantly less compute power to update than full fine-tuning. We further propose a novel retrieval mechanism by attaching a classifier head to the SLM encoder, which is trained to learn a similarity mapping of the input embeddings to their corresponding documents. Finally, to enable the online fine-tuning of both (i) the encoder soft embeddings and (ii) the classifier-as-retriever on edge devices, we adopt federated learning (FL) and differential privacy (DP) to achieve an efficient, privacy-preserving, and product-grade training solution. We conduct a theoretical analysis of our methodology, establishing convergence guarantees under mild assumptions on gradient variance when deployed for general smooth nonconvex loss functions. Through extensive numerical experiments, we demonstrate (i) the efficacy of obtaining soft embeddings to enhance the encoder, (ii) training a classifier to improve the retriever, and (iii) the role of FL in achieving speedup.

LLM-Guided Co-Training for Text Classification

arXiv:2509.16516v1 Announce Type: new Abstract: In this paper, we introduce a novel weighted co-training approach that is guided by Large Language Models (LLMs). Namely, in our co-training approach, we use LLM labels on unlabeled data as target labels and co-train two encoder-only networks that train each other over multiple iterations: first, all samples are forwarded through each network and historical estimates of each network's confidence in the LLM label are recorded; second, a dynamic importance weight is derived for each sample according to each network's belief in the quality of the LLM label for that sample; finally, the two networks exchange importance weights with each other -- each network back-propagates all samples weighted with the importance weights coming from its peer network and updates its own parameters. By strategically utilizing LLM-generated guidance, our approach significantly outperforms conventional semi-supervised learning (SSL) methods, particularly in settings with abundant unlabeled data. Empirical results show that it achieves state-of-the-art performance on 4 out of 5 benchmark datasets and ranks first among 14 compared methods according to the Friedman test. Our results highlight a new direction in semi-supervised learning -- where LLMs serve as knowledge amplifiers, enabling backbone co-training models to achieve state-of-the-art performance efficiently.

mmExpert: Integrating Large Language Models for Comprehensive mmWave Data Synthesis and Understanding

arXiv:2509.16521v1 Announce Type: new Abstract: Millimeter-wave (mmWave) sensing technology holds significant value in human-centric applications, yet the high costs associated with data acquisition and annotation limit its widespread adoption in our daily lives. Concurrently, the rapid evolution of large language models (LLMs) has opened up opportunities for addressing complex human needs. This paper presents mmExpert, an innovative mmWave understanding framework consisting of a data generation flywheel that leverages LLMs to automate the generation of synthetic mmWave radar datasets for specific application scenarios, thereby training models capable of zero-shot generalization in real-world environments. Extensive experiments demonstrate that the data synthesized by mmExpert significantly enhances the performance of downstream models and facilitates the successful deployment of large models for mmWave understanding.

SCAN: Self-Denoising Monte Carlo Annotation for Robust Process Reward Learning

arXiv:2509.16548v1 Announce Type: new Abstract: Process reward models (PRMs) offer fine-grained, step-level evaluations that facilitate deeper reasoning processes in large language models (LLMs), proving effective in complex tasks like mathematical reasoning. However, developing PRMs is challenging due to the high cost and limited scalability of human-annotated data. Synthetic data from Monte Carlo (MC) estimation is a promising alternative but suffers from a high noise ratio, which can cause overfitting and hinder large-scale training. In this work, we conduct a preliminary study on the noise distribution in synthetic data from MC estimation, identifying that annotation models tend to both underestimate and overestimate step correctness due to limitations in their annotation capabilities. Building on these insights, we propose Self-Denoising Monte Carlo Annotation (SCAN), an efficient data synthesis and noise-tolerant learning framework. Our key findings indicate that: (1) Even lightweight models (e.g., 1.5B parameters) can produce high-quality annotations through a self-denoising strategy, enabling PRMs to achieve superior performance with only 6% of the inference cost required by vanilla MC estimation. (2) With our robust learning strategy, PRMs can effectively learn from this weak supervision, achieving a 39.2-point F1 improvement (from 19.9 to 59.1) on ProcessBench. Despite using only a compact synthetic dataset, our models surpass strong baselines, including those trained on large-scale human-annotated datasets such as PRM800K. Furthermore, performance continues to improve as we scale up the synthetic data, highlighting the potential of SCAN for scalable, cost-efficient, and robust PRM training.
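
The vanilla MC estimation that this abstract treats as its baseline can be sketched roughly as follows: a reasoning step is scored by the fraction of sampled completions that reach a correct final answer. This is not SCAN's self-denoising strategy. `rollout`, `is_correct`, and the toy stand-ins are hypothetical placeholders for an LLM sampler and an answer checker.

```python
import random

def mc_step_score(prefix, rollout, is_correct, num_rollouts=8, seed=0):
    """Estimate how likely a partial solution is to reach a correct answer.

    rollout(prefix, rng) completes the solution and returns a final answer;
    is_correct(answer) checks it. Both are hypothetical stand-ins.
    """
    rng = random.Random(seed)
    hits = sum(1 for _ in range(num_rollouts) if is_correct(rollout(prefix, rng)))
    return hits / num_rollouts

# Toy stand-ins: a prefix containing a wrong step usually ends in a wrong answer.
def toy_rollout(prefix, rng):
    p_correct = 0.1 if "wrong" in prefix else 0.9
    return "42" if rng.random() < p_correct else "0"

def toy_is_correct(answer):
    return answer == "42"

good = mc_step_score(["step 1", "step 2"], toy_rollout, toy_is_correct, num_rollouts=200)
bad = mc_step_score(["step 1", "wrong"], toy_rollout, toy_is_correct, num_rollouts=200)
print(good, bad)
```

With only a handful of rollouts per step the estimate is noisy, which is the kind of label noise the abstract says can cause overfitting.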

ViTCAE: ViT-based Class-conditioned Autoencoder

arXiv:2509.16554v1 Announce Type: new Abstract: Vision Transformer (ViT) based autoencoders often underutilize the global Class token and employ static attention mechanisms, limiting both generative control and optimization efficiency. This paper introduces ViTCAE, a framework that addresses these issues by re-purposing the Class token into a generative linchpin. In our architecture, the encoder maps the Class token to a global latent variable that dictates the prior distribution for local, patch-level latent variables, establishing a robust dependency where global semantics directly inform the synthesis of local details. Drawing inspiration from opinion dynamics, we treat each attention head as a dynamical system of interacting tokens seeking consensus. This perspective motivates a convergence-aware temperature scheduler that adaptively anneals each head's influence function based on its distributional stability. This process enables a principled head-freezing mechanism, guided by theoretically-grounded diagnostics like an attention evolution distance and a consensus/cluster functional. This technique prunes converged heads during training to significantly improve computational efficiency without sacrificing fidelity. By unifying a generative Class token with an adaptive attention mechanism rooted in multi-agent consensus theory, ViTCAE offers a more efficient and controllable approach to transformer-based generation.
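
The abstract does not spell out the scheduler, but the basic effect of a temperature on attention weights can be shown with a minimal sketch. The function below is a generic temperature-scaled softmax, not ViTCAE's convergence-aware scheduler. The scores and temperatures are made-up values.

```python
import math

def softmax_with_temperature(scores, temperature):
    """Softmax over scores / temperature, computed in a numerically stable way."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    m = max(scaled)  # subtract the max before exponentiating to avoid overflow
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical attention scores of one query token over three keys.
scores = [2.0, 1.0, 0.0]
sharp = softmax_with_temperature(scores, 0.5)   # low temperature: peaked weights
smooth = softmax_with_temperature(scores, 2.0)  # high temperature: flatter weights
print(sharp, smooth)
```

Lowering the temperature concentrates weight on the highest-scoring token, so annealing it over training makes a head's attention pattern progressively sharper.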

Learned Digital Codes for Over-the-Air Federated Learning