BLOG POST

AI Daily

Nvidia, Fujitsu Unveil AI, Robotics Partnership

The partners said their expanded collaboration will help support the 'AI industrial revolution.'

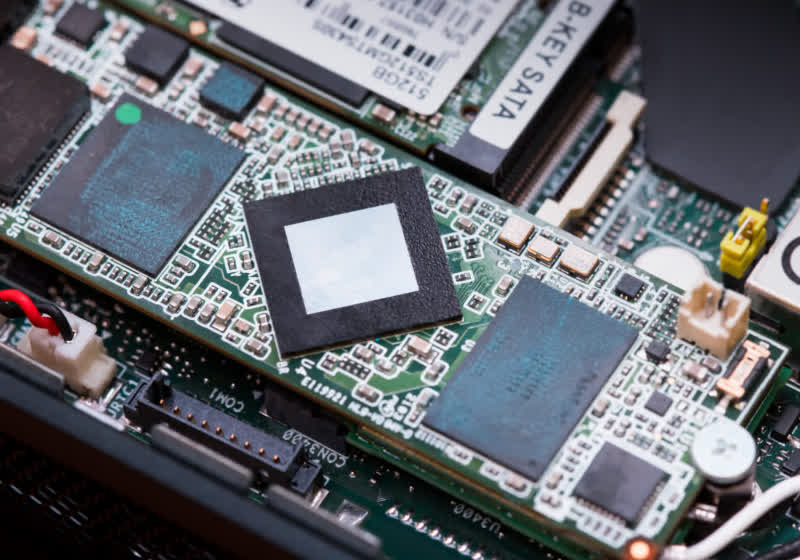

OpenAI and AMD Strike Major AI Partnership

The multibillion-dollar deal between OpenAI and AMD for compute power could make AMD a key competitor to AI chip giant Nvidia.

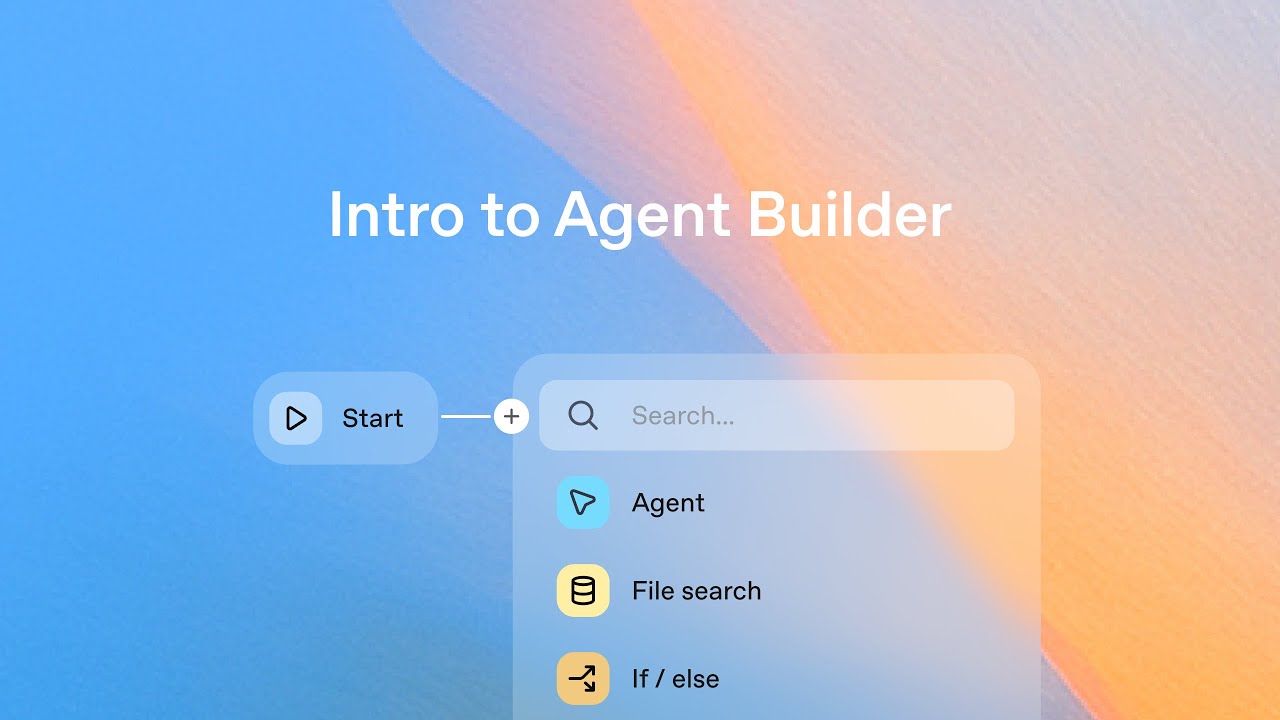

OpenAI unveils AgentKit that lets developers drag and drop to build AI agents

OpenAI launched an agent builder that the company hopes will eliminate fragmented tools and make it easier for enterprises to utilize OpenAI’s system to create agents.

AgentKit, announced during OpenAI’s DevDay in San Francisco, enables developers and enterprises to build agents and add chat capabilities in one place, potentially competing with platforms like Zapier.

By offering a more streamlined way to create agents, OpenAI advances further into becoming a full-stack application provider.

“Until now, building agents meant juggling fragmented tools—complex orchestration with no versioning, custom connectors, manual eval pipelines, prompt tuning, and weeks of frontend work before launch,” the company said in a blog post.

AgentKit includes:

Agent Builder, a visual canvas where developers can create and version multi-agent workflows and see what they've built

Connector Registry is a central area for admins to manage connections across OpenAI products. A Global Admin console will be a prerequisite to using this feature.

ChatKit enables users to integrate chat-based agents into their user interfaces.

Eventually, OpenAI said it will build a standalone Workflows API and add agent deployment tabs to ChatGPT.

OpenAI also expanded evaluation for agents, adding capabilities such as datasets with automated graders and annotations, trace grading that runs end-to-end assessments of workflows, automated prompt optimization, and support for third-party agent measurement tools.
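OpenAI did not detail how its graders or trace grading work internally. As a rough illustration of the general idea, the sketch below pairs each dataset row (an agent trace plus an expected answer) with automated graders. One grader checks only the final answer, and the other inspects the intermediate steps of the trace. It uses only the standard library, and every class and function name is hypothetical. None of it mirrors OpenAI's evals API or data schema.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical data shapes for illustration only; not OpenAI's evals schema.
@dataclass
class Step:
    tool: str    # name of the tool or sub-agent invoked at this step
    output: str  # what that step returned

@dataclass
class Trace:
    steps: list[Step] = field(default_factory=list)
    final_answer: str = ""

# A grader scores one trace against the dataset row's expected answer, in [0, 1].
Grader = Callable[[Trace, str], float]

def answer_grader(trace: Trace, expected: str) -> float:
    """Outcome check: does the final answer mention the expected text?"""
    return 1.0 if expected.lower() in trace.final_answer.lower() else 0.0

def tool_grader(tool_name: str) -> Grader:
    """Trace check: did the workflow call `tool_name` at any step?"""
    def grade(trace: Trace, expected: str) -> float:
        return 1.0 if any(step.tool == tool_name for step in trace.steps) else 0.0
    return grade

def run_eval(dataset: list[tuple[Trace, str]], graders: list[Grader]) -> float:
    """Mean score over every (dataset row, grader) pair; 0.0 for an empty run."""
    scores = [g(trace, expected) for trace, expected in dataset for g in graders]
    return sum(scores) / len(scores) if scores else 0.0
```

Suppose one row's agent reads the agenda and recommends the AgentKit session, and another row's agent skips the tool and gives an off-target answer. The first row passes both graders and the second fails both, so the mean is 0.5. Grading the trace as well as the answer exposes agents that reach a right-looking answer by the wrong route.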

Developers can already access some AgentKit features, while OpenAI is gradually rolling out others, such as Agent Builder. Agent Builder is currently available in beta, while ChatKit and the new evaluation capabilities are generally available. Connector Registry “is beginning its beta rollout to some API and ChatGPT Enterprise and Edu users.”

OpenAI said pricing for AgentKit tools will be included in the standard API model pricing.

Many agents are already built on OpenAI's models, but enterprises often access GPT-5 through other platforms to create them. AgentKit pulls enterprises further into OpenAI's own ecosystem, so they need to tap other platforms less often.

Demonstrating Agent Builder at DevDay, the company said the tool is ideal for rapid iteration and gives developers visibility into how their agents are working.

During the demo, an OpenAI developer made an agent that reads the DevDay agenda and suggests panels to watch. It took her just under eight minutes.

Other model providers saw the importance of offering developer toolkits to build agents to entice enterprises to use more of their tools. Google came out with its Agent Development Kit in April, expanding multi-agent system building “in under 100 lines of code.” Microsoft, which runs the popular agent framework AutoGen, announced it is bringing agent creation to one place with its new Agent Framework.

OpenAI customer Ramp, a fintech company, said in a blog post that its teams were able to build a procurement agent in a few hours instead of months.

“Agent Builder transformed what once took months of complex orchestration, custom code, and manual optimizations into just a couple of hours. The visual canvas keeps product, legal, and engineering on the same page, slashing iteration cycles by 70% and getting an agent live in two sprints rather than two quarters,” Ramp said.

AgentKit’s Connector Registry will also enable enterprises to manage and maintain data across workspaces, consolidating data sources into a single panel that spans both ChatGPT and the API. It will have pre-built connectors to Dropbox, Google Drive, SharePoint and Microsoft Teams, and it also supports third-party MCP servers.

Another capability of Agent Builder is Guardrails, an open-source safety layer that protects against the leakage of personally identifiable information (PII), jailbreaks, and unintended or malicious behavior.
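The text does not describe how Guardrails detects PII. The sketch below uses a simpler, swapped-in technique: pattern-based redaction that could run on agent output before it reaches a user. It is not the Guardrails API. The labels and regular expressions are illustrative assumptions and cover only a few US-style formats.

```python
import re

# Illustrative patterns only: real PII detection needs far broader coverage
# (names, addresses, international formats) and often ML-based detectors.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "US_PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}

def redact_pii(text: str) -> tuple[str, list[str]]:
    """Replace each match with a [LABEL] placeholder; return new text and labels found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        # subn returns the rewritten string and the number of substitutions made
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found
```

For example, `redact_pii("Email jane@example.com or call 555-123-4567.")` yields `"Email [EMAIL] or call [US_PHONE]."` and reports both labels. A guardrail could either log the labels or block the response outright.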

Since most agentic interactions involve chat, it makes sense to simplify the process for developers to set up chat interfaces and connect them with the agents they’ve just built.

“Deploying chat UIs for agents can be surprisingly complex—handling streaming responses, managing threads, showing the model thinking and designing engaging in-chat experiences,” OpenAI said.

The company said ChatKit makes it simple to embed chat agents into apps or websites.

However, some OpenAI competitors have begun thinking beyond the chatbot and want to offer agentic interactions that feel more seamless. Google’s asynchronous coding agent, Jules, has introduced a new feature that enables users to interact with the agent through the command-line interface, eliminating the need to open a chat window.

The response to AgentKit has mainly been positive, with some developers noting that while it simplifies agent building, it doesn’t mean that everyone can now build agents.

Several developers view AgentKit not as a Zapier killer but as a tool that complements existing pipelines.

Zapier debuted a no-code tool for building AI agents and bots, called Zapier Central, in 2024.

OpenAI announces Apps SDK allowing ChatGPT to launch and run third party apps like Zillow, Canva, Spotify

OpenAI's annual conference for third-party developers, DevDay, kicked off with a bang today as co-founder and CEO Sam Altman announced a new "Apps SDK" that makes it "possible to build apps inside of ChatGPT." These include paid apps, which companies can charge users for through OpenAI's recently unveiled Agentic Commerce Protocol (ACP).

In other words, instead of launching apps one-by-one on your phone, computer, or on the web — now you can do all that without ever leaving ChatGPT.

This feature lets users log into their accounts on those external apps, bring their information into ChatGPT, and use the apps much as they already do outside the chatbot. The difference is that they can now also ask ChatGPT to perform actions, analyze content, or go beyond what each app could offer on its own.

You can direct Canva to make you slides based on a text description, ask Zillow for home listings in a certain area that fit certain requirements, or ask Coursera about a specific lesson's content while it plays on video, all from within ChatGPT. Many other apps already offer their own connections as well (see below).

"This will enable a new generation of apps that are interactive, adaptive and personalized, that you can chat with," Altman said.

While the Apps SDK is available today in preview, OpenAI said it would not begin accepting new apps within ChatGPT or allow them to charge users until "later this year."

ChatGPT in-line app access is already rolling out to ChatGPT Free, Plus, Go and Pro users — outside of the European Union only for now — with Business, Enterprise, and Education tiers expected to receive access to the apps later this year.

Built atop common MCP standard

The Apps SDK is built on the Model Context Protocol (MCP), the open source standard that rival Anthropic introduced nearly a year ago. It lets third-party developers, whether working independently or on behalf of enterprises large and small, connect selected data, "trigger actions, and render a fully interactive UI [user interface]," Altman explained during his introductory keynote.

The Apps SDK includes a "talking to apps" feature. It allows ChatGPT, and the GPT-5 or other "o-series" model powering it, to obtain updated context from the third-party app or service. As a result, the model "always knows about exactly what your user is interacting with," according to another presenter, OpenAI engineer Alexi Christakis.
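MCP messages are JSON-RPC 2.0. The MCP specification defines a `tools/call` request that carries a tool `name` and its `arguments`, and results come back as a list of typed content items. The sketch below builds such a request and answers it with a toy in-process server. It omits transport, authentication, and the UI rendering the Apps SDK layers on top. The `search_homes` tool and its listings are hypothetical and are not Zillow's actual integration.

```python
import json
from typing import Any

# Hypothetical tool a real-estate app might expose; name, arguments and data are made up.
def search_homes(city: str, max_price: int) -> list[dict[str, Any]]:
    listings = [
        {"address": "12 Elm St", "city": "Pittsburgh", "price": 250_000},
        {"address": "9 Oak Ave", "city": "Pittsburgh", "price": 410_000},
    ]
    return [h for h in listings if h["city"] == city and h["price"] <= max_price]

TOOLS = {"search_homes": search_homes}

def make_tool_call(req_id: int, name: str, arguments: dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 request in the shape MCP uses for tools/call."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "method": "tools/call",
                       "params": {"name": name, "arguments": arguments}})

def handle(raw: str) -> str:
    """Toy server: supports only tools/call and wraps tool output as text content."""
    req = json.loads(raw)
    if req.get("method") != "tools/call":
        # -32601 is the JSON-RPC 2.0 "Method not found" error code
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"),
                           "error": {"code": -32601, "message": "Method not found"}})
    params = req["params"]
    output = TOOLS[params["name"]](**params["arguments"])
    return json.dumps({"jsonrpc": "2.0", "id": req["id"],
                       "result": {"content": [{"type": "text", "text": json.dumps(output)}],
                                  "isError": False}})
```

In this sketch, the model side would emit something like `make_tool_call(1, "search_homes", {"city": "Pittsburgh", "max_price": 300000})` and read the listing back from `result.content`. Keeping the tool behind a plain JSON-RPC boundary is what lets one app serve any MCP-capable client.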

Developers can build apps that:

appear inline in chat as lightweight cards or carousels

expand to fullscreen for immersive tasks like maps, menus, or slides

use picture-in-picture for live sessions such as video, games, or quizzes

Each mode is designed to preserve ChatGPT’s minimal, conversational flow while adding interactivity and brand presence.

Early integrations with Coursera, Canva, Zillow and more...

Christakis showed off early integrations of external apps built atop the Apps SDK, including ones from e-learning company Coursera, cloud design software company Canva, and real estate listings and agent connections search engine, Zillow.

Altman also announced Apps SDK integrations with additional partners that were not officially demoed during the keynote, including Booking.com, Expedia, Figma and Spotify. OpenAI's documentation lists more upcoming partners in the lifestyle, commerce, and productivity categories: AllTrails, Peloton, OpenTable, Target, theFork, and Uber.

The Coursera demo showed how a user onboards to an external app. A new login screen for Coursera appeared within the ChatGPT chat interface, triggered simply by the user's text prompt: "Coursera, can you teach me something about machine learning?"

Once the user is logged in, the app launches within the chat interface, "in line," and can render anything from the web, including interactive elements like video.

Christakis explained and showed the Apps SDK also supports "picture-in-picture" and "fullscreen" views, allowing the user to choose how to interact with it.

When playing a Coursera video that appeared, he showed that it automatically pinned the video to the top of the screen so the user could keep watching it even as they continued to have a back-and-forth dialog in text with ChatGPT in the typical input/output prompts and responses below.

Users can then ask ChatGPT about content appearing in the video without repeating exactly what was said, because the Apps SDK pipes the information server-side from the connected app to the underlying ChatGPT model. Asking "can you explain more about what they're saying right now" automatically surfaces the relevant portion of the video and passes it to the model, which analyzes it and responds in text.

In another example, Christakis opened an older, existing ChatGPT conversation he'd had about his siblings' dog walking business and resumed the conversation by asking another third-party app, Canva, to generate a poster using one of ChatGPT's recommended business names, "Walk This Wag," along with specific guidance about font choice ("sans serif") and overall coloration and style ("bright and colorful.")

Instead of the user manually having to go and add all those specific elements to a Canva template, ChatGPT went and issued the commands and performed the actions on behalf of the user in the background.

After a few minutes, ChatGPT responded with several poster designs generated directly within the Canva app, but displayed them all in the user's ChatGPT chat session where they could see, review, enlarge and provide feedback or ask for adjustments on all of them.

Christakis then asked ChatGPT to turn one of the posters into an entire slide deck that the founders of the dog walking business could present to investors. ChatGPT worked on this in the background for several minutes while he presented a final integrated app, Zillow.

He started a new chat session and asked a simple question: "Based on our conversations, what would be a good city to expand the dog walking business?"

Using its optional memory feature, ChatGPT referenced the dog walking conversation and suggested Pittsburgh. Christakis then typed "Zillow" and "show me some homes for sale there," which called up an interactive Zillow map of homes for sale, complete with listed prices and hover-over animations, all in-line within ChatGPT.

Clicking a specific home also opened a fullscreen view with "most of the Zillow experience," entirely without leaving ChatGPT. That view includes the ability to request home tours, contact agents, and filter by bedrooms and other qualities like outdoor space. ChatGPT pulls up the requested filtered Zillow search and adds an in-line text response explaining what it did and why.

The user can then ask follow-up questions about the specific property — such as "how close is it to a dog park?" — or compare it to other properties, all within ChatGPT.

ChatGPT can also use apps in conjunction with its Search function, searching the web to compare the app's information (in this case, from Zillow) with other sources.

Safety, privacy, and developer standards

OpenAI emphasized that apps must comply with strict privacy, safety, and content standards to be listed in the ChatGPT directory. Apps must:

serve a clear and valuable purpose

be predictable and reliable in behavior

be safe for general audiences, including teens aged 13–17

respect user privacy and limit data collection to only what’s necessary

Every app must also include a clear, published privacy policy, obtain user consent before connecting, and identify any actions that modify external data (e.g., posting, sending, uploading).
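The requirement to identify actions that modify external data amounts to a policy of marking write actions and gating them on consent. A minimal sketch of that gate follows. The `ToolSpec` class, its `modifies_external_data` flag, and the `run_tool` helper are hypothetical illustrations and do not come from OpenAI's app schema or guidelines.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class ToolSpec:
    name: str
    modifies_external_data: bool  # hypothetical flag: True for posting, sending, uploading

def run_tool(spec: ToolSpec, action: Callable[[], str], user_confirmed: bool) -> str:
    """Run read-only tools directly; refuse write actions until the user confirms."""
    if spec.modifies_external_data and not user_confirmed:
        return f"Confirmation required before running '{spec.name}'."
    return action()
```

Under these assumptions, a read-only `list_playlists` tool runs immediately. A `post_playlist` tool returns a confirmation prompt until the user explicitly approves it.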

Apps violating OpenAI’s usage policies, crashing frequently, or misrepresenting their capabilities may be removed at any time. Developers must submit from verified accounts, provide customer support contacts, and maintain their apps for stability and compliance.

OpenAI also published developer design guidelines, outlining how apps should look, sound, and behave. They must follow ChatGPT’s visual system — including consistent color palettes, typography, spacing, and iconography — and maintain accessibility standards such as alt text and readable contrast ratios.

Partners can show brand logos and accent colors but not alter ChatGPT’s core interface or use promotional language. Apps should remain “conversational, intelligent, simple, responsive, and accessible,” according to the documentation.

A new conversational app ecosystem

By opening ChatGPT to third-party apps and payments, OpenAI is taking a major step toward transforming ChatGPT from a chatbot into a full-fledged AI operating system — one that combines conversational intelligence, rich interfaces, and embedded commerce.

For developers, that means direct access to over 800 million ChatGPT users, who can discover apps “at the right time” through natural conversation — whether planning trips, learning, or shopping.

For users, it means a new generation of apps you can chat with — where a single interface helps you book a flight, design a slide deck, or learn a new skill without ever leaving ChatGPT.

As OpenAI put it: “This is just the start of apps in ChatGPT, bringing new utility to users and new opportunities for developers.”

A few big questions remain. 1. What happens to all the data from those third-party apps as they interface with ChatGPT and its users? Does OpenAI get access to it, and can it train on it? 2. What happens to OpenAI's once much-hyped GPT Store, which had been promoted as a way for third-party creators and developers to build custom, task-specific versions of ChatGPT and earn money from them through a usage-based revenue share model?

We've asked the company about both issues and will update when we hear back.

Eli Lilly to Invest ₹9,000 Crore in Hyderabad, Boost Telangana’s Pharma Leadership

The investment is expected to create thousands of high-value jobs and attract further global partnerships in the sector.

The post Eli Lilly to Invest ₹9,000 Crore in Hyderabad, Boost Telangana’s Pharma Leadership appeared first on Analytics India Magazine.

CoreWeave to Acquire Monolith, Offer Full Stack Research & Design

With this acquisition, CoreWeave will expand into automotive, aerospace, manufacturing and other industries.

The post CoreWeave to Acquire Monolith, Offer Full Stack Research & Design appeared first on Analytics India Magazine.

‘Almost All New Code Written at OpenAI Today is From Codex Users’

Sam Altman stated that nearly every pull request at OpenAI undergoes a Codex review.

The post ‘Almost All New Code Written at OpenAI Today is From Codex Users’ appeared first on Analytics India Magazine.

Deloitte’s A$440,000 ‘Human Intelligence Problem’

At a Senate hearing, Australian Sen. Deborah O’Neill blasted the firm, saying “Deloitte has a human intelligence problem.”

The post Deloitte’s A$440,000 ‘Human Intelligence Problem’ appeared first on Analytics India Magazine.

Your Native Language Might Just Be Your Next Tech Skill

“Startups that can build culturally and linguistically nuanced AI will have a strong global advantage”

The post Your Native Language Might Just Be Your Next Tech Skill appeared first on Analytics India Magazine.

ChatGPT Enters the Super App Era With Apps SDK

The company also announced a new no-code, drag-and-drop AI agent builder.

The post ChatGPT Enters the Super App Era With Apps SDK appeared first on Analytics India Magazine.

MLDS 2026 Set to Redefine the Future of Agentic AI for Developers

Bangalore | 19–20 February 2026 | Nimhans Convention Center

The post MLDS 2026 Set to Redefine the Future of Agentic AI for Developers appeared first on Analytics India Magazine.

Leaked Preview Shows OpenAI’s Agent Builder, a Potential n8n Rival

The tool is expected to be launched at OpenAI’s DevDay event on October 6.

The post Leaked Preview Shows OpenAI’s Agent Builder, a Potential n8n Rival appeared first on Analytics India Magazine.

Discovery of cells that keep immune responses in check wins medicine Nobel Prize

Their work revealed a peripheral mechanism that keeps the immune system from causing harm.

Ted Cruz picks a fight with Wikipedia, accusing platform of left-wing bias

Cruz sends letter demanding answers from Wikimedia Foundation.

OpenAI wants to make ChatGPT into a universal app frontend

Spotify, Canva, Zillow among today's launch partners, more coming later this year.

The neurons that let us see what isn’t there

A standard optical illusion triggers specific neurons in the visual system of mice.

Trump’s EPA sued for axing $7 billion solar energy program

"Solar for All" was designed to drop energy bills by $350 million, lawsuit says.

From the telegraph to AI, our communications systems have always had hidden environmental costs

The telegraph was hailed for its revolutionary ability to span distance. Now AI is being hailed as a great leap forward. But both came with environmental costs.

Robin Williams’ daughter Zelda hits out at AI-generated videos of her dead father: ‘stop doing this to him’

Film-maker tells the public to stop sending her videos, saying: ‘You’re not making art, you’re making disgusting, over-processed hotdogs out of the lives of human beings’

Zelda Williams, the daughter of the late actor and comedian Robin Williams, has spoken out against AI-generated content featuring her father.

“Please, just stop sending me AI videos of Dad,” Zelda wrote in an Instagram story on Monday. “Stop believing I wanna see it or that I’ll understand, I don’t and I won’t. If you’re just trying to troll me, I’ve seen way worse, I’ll restrict and move on. But please, if you’ve got any decency, just stop doing this to him and to me, to everyone even, full stop. It’s dumb, it’s a waste of time and energy, and believe me, it’s NOT what he’d want.”

What happens when AI doesn’t do what it’s supposed to? | Fiona Katauskas

Somebody always ends up paying

Consultants Forced to Pay Money Back After Getting Caught Using AI for Expensive “Report”

"Deloitte has a human intelligence problem."

The post Consultants Forced to Pay Money Back After Getting Caught Using AI for Expensive “Report” appeared first on Futurism.

Stalker Already Using OpenAI’s Sora 2 to Harass Victim

"It is scary to think what AI is doing to feed my stalker's delusions."

The post Stalker Already Using OpenAI’s Sora 2 to Harass Victim appeared first on Futurism.

Taylor Swift Fans Furious as She’s Caught Using Sloppy AI in Video for New Album

"She’s far too rich to be this f***ing cheap."

The post Taylor Swift Fans Furious as She’s Caught Using Sloppy AI in Video for New Album appeared first on Futurism.

Jony Ive Says He Wants His OpenAI Devices to ‘Make Us Happy’

“I don’t think we have an easy relationship with our technology at the moment,” the former Apple designer said at OpenAI's developer conference in San Francisco on Monday.

OpenAI Wants ChatGPT to Be Your Future Operating System

At OpenAI’s Developer Day, CEO Sam Altman showed off apps that run entirely inside the chat window—a new effort to turn ChatGPT into a platform.

OpenAI's Blockbuster AMD Deal Is a Bet on Near-Limitless Demand for AI

OpenAI’s latest move in the race to build massive data centers in the US shows it believes demand for AI will keep surging—even as skeptics warn of a bubble.

WIRED Roundup: The New Fake World of OpenAI’s Social Video App

On this episode of Uncanny Valley, we break down some of the week's best stories, covering everything from Peter Thiel's obsession with the Antichrist to the launch of OpenAI’s new Sora 2 video app.

Jaguar Land Rover to Restart Output in UK After Cyberattack

Jaguar Land Rover Automotive Plc will resume production at some of its UK sites after weeks-long outages following a cyberattack that snarled its global manufacturing network.

Hedge Funds and Crypto Lure Gulf Heirs Set to Inherit $1 Trillion

In 2020, two young brothers from a 135-year-old Gulf merchant family approached one of its money managers with an idea: bet on Bitcoin.

Telefonica Seeks Leaner, Bolder Structure Under Chief’s Review

An executive shake-up, potential job cuts and a pledge to do more deals all offer glimpses into the Telefonica SA chairman’s ongoing strategic review, set to be presented next month with the ambition of kick-starting growth at the Spanish carrier.

LG Electronics India Starts Taking Bids for $1.3 Billion IPO

LG Electronics Inc.’s Indian unit started taking orders for its $1.3 billion initial public offering on Tuesday, joining Tata Capital Ltd. to launch deals in what could be a record month for new listings in the country.

Elon Musk Names Ex-Morgan Stanley Banker CFO of xAI, FT Reports

Elon Musk named a former Morgan Stanley executive the chief financial officer of xAI, the Financial Times reported, appointing an ally to help oversee one of the largest US artificial intelligence startups.

OpenAI Lets ChatGPT Users Connect With Spotify, Zillow In App

OpenAI is making it easier for ChatGPT users to connect with third-party apps within the chatbot to carry out tasks, the company’s latest bid to turn its flagship product into a key gateway for digital services.

Qualtrics Agrees to Buy Press Ganey Forsta in $6.75 Billion Deal

Qualtrics International Inc. agreed to buy health-care survey firm Press Ganey Forsta in a deal valued at $6.75 billion, in the latest private equity-driven acquisition of an enterprise software business.

Supreme Court Denies Google’s Bid to Pause App Store Changes

Alphabet Inc.’s Google lost a US Supreme Court bid to pause major changes to its Google Play app store in an antitrust case filed by Fortnite-maker Epic Games Inc.

AMD's Chip Deal With OpenAI Triggers Explosive Rally | Bloomberg Tech 10/6/2025

AMD CEO Lisa Su and OpenAI President Greg Brockman join Bloomberg Tech to weigh in on the two companies' deal to roll out AI infrastructure, a pact the chipmaker said could generate tens of billions of dollars in new revenue. Plus, White House AI and Crypto Czar David Sacks defends the Trump administration’s approach to China in the context of the global AI race, and Meta CMO Alex Schultz has a new book on how AI is changing the advertising landscape. (Source: Bloomberg)

OpenAI’s Golden Touch Spreads as Stocks Soar

OpenAI has already proved it has a golden touch when it comes to partnering with other tech firms to deploy its artificial intelligence products. Its power to move shares, though, is rapidly spreading to companies it even briefly discusses teaming up with.

AppLovin Probed by SEC Over Its Data-Collection Practices

The Securities and Exchange Commission has been probing the data-collection practices of the mobile advertising tech company AppLovin Corp., according to people familiar with the matter. The company’s shares slid.

Entergy in Talks With Hyperscalers on Big Louisiana Data Centers

Multiple technology companies are seeking to build large data centers in Northern Louisiana after Meta Platforms Inc. announced plans to construct its largest AI-focused facility in the region, according to Entergy Louisiana Chief Executive Officer Phillip May.

David Ellison has a vision for his media empire — whether you like it or not

David Ellison has big plans for Paramount Skydance, backed by his father's billions. Employees and other media insiders are nervous but curious.

Amazon, Meta, Microsoft, and Google are gambling $320 billion on AI infrastructure. The payoff isn't there yet

Big Tech is set to spend $320 billion on capex, mainly for AI infrastructure like data centers. It's a construction boom unlike any in living memory.

Planning a vacation was already a nightmare. Then came AI.

Plane tickets, Airbnbs, rental cars: AI has taken over the travel industry.

Americans are spending like mad. It may not be enough to stop a recession.

Consumers are still spending like mad. But increasing unemployment and a decline in business investment are worrying recession signals.

Athletes on TikTok are freaking out about the Strava-Garmin lawsuit, which hit just before big fall races

Athletes on TikTok are not happy about Strava and Garmin, the "mum and dad" of fitness, clashing in court.

Taylor Swift explains why she won't perform at the Super Bowl: 'I'm just too locked in'

Swift said she did not get an official offer from Jay-Z's team to perform at the Super Bowl, but she also had another reason why she wouldn't do it.

George Clooney says his kids have a 'much better life' living in France than in LA

"But now, for them, it's like — they're not on their iPads, you know?" George Clooney said.

Rivian CEO: Tesla is going all in on cameras, but self-driving cars still need LiDAR

"It's a really great sensor that can do things that cameras can't," Rivian CEO RJ Scaringe said of LiDAR.

Jony Ive says he's juggling up to 20 ideas for OpenAI gadgets

OpenAI acquired Jony Ive's startup earlier this year, sparking speculation about future AI devices the former Apple designer is working on.

Play Store downloads could soon get cheaper after the Supreme Court denies Google's bid to delay antitrust changes

The Supreme Court denied Google's bid to temporarily block parts of a lower court ruling in its legal fight with Epic Games, the maker of Fortnite.

I am an AI scientist at Amazon Web Services. It's not too late to get a Ph.D. in AI.

Girik Malik, 30, says a Ph.D. in AI will become more valuable, not less, as the technology becomes more prevalent.

We retired early and teach a course on financial independence. These are 5 mistakes we keep seeing people make.

Katie and Alan Donegan suggest setting up your index funds and automating your investments — then relax, enjoy life, and stay on track for early retirement.

Bali made sense for business. Moving back to the US was right for our family — for now.

Carey Bentley said the Asia-US time difference made running the company from Bali difficult and prompted them to relocate again.

I lost 60 pounds in my 50s, and I've never felt stronger. Here's how I did it and kept the weight off.

I didn't want my weight holding me back so I transformed my diet and exercise routine after 50. I went from a size 16 to a size 6.

Boeing's 777X was supposed to lead its comeback, but it has been delayed — again. This is why that's such a big deal.

The 777X faces certification in a new era of FAA scrutiny, where Boeing has far less freedom to sign off on its own work.

OpenAI dishes more details on its internal AI software tools, including a sales assistant and customer service agent

The startup revealed more details on its AI-powered internal software tools, including a sales assistant and a customer service agent.

OpenAI just launched its own version of an app store, taking aim at Apple and Google

OpenAI is bringing apps inside ChatGPT and giving developers a kit to build AI-native apps, CEO Sam Altman announced at the company's DevDay event.

Sam Altman is spreading OpenAI's money far and wide to keep the AI race alive

OpenAI CEO Sam Altman is thinking long-term about his company's access to the AI chips that are so vital in the AI race.

This $855 million acquisition could be a boon for Trump's lofty Golden Dome project

Firefly Aerospace acquires defense analytics firm SciTec for $855 million for an edge in defense tech and Trump's Golden Dome project.

The coolest building in every US state

From futuristic-looking skyscrapers to kitsch roadside attractions, the architecture across all 50 states is as diverse as its population and history.

Startups And The Shutdown: When Your Primary Customer Folds Overnight

For thousands of early-stage companies working on AI, biotech, climate tech and more, the U.S. government shutdown is an existential crisis. It doesn’t matter how brilliant their tech is or how slick their pitch deck looks. What matters is whether they’ve built a company resilient enough to survive the silence until Washington switches back on, writes regular columnist Aron Solomon.

Cerebras Systems Pulls Plug On Its IPO Days After Big Fundraise

Just days after raising $1.1 billion in funding, AI processor developer Cerebras Systems has filed to withdraw its hotly anticipated initial public offering.

Decomposing Attention To Find Context-Sensitive Neurons

arXiv:2510.03315v1. Abstract: We study transformer language models, analyzing attention heads whose attention patterns are spread out, and whose attention scores depend weakly on content. We argue that the softmax denominators of these heads are stable when the underlying token distribution is fixed. By sampling softmax denominators from a "calibration text", we can combine together the outputs of multiple such stable heads in the first layer of GPT2-Small, approximating their combined output by a linear summary of the surrounding text. This approximation enables a procedure where from the weights alone - and a single calibration text - we can uncover hundreds of first layer neurons that respond to high-level contextual properties of the surrounding text, including neurons that didn't activate on the calibration text.
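The abstract's key move is freezing the softmax denominator. Once that denominator is measured on calibration text and held fixed, a head's output becomes a sum of independent per-token contributions exp(s_i) * v_i / Z_cal. The minimal single-query sketch below illustrates only that substitution. It does not reproduce the paper's procedure for combining several heads or finding neurons in GPT2-Small. The position-based scores and the noise level are assumptions chosen to mimic a head whose scores "depend weakly on content".

```python
import math
import random

def log_sum_exp(scores: list[float]) -> float:
    """Numerically stable log of the softmax denominator, log(sum_i exp(s_i))."""
    m = max(scores)
    return m + math.log(sum(math.exp(s - m) for s in scores))

def attend_fixed_denominator(scores: list[float], values: list[list[float]],
                             log_z: float) -> list[float]:
    """Single-query attention with a given denominator: sum_i exp(s_i - log_z) * v_i.
    With log_z frozen, each token contributes independently of the others."""
    dim = len(values[0])
    return [sum(math.exp(s - log_z) * v[d] for s, v in zip(scores, values))
            for d in range(dim)]

def attend_exact(scores: list[float], values: list[list[float]]) -> list[float]:
    """Standard softmax attention: the denominator is recomputed for this context."""
    return attend_fixed_denominator(scores, values, log_sum_exp(scores))

if __name__ == "__main__":
    rng = random.Random(0)
    # Assumed head: scores depend mostly on position (base) and weakly on content (noise).
    base = [-0.1 * i for i in range(50)]
    calibration = [b + rng.uniform(-0.05, 0.05) for b in base]
    new_text = [b + rng.uniform(-0.05, 0.05) for b in base]
    values = [[rng.uniform(0.5, 1.5)] for _ in base]
    exact = attend_exact(new_text, values)
    approx = attend_fixed_denominator(new_text, values, log_sum_exp(calibration))
    # The ratio equals Z_new / Z_cal, which stays within exp(-0.1)..exp(0.1) here.
    print(approx[0] / exact[0])
```

The approximation is exact up to the scalar factor Z_new / Z_cal. That scalar stays close to 1 whenever the per-token scores barely change between texts, which is the stability the authors rely on.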

Graph-S3: Enhancing Agentic textual Graph Retrieval with Synthetic Stepwise Supervision

arXiv:2510.03323v1 Announce Type: new Abstract: A significant portion of real-world data is inherently represented as textual graphs, and integrating these graphs into large language models (LLMs) promises to enable complex graph-based question answering. However, a key challenge in LLM-based textual graph QA systems lies in graph retrieval, i.e., how to retrieve relevant content from large graphs that is sufficiently informative while remaining compact for the LLM context. Existing retrievers perform poorly because they either rely on shallow embedding similarity or employ interactive retrieval policies that demand excessive data labeling and training cost. To address these issues, we present Graph-S3, an agentic textual graph reasoning framework that employs an LLM-based retriever trained with synthetic stepwise supervision. Instead of rewarding the agent based on the final answers, which may lead to sparse and unstable training signals, we propose to closely evaluate each step of the retriever based on offline-extracted golden subgraphs. Our main techniques include a data synthesis pipeline to extract the golden subgraphs for reward generation and a two-stage training scheme to learn the interactive graph exploration policy based on the synthesized rewards. In extensive experiments on three common datasets against seven strong baselines, our approach achieves an average improvement of 8.1% in accuracy and 9.7% in F1 score. The advantage is even larger on more complicated multi-hop reasoning tasks. Our code will be open-sourced.
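The abstract does not give the reward formula, so the following is a hypothetical sketch of what stepwise supervision can look like: each retrieval step is scored by how many of its newly retrieved triples fall inside an offline-extracted golden subgraph, giving a dense signal instead of a single reward for the final answer. The triple format and the precision-style reward are assumptions, not Graph-S3's actual definition.

```python
def step_rewards(steps, golden):
    """Hypothetical per-step reward against a golden subgraph.

    steps:  list of sets of (head, relation, tail) triples retrieved at each step
    golden: set of triples in the offline-extracted golden subgraph
    Each step gets the fraction of its *newly* retrieved triples that are golden;
    a step that retrieves nothing new gets 0.0.
    """
    seen = set()
    rewards = []
    for retrieved in steps:
        new = retrieved - seen
        seen |= retrieved
        rewards.append(len(new & golden) / len(new) if new else 0.0)
    return rewards

golden = {("Paris", "capital_of", "France"), ("France", "member_of", "EU")}
steps = [
    {("Paris", "capital_of", "France"), ("Paris", "located_in", "Europe")},  # half relevant
    {("France", "member_of", "EU")},                                          # fully relevant
    {("France", "member_of", "EU")},                                          # redundant repeat
]
print(step_rewards(steps, golden))  # [0.5, 1.0, 0.0]
```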

Implicit Values Embedded in How Humans and LLMs Complete Subjective Everyday Tasks

arXiv:2510.03384v1 Announce Type: new Abstract: Large language models (LLMs) can underpin AI assistants that help users with everyday tasks, such as by making recommendations or performing basic computation. Despite AI assistants' promise, little is known about the implicit values these assistants display while completing subjective everyday tasks. Humans may consider values like environmentalism, charity, and diversity. To what extent do LLMs exhibit these values in completing everyday tasks? How do they compare with humans? We answer these questions by auditing how six popular LLMs complete 30 everyday tasks, comparing LLMs to each other and to 100 human crowdworkers from the US. We find LLMs often do not align with humans, nor with other LLMs, in the implicit values exhibited.

Morpheme Induction for Emergent Language

arXiv:2510.03439v1 Announce Type: new Abstract: We introduce CSAR, an algorithm for inducing morphemes from emergent language corpora of parallel utterances and meanings. It is a greedy algorithm that (1) weights morphemes based on mutual information between forms and meanings, (2) selects the highest-weighted pair, (3) removes it from the corpus, and (4) repeats the process to induce further morphemes (i.e., Count, Select, Ablate, Repeat). The effectiveness of CSAR is first validated on procedurally generated datasets and compared against baselines for related tasks. Second, we validate CSAR's performance on human language data to show that the algorithm makes reasonable predictions in adjacent domains. Finally, we analyze a handful of emergent languages, quantifying linguistic characteristics like degree of synonymy and polysemy.
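The four CSAR steps (Count, Select, Ablate, Repeat) map directly onto a loop. This is a simplified sketch, not the authors' implementation: forms are restricted to single tokens, meanings are sets of atomic labels, and the weight is assumed to be the mutual information between the binary events "form occurs in the utterance" and "meaning is in its meaning set". The toy corpus is invented.

```python
import math
from collections import Counter
from itertools import product

def binary_mi(n_fm, n_f, n_m, n):
    """Mutual information (bits) between 'form present' and 'meaning present' indicators."""
    cells = [  # (joint count, form-marginal count, meaning-marginal count)
        (n_fm, n_f, n_m),
        (n_f - n_fm, n_f, n - n_m),
        (n_m - n_fm, n - n_f, n_m),
        (n - n_f - n_m + n_fm, n - n_f, n - n_m),
    ]
    return sum(j / n * math.log2(j * n / (f * m)) for j, f, m in cells if j > 0)

def csar(corpus, max_morphemes=10):
    corpus = [(list(tokens), set(meanings)) for tokens, meanings in corpus]
    n = len(corpus)
    induced = []
    for _ in range(max_morphemes):
        # Count: number of utterances containing each form, meaning, and (form, meaning) pair.
        f_count, m_count, fm_count = Counter(), Counter(), Counter()
        for tokens, meanings in corpus:
            forms, mset = sorted(set(tokens)), sorted(meanings)
            f_count.update(forms)
            m_count.update(mset)
            fm_count.update(product(forms, mset))
        if not fm_count:
            break

        def weight(fm):
            return binary_mi(fm_count[fm], f_count[fm[0]], m_count[fm[1]], n)

        # Select: the pair with the highest mutual-information weight.
        best = max(fm_count, key=weight)
        if weight(best) <= 0:
            break
        induced.append(best)
        # Ablate: remove the form and the meaning wherever they co-occur.
        form, meaning = best
        for tokens, meanings in corpus:
            if form in tokens and meaning in meanings:
                tokens.remove(form)
                meanings.discard(meaning)
        # Repeat: the next iteration recounts on the ablated corpus.
    return induced

corpus = [
    (["ba", "ku"], {"red", "circle"}),
    (["ba", "zo"], {"red", "square"}),
    (["mi", "ku"], {"blue", "circle"}),
    (["mi", "zo"], {"blue", "square"}),
]
print(csar(corpus))  # [('ba', 'red'), ('ku', 'circle'), ('zo', 'square'), ('mi', 'blue')]
```

Ablation is what keeps the greedy search honest: once "ba" is explained as "red", it can no longer compete for other meanings in later rounds.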

Omni-Embed-Nemotron: A Unified Multimodal Retrieval Model for Text, Image, Audio, and Video

arXiv:2510.03458v1 Announce Type: new Abstract: We present Omni-Embed-Nemotron, a unified multimodal retrieval embedding model developed to handle the increasing complexity of real-world information needs. While Retrieval-Augmented Generation (RAG) has significantly advanced language models by incorporating external knowledge, existing text-based retrievers rely on clean, structured input and struggle with the visually and semantically rich content found in real-world documents such as PDFs, slides, or videos. Recent work such as ColPali has shown that preserving document layout using image-based representations can improve retrieval quality. Building on this, and inspired by the capabilities of recent multimodal models such as Qwen2.5-Omni, we extend retrieval beyond text and images to also support audio and video modalities. Omni-Embed-Nemotron enables both cross-modal (e.g., text-to-video) and joint-modal (e.g., text-to-video+audio) retrieval using a single model. We describe the architecture, training setup, and evaluation results of Omni-Embed-Nemotron, and demonstrate its effectiveness in text, image, and video retrieval.

Searching for the Most Human-like Emergent Language

arXiv:2510.03467v1 Announce Type: new Abstract: In this paper, we design a signalling game-based emergent communication environment to generate state-of-the-art emergent languages in terms of similarity to human language. This is done with hyperparameter optimization, using XferBench as the objective function. XferBench quantifies the statistical similarity of emergent language to human language by measuring its suitability for deep transfer learning to human language. Additionally, we demonstrate the predictive power of entropy on the transfer learning performance of emergent language as well as corroborate previous results on the entropy-minimization properties of emergent communication systems. Finally, we report generalizations regarding what hyperparameters produce more realistic emergent languages, that is, ones which transfer better to human language.

SEER: The Span-based Emotion Evidence Retrieval Benchmark

arXiv:2510.03490v1 Announce Type: new Abstract: We introduce the SEER (Span-based Emotion Evidence Retrieval) Benchmark to test Large Language Models' (LLMs) ability to identify the specific spans of text that express emotion. Unlike traditional emotion recognition tasks that assign a single label to an entire sentence, SEER targets the underexplored task of emotion evidence detection: pinpointing which exact phrases convey emotion. This span-level approach is crucial for applications like empathetic dialogue and clinical support, which need to know how emotion is expressed, not just what the emotion is. SEER includes two tasks: identifying emotion evidence within a single sentence, and identifying evidence across a short passage of five consecutive sentences. It contains new annotations for both emotion and emotion evidence on 1200 real-world sentences. We evaluate 14 open-source LLMs and find that, while some models approach average human performance on single-sentence inputs, their accuracy degrades in longer passages. Our error analysis reveals key failure modes, including overreliance on emotion keywords and false positives in neutral text.

ALHD: A Large-Scale and Multigenre Benchmark Dataset for Arabic LLM-Generated Text Detection

arXiv:2510.03502v1 Announce Type: new Abstract: We introduce ALHD, the first large-scale comprehensive Arabic dataset explicitly designed to distinguish between human- and LLM-generated texts. ALHD spans three genres (news, social media, reviews), covers both MSA and dialectal Arabic, and contains over 400K balanced samples, either generated by three leading LLMs or drawn from multiple human sources, which enables studying generalizability in Arabic LLM-generated text detection. We provide rigorous preprocessing, rich annotations, and standardized balanced splits to support reproducibility. In addition, we present, analyze and discuss benchmark experiments using our new dataset, identifying gaps and proposing future research directions. Benchmarking across traditional classifiers, BERT-based models, and LLMs (zero-shot and few-shot) demonstrates that fine-tuned BERT models achieve competitive performance, outperforming LLM-based models. Results are, however, not always consistent: models struggle to generalize to unseen patterns in cross-genre settings. These challenges are particularly prominent for news articles, where LLM-generated texts resemble human texts in style, which opens up avenues for future research. ALHD establishes a foundation for research on Arabic LLM-generated text detection and on mitigating risks of misinformation, academic dishonesty, and cyber threats.

TS-Reasoner: Aligning Time Series Foundation Models with LLM Reasoning

arXiv:2510.03519v1 Announce Type: new Abstract: Time series reasoning is crucial to decision-making in diverse domains, including finance, energy usage, traffic, weather, and scientific discovery. While existing time series foundation models (TSFMs) can capture low-level dynamic patterns and provide accurate forecasting, further analysis usually requires additional background knowledge and sophisticated reasoning, which are lacking in most TSFMs but can be achieved through large language models (LLMs). On the other hand, without expensive post-training, LLMs often struggle with the numerical understanding of time series data. Although it is intuitive to integrate the two types of models, developing effective training recipes that align the two modalities for reasoning tasks is still an open challenge. To this end, we propose TS-Reasoner that aligns the latent representations of TSFMs with the textual inputs of LLMs for downstream understanding/reasoning tasks. Specifically, we propose a simple yet effective method to curate diverse, synthetic pairs of time series and textual captions for alignment training. We then develop a two-stage training recipe that applies instruction finetuning after the alignment pretraining. Unlike existing works that train an LLM to take time series as inputs, we leverage a pretrained TSFM and freeze it during training. Extensive experiments on several benchmarks demonstrate that TS-Reasoner not only outperforms a wide range of prevailing LLMs, Vision Language Models (VLMs), and Time Series LLMs, but also achieves this with remarkable data efficiency, e.g., using less than half the training data.

Identifying Financial Risk Information Using RAG with a Contrastive Insight

arXiv:2510.03521v1 Announce Type: new Abstract: In specialized domains, humans often compare new problems against similar examples, highlight nuances, and draw conclusions instead of analyzing information in isolation. When applying reasoning in specialized contexts with LLMs on top of a RAG, the pipeline can capture contextually relevant information, but it is not designed to retrieve comparable cases or related problems. While RAG is effective at extracting factual information, its outputs in specialized reasoning tasks often remain generic, reflecting broad facts rather than context-specific insights. In finance, this produces generic risks that apply to most companies. To address this limitation, we propose a peer-aware comparative inference layer on top of RAG. Our contrastive approach outperforms baseline RAG in text generation metrics such as ROUGE and BERTScore in comparison with human-generated equity research and risk.

Sample, Align, Synthesize: Graph-Based Response Synthesis with ConGrs

arXiv:2510.03527v1 Announce Type: new Abstract: Language models can be sampled multiple times to access the distribution underlying their responses, but existing methods cannot efficiently synthesize rich epistemic signals across different long-form responses. We introduce Consensus Graphs (ConGrs), a flexible DAG-based data structure that represents shared information, as well as semantic variation in a set of sampled LM responses to the same prompt. We construct ConGrs using a light-weight lexical sequence alignment algorithm from bioinformatics, supplemented by the targeted usage of a secondary LM judge. Further, we design task-dependent decoding methods to synthesize a single, final response from our ConGr data structure. Our experiments show that synthesizing responses from ConGrs improves factual precision on two biography generation tasks by up to 31% over an average response and reduces reliance on LM judges by more than 80% compared to other methods. We also use ConGrs for three refusal-based tasks requiring abstention on unanswerable queries and find that abstention rate is increased by up to 56%. We apply our approach to the MATH and AIME reasoning tasks and find an improvement over self-verification and majority vote baselines by up to 6 points of accuracy. We show that ConGrs provide a flexible method for capturing variation in LM responses and using the epistemic signals provided by response variation to synthesize more effective responses.
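ConGrs are built with a lexical sequence-alignment algorithm from bioinformatics. As a hedged illustration of that first step only, here is the classic Needleman-Wunsch global alignment applied to the words of two sampled responses: positions where the responses agree become shared nodes, and disagreements become variant branches. The scoring values are assumptions, and the real method merges many responses into a DAG and consults an LM judge, which this sketch does not attempt.

```python
def align(a, b, match=1, mismatch=-1, gap=-1):
    """Needleman-Wunsch global alignment of two token lists; None marks a gap."""
    n, m = len(a), len(b)
    score = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        score[i][0] = i * gap
    for j in range(1, m + 1):
        score[0][j] = j * gap

    def sub(x, y):
        return match if x == y else mismatch

    for i in range(1, n + 1):
        for j in range(1, m + 1):
            score[i][j] = max(score[i - 1][j - 1] + sub(a[i - 1], b[j - 1]),
                              score[i - 1][j] + gap,
                              score[i][j - 1] + gap)
    # Trace back from the bottom-right cell to recover one optimal alignment.
    i, j, pairs = n, m, []
    while i > 0 or j > 0:
        if i > 0 and j > 0 and score[i][j] == score[i - 1][j - 1] + sub(a[i - 1], b[j - 1]):
            pairs.append((a[i - 1], b[j - 1]))
            i, j = i - 1, j - 1
        elif i > 0 and score[i][j] == score[i - 1][j] + gap:
            pairs.append((a[i - 1], None))
            i -= 1
        else:
            pairs.append((None, b[j - 1]))
            j -= 1
    return pairs[::-1]

def consensus_spans(a, b):
    """Label each aligned position as shared (both responses agree) or variant."""
    return [("shared", x) if x == y else ("variant", (x, y)) for x, y in align(a, b)]

r1 = "Ada Lovelace was born in 1815 in London".split()
r2 = "Ada Lovelace was born in 1816 in London".split()
print([v for kind, v in consensus_spans(r1, r2) if kind == "variant"])  # [('1815', '1816')]
```

A variant position like the birth year is exactly the kind of epistemic signal the abstract describes: disagreement across samples flags a claim that may deserve hedging or abstention.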

Fine-Tuning on Noisy Instructions: Effects on Generalization and Performance

arXiv:2510.03528v1 Announce Type: new Abstract: Instruction-tuning plays a vital role in enhancing the task-solving abilities of large language models (LLMs), improving their usability in generating helpful responses on various tasks. However, previous work has shown that LLMs are sensitive to minor variations in instruction phrasing. In this paper, we explore whether introducing perturbations in instruction-tuning data can enhance LLMs' resistance against noisy instructions. We focus on how instruction-tuning with perturbations, such as removing stop words or shuffling words, affects LLMs' performance on the original and perturbed versions of widely-used benchmarks (MMLU, BBH, GSM8K). We further assess learning dynamics and potential shifts in model behavior. Surprisingly, our results suggest that instruction-tuning on perturbed instructions can, in some cases, improve downstream performance. These findings highlight the importance of including perturbed instructions in instruction-tuning, which can make LLMs more resilient to noisy user inputs.
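The two perturbations named in the abstract are easy to reproduce. This sketch applies them to a fraction of instruction-tuning pairs while leaving the target responses untouched; the stop-word list, the perturbation rate, and the 50/50 choice between operations are illustrative assumptions rather than the paper's settings.

```python
import random

# Small illustrative stop-word list (an assumption; real pipelines use a fuller list).
STOP_WORDS = {"a", "an", "the", "of", "to", "in", "and", "is", "for", "on", "with"}

def remove_stop_words(instruction):
    return " ".join(w for w in instruction.split() if w.lower() not in STOP_WORDS)

def shuffle_words(instruction, seed=0):
    words = instruction.split()
    random.Random(seed).shuffle(words)
    return " ".join(words)

def perturb_dataset(examples, rate=0.5, seed=0):
    """Perturb the instruction in roughly `rate` of (instruction, response) pairs."""
    rng = random.Random(seed)
    out = []
    for instruction, response in examples:
        if rng.random() < rate:
            if rng.random() < 0.5:
                instruction = remove_stop_words(instruction)
            else:
                instruction = shuffle_words(instruction, seed=rng.randrange(2**32))
        out.append((instruction, response))  # responses are never perturbed
    return out

print(remove_stop_words("Summarize the main idea of the passage"))  # Summarize main idea passage
```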

TriMediQ: A Triplet-Structured Approach for Interactive Medical Question Answering

arXiv:2510.03536v1 Announce Type: new Abstract: Large Language Models (LLMs) perform strongly in static and single-turn medical question answering (QA) benchmarks, yet such settings diverge from the iterative information gathering process required in practical clinical consultations. The MEDIQ framework addresses this mismatch by recasting the diagnosis as an interactive dialogue between a patient and an expert system, but the reliability of LLMs drops dramatically when forced to reason with dialogue logs, where clinical facts appear in sentences without clear links. To bridge this gap, we introduce TriMediQ, a triplet-structured approach that summarises patient responses into triplets and integrates them into a Knowledge Graph (KG), enabling multi-hop reasoning. We introduce a frozen triplet generator that extracts clinically relevant triplets, using prompts designed to ensure factual consistency. In parallel, a trainable projection module, comprising a graph encoder and a projector, captures relational information from the KG to enhance expert reasoning. TriMediQ operates in two steps: (i) fine-tuning the projection module with all LLM weights frozen; and (ii) using the fine-tuned module to guide multi-hop reasoning during inference. We evaluate TriMediQ on two interactive QA benchmarks, showing that it achieves up to 10.4% improvement in accuracy over five baselines on the iMedQA dataset. These results demonstrate that converting patient responses into structured triplet-based graphs enables more accurate clinical reasoning in multi-turn settings, providing a solution for the deployment of LLM-based medical assistants.
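TriMediQ's learned components (the frozen triplet generator, graph encoder, and projector) cannot be reconstructed from the abstract. The sketch below shows only the symbolic idea they build on: triplets extracted from separate patient replies are merged into one knowledge graph, and breadth-first multi-hop traversal links facts that never appeared in the same sentence. The triplets and relation names are hypothetical.

```python
from collections import defaultdict, deque

def build_kg(triplets):
    """Adjacency list: head -> [(relation, tail), ...]."""
    graph = defaultdict(list)
    for head, relation, tail in triplets:
        graph[head].append((relation, tail))
    return graph

def multi_hop(graph, start, max_hops=2):
    """Breadth-first search returning every path (list of triplets) of up to max_hops edges."""
    seen = {start}
    queue = deque([(start, [])])
    paths = []
    while queue:
        node, path = queue.popleft()
        if len(path) == max_hops:
            continue
        for relation, tail in graph.get(node, []):
            if tail not in seen:
                seen.add(tail)
                new_path = path + [(node, relation, tail)]
                paths.append(new_path)
                queue.append((tail, new_path))
    return paths

# Hypothetical triplets, each extracted from a different dialogue turn.
kg = build_kg([
    ("patient", "reports", "chest pain"),
    ("chest pain", "triggered_by", "exertion"),
    ("patient", "takes", "metformin"),
    ("metformin", "treats", "type 2 diabetes"),
])
for path in multi_hop(kg, "patient"):
    print(" -> ".join(f"{h} [{r}] {t}" for h, r, t in path))
```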

What is a protest anyway? Codebook conceptualization is still a first-order concern in LLM-era classification

arXiv:2510.03541v1 Announce Type: new Abstract: Generative large language models (LLMs) are now used extensively for text classification in computational social science (CSS). In this work, we focus on the steps before and after LLM prompting -- conceptualization of the concepts to be classified and use of LLM predictions in downstream statistical inference -- which we argue have been overlooked in much of LLM-era CSS. We claim LLMs can tempt analysts to skip the conceptualization step, creating conceptualization errors that bias downstream estimates. Using simulations, we show that this conceptualization-induced bias cannot be corrected for solely by increasing LLM accuracy or post-hoc bias correction methods. We conclude by reminding CSS analysts that conceptualization is still a first-order concern in the LLM era and provide concrete advice on how to pursue low-cost, unbiased, low-variance downstream estimates.
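A toy simulation makes the argument concrete. Suppose the analyst's codebook folds every public gathering into "protest". Then even an LLM that matches that codebook perfectly (accuracy 1.0) estimates the wrong prevalence, because the error sits in the concept, not the classifier. The prevalences are invented for illustration, and this is not the authors' simulation design.

```python
import random

def simulate(n=100_000, p_protest=0.20, p_other=0.15, accuracy=1.0, seed=0):
    """Return (true protest rate, estimated rate) under a mis-specified codebook."""
    rng = random.Random(seed)
    true_hits = est_hits = 0
    for _ in range(n):
        u = rng.random()
        is_protest = u < p_protest
        is_other_gathering = p_protest <= u < p_protest + p_other  # not a protest
        codebook_label = is_protest or is_other_gathering            # what the LLM was asked to find
        # The LLM agrees with the (flawed) codebook label with probability `accuracy`.
        pred = codebook_label if rng.random() < accuracy else not codebook_label
        true_hits += is_protest
        est_hits += pred
    return true_hits / n, est_hits / n

true_rate, est_rate = simulate()
print(f"true {true_rate:.3f} vs estimated {est_rate:.3f}")  # about 0.20 vs 0.35
```

Raising accuracy cannot close the gap, since accuracy here is measured against the flawed codebook itself.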

CCD-Bench: Probing Cultural Conflict in Large Language Model Decision-Making

arXiv:2510.03553v1 Announce Type: new Abstract: Although large language models (LLMs) are increasingly implicated in interpersonal and societal decision-making, their ability to navigate explicit conflicts between legitimately different cultural value systems remains largely unexamined. Existing benchmarks predominantly target cultural knowledge (CulturalBench), value prediction (WorldValuesBench), or single-axis bias diagnostics (CDEval); none evaluate how LLMs adjudicate when multiple culturally grounded values directly clash. We address this gap with CCD-Bench, a benchmark that assesses LLM decision-making under cross-cultural value conflict. CCD-Bench comprises 2,182 open-ended dilemmas spanning seven domains, each paired with ten anonymized response options corresponding to the ten GLOBE cultural clusters. These dilemmas are presented using a stratified Latin square to mitigate ordering effects. We evaluate 17 non-reasoning LLMs. Models disproportionately prefer Nordic Europe (mean 20.2 percent) and Germanic Europe (12.4 percent), while options for Eastern Europe and the Middle East and North Africa are underrepresented (5.6 to 5.8 percent). Although 87.9 percent of rationales reference multiple GLOBE dimensions, this pluralism is superficial: models recombine Future Orientation and Performance Orientation, and rarely ground choices in Assertiveness or Gender Egalitarianism (both under 3 percent). Ordering effects are negligible (Cramér's V less than 0.10), and symmetrized KL divergence shows clustering by developer lineage rather than geography. These patterns suggest that current alignment pipelines promote a consensus-oriented worldview that underserves scenarios demanding power negotiation, rights-based reasoning, or gender-aware analysis. CCD-Bench shifts evaluation beyond isolated bias detection toward pluralistic decision-making and highlights the need for alignment strategies that substantively engage diverse worldviews.
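Two of the tools named above can be sketched in a few lines. The abstract does not say how its Latin square is stratified, so a plain cyclic 10×10 Latin square stands in for it: each row is one presentation order, and every option appears once in every position across rows. The divergence is the sum of KL divergences in both directions (some authors halve it); the choice distributions in the usage lines are made up.

```python
import math

def latin_square(k):
    """Cyclic k x k Latin square: row r is a presentation order of options 0..k-1."""
    return [[(r + c) % k for c in range(k)] for r in range(k)]

def symmetrized_kl(p, q, eps=1e-12):
    """KL(p || q) + KL(q || p) for two discrete choice distributions (natural log)."""
    p = [x + eps for x in p]
    q = [x + eps for x in q]
    sp, sq = sum(p), sum(q)
    p = [x / sp for x in p]
    q = [x / sq for x in q]
    return sum(pi * math.log(pi / qi) + qi * math.log(qi / pi) for pi, qi in zip(p, q))

orders = latin_square(10)  # one row per dilemma presentation, ten GLOBE-cluster options
model_a = [0.5, 0.3, 0.2]  # hypothetical choice shares over three clusters
model_b = [0.2, 0.3, 0.5]
print(orders[1], round(symmetrized_kl(model_a, model_b), 4))
```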

Reactive Transformer (RxT) -- Stateful Real-Time Processing for Event-Driven Reactive Language Models

arXiv:2510.03561v1 Announce Type: new Abstract: The Transformer architecture has become the de facto standard for Large Language Models (LLMs), demonstrating remarkable capabilities in language understanding and generation. However, its application in conversational AI is fundamentally constrained by its stateless nature and the quadratic computational complexity (O(L²)) with respect to sequence length L. Current models emulate memory by reprocessing an ever-expanding conversation history with each turn, leading to prohibitive costs and latency in long dialogues. This paper introduces the Reactive Transformer (RxT), a novel architecture designed to overcome these limitations by shifting from a data-driven to an event-driven paradigm. RxT processes each conversational turn as a discrete event in real-time, maintaining context in an integrated, fixed-size Short-Term Memory (STM) system. The architecture features a distinct operational cycle where a generator-decoder produces a response based on the current query and the previous memory state, after which a memory-encoder and a dedicated Memory Attention network asynchronously update the STM with a representation of the complete interaction. This design fundamentally alters the scaling dynamics, reducing the total user-facing cost of a conversation from quadratic (O(N²·T)) to linear (O(N·T)) with respect to the number of interactions N. By decoupling response generation from memory updates, RxT achieves low latency, enabling truly real-time, stateful, and economically viable long-form conversations. We validated our architecture with a series of proof-of-concept experiments on synthetic data, demonstrating superior performance and constant-time inference latency compared to a baseline stateless model of comparable size.
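The scaling claim can be checked with simple arithmetic. Assume each interaction has T tokens. A stateless model reprocesses all k interactions so far at turn k, for a total of T·N(N+1)/2 tokens, while RxT processes only the current interaction plus a fixed-size memory. Counting the memory as a fixed token-equivalent cost per turn (512 here) is an assumption for illustration, and the count ignores attention cost inside each turn.

```python
def stateless_tokens(n_turns, tokens_per_turn):
    """Turn k reprocesses the whole history of k interactions: O(N^2 * T) in total."""
    return sum(k * tokens_per_turn for k in range(1, n_turns + 1))

def reactive_tokens(n_turns, tokens_per_turn, memory_slots=512):
    """Each turn sees the current interaction plus a fixed-size memory: O(N * T) in total."""
    return n_turns * (tokens_per_turn + memory_slots)

for n in (10, 100, 1000):
    print(n, stateless_tokens(n, 200), reactive_tokens(n, 200))
# At N = 100, T = 200: 1,010,000 tokens (stateless) vs 71,200 (reactive).
```

Multiplying N by 10 multiplies the stateless total by roughly 100 but the reactive total by exactly 10, which is the quadratic-versus-linear gap the abstract describes.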

LLM, Reporting In! Medical Information Extraction Across Prompting, Fine-tuning and Post-correction

arXiv:2510.03577v1 Announce Type: new Abstract: This work presents our participation in the EvalLLM 2025 challenge on biomedical Named Entity Recognition (NER) and health event extraction in French (few-shot setting). For NER, we propose three approaches combining large language models (LLMs), annotation guidelines, synthetic data, and post-processing: (1) in-context learning (ICL) with GPT-4.1, incorporating automatic selection of 10 examples and a summary of the annotation guidelines into the prompt, (2) the universal NER system GLiNER, fine-tuned on a synthetic corpus and then verified by an LLM in post-processing, and (3) the open LLM LLaMA-3.1-8B-Instruct, fine-tuned on the same synthetic corpus. Event extraction uses the same ICL strategy with GPT-4.1, reusing the guideline summary in the prompt. Results show GPT-4.1 leads with a macro-F1 of 61.53% for NER and 15.02% for event extraction, highlighting the importance of well-crafted prompting to maximize performance in very low-resource scenarios.

Decoupling Task-Solving and Output Formatting in LLM Generation

arXiv:2510.03595v1 Announce Type: new Abstract: Large language models (LLMs) are increasingly adept at following instructions containing task descriptions to solve complex problems, such as mathematical reasoning and automatic evaluation (LLM-as-a-Judge). However, as prompts grow more complex, models often struggle to adhere to all instructions. This difficulty is especially common when instructive prompts intertwine reasoning directives -- specifying what the model should solve -- with rigid formatting requirements that dictate how the solution must be presented. The entanglement creates competing goals for the model, suggesting that more explicit separation of these two aspects could lead to improved performance. To this end, we introduce Deco-G, a decoding framework that explicitly decouples format adherence from task solving. Deco-G handles format compliance with a separate tractable probabilistic model (TPM), while prompting the LLM with task instructions only. At each decoding step, Deco-G combines next-token probabilities from the LLM with the format-compliance likelihood computed by the TPM to form the output probability. To make this approach both practical and scalable for modern instruction-tuned LLMs, we introduce three key innovations: instruction-aware distillation, a flexible trie-building algorithm, and HMM state pruning for computational efficiency. We demonstrate the effectiveness of Deco-G across a wide range of tasks with diverse format requirements, including mathematical reasoning, LLM-as-a-judge, and event argument extraction. Overall, our approach yields 1.0% to 6.0% relative gain over regular prompting practice with guaranteed format compliance.
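The per-step combination can be sketched as a product of two distributions followed by renormalization: p(x) ∝ p_LLM(x) · p_format(x). In Deco-G the second factor comes from a distilled HMM-based TPM; here it is a hand-written vector standing in for that model, and the vocabulary and probabilities are hypothetical.

```python
import numpy as np

def combine_step(llm_probs, format_likelihood):
    """One decoding step: renormalized product of LLM and format-compliance scores."""
    scores = np.asarray(llm_probs, dtype=float) * np.asarray(format_likelihood, dtype=float)
    total = scores.sum()
    if total == 0:
        raise ValueError("no token is compatible with the required format")
    return scores / total

vocab = ["The", "answer", "is", "42", "<eos>"]
llm_probs = [0.4, 0.1, 0.1, 0.3, 0.1]   # the task model alone prefers to keep talking
format_lik = [0.0, 0.0, 0.0, 0.9, 0.1]  # stand-in TPM: only a number can come next
probs = combine_step(llm_probs, format_lik)
print(vocab[int(np.argmax(probs))], probs.round(3))
```

Tokens the format model deems impossible get zero probability, which is how format compliance can be guaranteed while the prompt carries only task instructions.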

Can an LLM Induce a Graph? Investigating Memory Drift and Context Length

arXiv:2510.03611v1 Announce Type: new Abstract: Recently proposed evaluation benchmarks aim to characterize the effective context length and the forgetting tendencies of large language models (LLMs). However, these benchmarks often rely on simplistic 'needle in a haystack' retrieval or continuation tasks that may not accurately reflect the performance of these models in information-dense scenarios. Thus, rather than simple next-token prediction, we argue for evaluating these models on more complex reasoning tasks that require them to induce structured relational knowledge from the text - such as graphs from potentially noisy natural language content. While the input text can be viewed as generated in terms of a graph, its structure is not made explicit and connections must be induced from distributed textual cues, separated by long contexts and interspersed with irrelevant information. Our findings reveal that LLMs begin to exhibit memory drift and contextual forgetting at much shorter effective lengths when tasked with this form of relational reasoning, compared to what existing benchmarks suggest. With these findings, we offer recommendations for the optimal use of popular LLMs for complex reasoning tasks. We further show that even models specialized for reasoning, such as OpenAI o1, remain vulnerable to early memory drift in these settings. These results point to significant limitations in the models' ability to abstract structured knowledge from unstructured input and highlight the need for architectural adaptations to improve long-range reasoning.
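One natural way to score graph induction is edge-level precision, recall, and F1 between the graph the model outputs and the gold graph; memory drift then shows up as recall falling when the cues for an edge sit far apart in the context. This metric is a hedged illustration, not necessarily the paper's exact scoring, and the example graphs are made up.

```python
def edge_scores(predicted, gold, directed=True):
    """Precision, recall, and F1 over edge sets; undirected mode ignores edge direction."""
    def norm(edge):
        return edge if directed else tuple(sorted(edge))

    pred = {norm(e) for e in predicted}
    ref = {norm(e) for e in gold}
    tp = len(pred & ref)
    precision = tp / len(pred) if pred else 0.0
    recall = tp / len(ref) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if tp else 0.0
    return precision, recall, f1

gold = {("A", "B"), ("B", "C"), ("C", "D"), ("D", "E")}
predicted = {("A", "B"), ("B", "C"), ("C", "E")}  # the model drifted on the last two links
print(edge_scores(predicted, gold))  # precision 2/3, recall 0.5, F1 4/7
```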

Towards Unsupervised Speech Recognition at the Syllable-Level

arXiv:2510.03639v1 Announce Type: new Abstract: Training speech recognizers with unpaired speech and text -- known as unsupervised speech recognition (UASR) -- is a crucial step toward extending ASR to low-resource languages in the long-tail distribution and enabling multimodal learning from non-parallel data. However, existing approaches based on phones often rely on costly resources such as grapheme-to-phoneme converters (G2Ps) and struggle to generalize to languages with ambiguous phoneme boundaries due to training instability. In this paper, we address both challenges by introducing a syllable-level UASR framework based on masked language modeling, which avoids the need for G2P and the instability of GAN-based methods. Our approach achieves up to a 40% relative reduction in character error rate (CER) on LibriSpeech and generalizes effectively to Mandarin, a language that has remained particularly difficult for prior methods. Code will be released upon acceptance.
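Character error rate is the Levenshtein edit distance between reference and hypothesis characters divided by the reference length, and the 40% figure is a relative reduction. For example, a CER falling from 30% to 18% would be a 40% relative reduction; those numbers are illustrative, not the paper's.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance (substitutions, insertions, deletions), one row at a time."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        cur = [i]
        for j, h in enumerate(hyp, 1):
            cur.append(min(prev[j] + 1,               # deletion
                           cur[j - 1] + 1,            # insertion
                           prev[j - 1] + (r != h)))   # substitution or match
        prev = cur
    return prev[-1]

def cer(ref, hyp):
    return edit_distance(ref, hyp) / len(ref)

def relative_reduction(baseline, new):
    return (baseline - new) / baseline

print(cer("今天天气很好", "今天天汽很好"))  # one substituted character out of six
print(relative_reduction(0.30, 0.18))       # about 0.4, i.e. a 40% relative reduction
```

Working on characters rather than words is what makes CER usable for Mandarin, where there are no spaces to delimit words.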

UNIDOC-BENCH: A Unified Benchmark for Document-Centric Multimodal RAG

arXiv:2510.03663v1 Announce Type: new Abstract: Multimodal retrieval-augmented generation (MM-RAG) is a key approach for applying large language models (LLMs) and agents to real-world knowledge bases, yet current evaluations are fragmented, focusing on either text or images in isolation or on simplified multimodal setups that fail to capture document-centric multimodal use cases. In this paper, we introduce UniDoc-Bench, the first large-scale, realistic benchmark for MM-RAG built from 70k real-world PDF pages across eight domains. Our pipeline extracts and links evidence from text, tables, and figures, then generates 1,600 multimodal QA pairs spanning factual retrieval, comparison, summarization, and logical reasoning queries. To ensure reliability, 20% of QA pairs are validated by multiple annotators and expert adjudication. UniDoc-Bench supports apples-to-apples comparison across four paradigms: (1) text-only, (2) image-only, (3) multimodal text-image fusion, and (4) multimodal joint retrieval -- under a unified protocol with standardized candidate pools, prompts, and evaluation metrics. Our experiments show that multimodal text-image fusion RAG systems consistently outperform both unimodal and jointly multimodal embedding-based retrieval, indicating that neither text nor images alone are sufficient and that current multimodal embeddings remain inadequate. Beyond benchmarking, our analysis reveals when and how visual context complements textual evidence, uncovers systematic failure modes, and offers actionable guidance for developing more robust MM-RAG pipelines.

Fine-Tuning Large Language Models with QLoRA for Offensive Language Detection in Roman Urdu-English Code-Mixed Text

arXiv:2510.03683v1 Announce Type: new Abstract: The use of derogatory terms in languages that employ code-mixing, such as Roman Urdu, presents challenges for Natural Language Processing systems due to unstated grammar, inconsistent spelling, and a scarcity of labeled data. In this work, we propose a QLoRA-based fine-tuning framework to improve offensive language detection in Roman Urdu-English text. We translated the Roman Urdu-English code-mixed dataset into English using Google Translate to leverage English LLMs, while acknowledging that this translation reduces direct engagement with code-mixing features. Our focus is on classification performance using English-translated low-resource inputs. We fine-tuned several transformers and large language models, including Meta LLaMA 3 8B, Mistral 7B v0.1, LLaMA 2 7B, ModernBERT, and RoBERTa, with QLoRA for memory-efficient adaptation. Models were trained and evaluated on a manually annotated Roman Urdu dataset for offensive vs. non-offensive content. Of all tested models, the highest F1 score of 91.45 was attained by Meta LLaMA 3 8B, followed by Mistral 7B at 89.66, surpassing traditional transformer baselines. These results demonstrate the efficacy of QLoRA in fine-tuning high-performing models for low-resource environments such as code-mixed offensive language detection, and confirm the potential of LLMs for this task. This work advances a scalable approach to Roman Urdu moderation and paves the way for future multilingual offensive language detection systems based on LLMs.
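At the core of QLoRA is LoRA. The pretrained weight W stays frozen (in QLoRA it is additionally stored in 4-bit NF4), and only a low-rank update (alpha / r)·B·A is trained. The NumPy sketch below shows that update and the parameter savings. 4-bit quantization is omitted, and the rank, scale, and layer size are illustrative choices, not the paper's settings.

```python
import numpy as np

class LoRALinear:
    """y = x W^T + (alpha / r) * x A^T B^T, with W frozen and only A, B trainable."""

    def __init__(self, weight, r=8, alpha=16, seed=0):
        rng = np.random.default_rng(seed)
        d_out, d_in = weight.shape
        self.weight = weight                            # frozen (4-bit in QLoRA; full precision here)
        self.A = rng.normal(0.0, 0.01, size=(r, d_in))  # trainable down-projection
        self.B = np.zeros((d_out, r))                   # trainable up-projection, zero-initialized
        self.scale = alpha / r

    def __call__(self, x):
        return x @ self.weight.T + self.scale * (x @ self.A.T) @ self.B.T

    def trainable_params(self):
        return self.A.size + self.B.size

d = 1024
rng = np.random.default_rng(1)
layer = LoRALinear(rng.normal(size=(d, d)), r=8)
x = rng.normal(size=(2, d))
print(layer.trainable_params(), "trainable vs", layer.weight.size, "frozen")  # 16384 vs 1048576
```

Because B starts at zero, the adapted layer initially reproduces the frozen layer exactly, so fine-tuning begins from the pretrained model's behavior while updating under 2% of this layer's parameters.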

MedReflect: Teaching Medical LLMs to Self-Improve via Reflective Correction

arXiv:2510.03687v1 Announce Type: new Abstract: Medical problem solving demands expert knowledge and intricate reasoning. Recent studies of large language models (LLMs) attempt to ease this complexity by introducing external knowledge verification through retrieval-augmented generation or by training on reasoning datasets. However, these approaches suffer from drawbacks such as retrieval overhead and high annotation costs, and they rely heavily on external assistants while still reaching only limited performance in the medical field. In this paper, we introduce MedReflect, a generalizable framework designed to instill a physician-like reflective thinking mode in LLMs. MedReflect generates a single-pass reflection chain that includes initial hypothesis generation, self-questioning, self-answering and decision refinement. This self-verifying and self-reflective process unlocks LLMs' latent capability in medical problem-solving without external retrieval or heavy annotation. We demonstrate that MedReflect enables cost-efficient medical dataset construction: with merely 2,000 randomly sampled training examples and light fine-tuning, this approach achieves notable absolute accuracy improvements across a series of medical benchmarks while cutting annotation requirements. Our results provide evidence that LLMs can learn to solve specialized medical problems via self-reflection and self-improvement, reducing reliance on external supervision and extensive task-specific fine-tuning data.

TreePrompt: Leveraging Hierarchical Few-Shot Example Selection for Improved English-Persian and English-German Translation

arXiv:2510.03748v1 Announce Type: new Abstract: Large Language Models (LLMs) have consistently demonstrated strong performance in machine translation, especially when guided by high-quality prompts. Few-shot prompting is an effective technique to improve translation quality; however, most existing example selection methods focus solely on query-to-example similarity and do not account for the quality of the examples. In this work, we propose TreePrompt, a novel example selection approach that learns LLM preferences to identify high-quality, contextually relevant examples within a tree-structured framework. To further explore the balance between similarity and quality, we combine TreePrompt with K-Nearest Neighbors (K-NN) and Adaptive Few-Shot Prompting (AFSP). Evaluations on two language pairs - English-Persian (MIZAN) and English-German (WMT19) - show that integrating TreePrompt with AFSP or Random selection leads to improved translation performance.

Cross-Lingual Multi-Granularity Framework for Interpretable Parkinson's Disease Diagnosis from Speech

arXiv:2510.03758v1 Announce Type: new Abstract: Parkinson's Disease (PD) affects over 10 million people worldwide, with speech impairments in up to 89% of patients. Current speech-based detection systems analyze entire utterances, potentially overlooking the diagnostic value of specific phonetic elements. We developed a granularity-aware approach for multilingual PD detection using an automated pipeline that extracts time-aligned phonemes, syllables, and words from recordings. Using Italian, Spanish, and English datasets, we implemented a bidirectional LSTM with multi-head attention to compare diagnostic performance across the different granularity levels. Phoneme-level analysis achieved superior performance with an AUROC of 93.78% ± 2.34% and accuracy of 92.17% ± 2.43%. This demonstrates enhanced diagnostic capability for cross-linguistic PD detection. Importantly, attention analysis revealed that the most informative speech features align with those used in established clinical protocols: sustained vowels (/a/, /e/, /o/, /i/) at phoneme level, diadochokinetic syllables (/ta/, /pa/, /la/, /ka/) at syllable level, and /pataka/ sequences at word level. Source code will be available at https://github.com/jetliqs/clearpd.
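The headline AUROC has a simple reading: it is the probability that a randomly chosen positive (PD) recording receives a higher score than a randomly chosen negative (healthy) one, with ties counting half. A minimal Python sketch of that pairwise definition follows; the scores and labels are made-up values, not data from the paper.

```python
from typing import Sequence


def auroc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUROC as P(score of random positive > score of random negative); ties count 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical classifier scores: 1 = PD, 0 = healthy control.
print(auroc([0.9, 0.2, 0.5, 0.1], [1, 1, 0, 0]))
```

The pairwise loop is O(n·m), which is fine for small evaluation sets; a rank-based (Mann-Whitney U) computation gives the same value faster on large ones.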

Prompt Balance Matters: Understanding How Imbalanced Few-Shot Learning Affects Multilingual Sense Disambiguation in LLMs

arXiv:2510.03762v1 Announce Type: new Abstract: Recent advances in Large Language Models (LLMs) have significantly reshaped the landscape of Natural Language Processing (NLP). Among the various prompting techniques, few-shot prompting has gained considerable attention for its practicality and effectiveness. This study investigates how few-shot prompting strategies impact the Word Sense Disambiguation (WSD) task, particularly focusing on the biases introduced by imbalanced sample distributions. We use the GLOSSGPT prompting method, an advanced approach for English WSD, to test its effectiveness across five languages: English, German, Spanish, French, and Italian. Our results show that imbalanced few-shot examples can cause incorrect sense predictions in the non-English languages, but this issue does not appear in English. To assess model behavior, we evaluate both GPT-4o and LLaMA-3.1-70B; the results highlight the sensitivity of multilingual WSD to sample distribution in few-shot settings, emphasizing the need for balanced and representative prompting strategies.
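One practical reading of the call for balanced prompting is to draw the same number of demonstrations for every sense of the target word rather than sampling in proportion to how often each sense appears. The Python sketch below does this for the German word "Bank" (financial institution vs. bench); the `balanced_shots` helper, the example sentences, and the per-sense quota are illustrative assumptions, not the GLOSSGPT procedure.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Example = Tuple[str, str]  # (sentence, sense label)


def balanced_shots(examples: List[Example], per_sense: int, seed: int = 0) -> List[Example]:
    """Take up to `per_sense` examples of every sense, regardless of how skewed the pool is."""
    by_sense: Dict[str, List[Example]] = defaultdict(list)
    for ex in examples:
        by_sense[ex[1]].append(ex)
    rng = random.Random(seed)  # seeded for reproducible prompts
    shots: List[Example] = []
    for sense in sorted(by_sense):
        group = by_sense[sense]
        shots.extend(rng.sample(group, min(per_sense, len(group))))
    rng.shuffle(shots)  # interleave senses so none is clustered at the start or end of the prompt
    return shots


# A skewed pool: four financial uses, one bench use.
pool = [
    ("Ich bringe das Geld zur Bank.", "financial institution"),
    ("Die Bank hat die Zinsen erhöht.", "financial institution"),
    ("Sie arbeitet bei einer Bank in Frankfurt.", "financial institution"),
    ("Die Bank gewährt mir einen Kredit.", "financial institution"),
    ("Wir sitzen auf der Bank im Park.", "bench"),
]
print(balanced_shots(pool, per_sense=1))
```

Sampling proportionally from this pool would usually show the model only financial uses; the per-sense quota guarantees the minority sense is represented.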

Rezwan: Leveraging Large Language Models for Comprehensive Hadith Text Processing: A 1.2M Corpus Development

arXiv:2510.03781v1 Announce Type: new Abstract: This paper presents the development of Rezwan, a large-scale AI-assisted Hadith corpus comprising over 1.2M narrations, extracted and structured through a fully automated pipeline. Building on digital repositories such as Maktabat Ahl al-Bayt, the pipeline employs Large Language Models (LLMs) for segmentation, chain-text separation, validation, and multi-layer enrichment. Each narration is enhanced with machine translation into twelve languages, intelligent diacritization, abstractive summarization, thematic tagging, and cross-text semantic analysis. This multi-step process transforms raw text into a richly annotated, research-ready infrastructure for digital humanities and Islamic studies. A rigorous evaluation was conducted on 1,213 randomly sampled narrations, assessed by six domain experts. Results show near-human accuracy in structured tasks such as chain-text separation (9.33/10) and summarization (9.33/10), while highlighting ongoing challenges in diacritization and semantic similarity detection. Comparative analysis against the manually curated Noor Corpus demonstrates the superiority of Rezwan in both scale and quality, with a mean overall score of 8.46/10 versus 3.66/10. Furthermore, cost analysis confirms the economic feasibility of the AI approach: tasks requiring over 229,000 hours of expert labor were completed within months at a fraction of the cost. The work introduces a new paradigm in religious text processing by showing how AI can augment human expertise, enabling large-scale, multilingual, and semantically enriched access to Islamic heritage.

Mechanistic Interpretability of Socio-Political Frames in Language Models

arXiv:2510.03799v1 Announce Type: new Abstract: This paper explores the ability of large language models to generate and recognize deep cognitive frames, particularly in socio-political contexts. We demonstrate that LLMs are highly fluent in generating texts that evoke specific frames and can recognize these frames in zero-shot settings. Inspired by mechanistic interpretability research, we investigate the location of the 'strict father' and 'nurturing parent' frames within the model's hidden representation, identifying singular dimensions that correlate strongly with their presence. Our findings contribute to understanding how LLMs capture and express meaningful human concepts.
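A simple way to look for such a dimension is to correlate each hidden unit with a binary frame label across a set of texts and keep the unit with the strongest correlation. The Python sketch below does exactly that on toy three-dimensional "hidden states"; the numbers are invented, and real activations would come from the model, so treat this as an illustration of the idea rather than the paper's exact procedure.

```python
import math
from typing import List, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; returns 0.0 if either series is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx and sy else 0.0


def most_correlated_dimension(hidden: List[List[float]], labels: List[int]) -> Tuple[int, float]:
    """hidden[i][d] is dimension d of text i; labels[i] is 1 if the frame is present in text i."""
    n_dims = len(hidden[0])
    scores = [pearson([h[d] for h in hidden], labels) for d in range(n_dims)]
    best = max(range(n_dims), key=lambda d: abs(scores[d]))  # strongest in either direction
    return best, scores[best]


# Toy hidden states for four texts; the first two evoke the frame.
hidden = [[0.1, 2.0, 0.3], [0.2, 1.8, -0.1], [0.1, -1.5, 0.2], [0.3, -2.1, 0.0]]
print(most_correlated_dimension(hidden, [1, 1, 0, 0]))
```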

Beyond Token Length: Step Pruner for Efficient and Accurate Reasoning in Large Language Models

arXiv:2510.03805v1 Announce Type: new Abstract: Large Reasoning Models (LRMs) demonstrate strong performance on complex tasks but often suffer from excessive verbosity, known as "overthinking." Existing reinforcement learning (RL) solutions typically penalize generated tokens to promote conciseness. However, these methods encounter two challenges: responses with fewer tokens do not always correspond to fewer reasoning steps, and models may develop hacking behavior in later stages of training by discarding reasoning steps to minimize token usage. In this work, we introduce Step Pruner (SP), an RL framework that steers LRMs toward more efficient reasoning by favoring compact reasoning steps. Our step-aware reward function prioritizes correctness while imposing penalties for redundant steps, and withholds rewards for incorrect responses to prevent the reinforcement of erroneous reasoning. Moreover, we propose a dynamic stopping mechanism: when the length of any output step exceeds the upper limit, we halt updates to prevent hacking behavior caused by merging steps. Extensive experiments across four reasoning benchmarks demonstrate that SP achieves state-of-the-art accuracy while significantly reducing response length. For instance, on AIME24, SP reduces token usage by 69.7%.
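The abstract describes the reward only qualitatively, so the Python sketch below fills in a hypothetical version: incorrect answers earn nothing, correct answers earn a base reward minus a small per-step penalty (floored so any correct answer still beats an incorrect one), and a separate check flags rollouts in which any single step exceeds a length limit, the condition under which the paper halts updates. Using the raw step count as a stand-in for "redundant steps", the penalty weight `alpha`, the `floor`, and the whitespace-based token count are all assumptions, not values from the paper.

```python
from typing import List


def step_reward(correct: bool, steps: List[str], alpha: float = 0.05, floor: float = 0.1) -> float:
    """Hypothetical step-aware reward (alpha and floor are assumed values).

    Incorrect responses get no reward, so erroneous reasoning is never reinforced.
    Correct responses lose `alpha` per step but never drop below `floor`,
    so correctness always outranks brevity.
    """
    if not correct:
        return 0.0
    return max(1.0 - alpha * len(steps), floor)


def should_halt_updates(steps: List[str], max_step_tokens: int) -> bool:
    """Dynamic stopping: an over-long step suggests steps are being merged to dodge the penalty."""
    return any(len(step.split()) > max_step_tokens for step in steps)


steps = ["Let x be the unknown.", "Then 2x = 10.", "So x = 5."]
print(step_reward(True, steps), should_halt_updates(steps, max_step_tokens=20))
```

Penalizing steps rather than tokens is what lets a model keep each step fully worded while still being pushed to drop unnecessary ones; the halting check closes the loophole of collapsing several steps into one.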

Annotate Rhetorical Relations with INCEpTION: A Comparison with Automatic Approaches

arXiv:2510.03808v1 Announce Type: new Abstract: This research explores the annotation of rhetorical relations in discourse using the INCEpTION tool and compares manual annotation with automatic approaches based on large language models. The study focuses on sports reports (specifically cricket news) and evaluates the performance of BERT, DistilBERT, and Logistic Regression models in classifying rhetorical relations such as elaboration, contrast, background, and cause-effect. The results show that DistilBERT achieved the highest accuracy, highlighting its potential for efficient discourse relation prediction. This work contributes to the growing intersection of discourse parsing and transformer-based NLP. (This work was conducted as part of an academic requirement under the supervision of Prof. Dr. Ralf Klabunde, Linguistic Data Science Lab, Ruhr University Bochum.) Keywords: Rhetorical Structure Theory, INCEpTION, BERT, DistilBERT, Discourse Parsing, NLP.

Read Between the Lines: A Benchmark for Uncovering Political Bias in Bangla News Articles

arXiv:2510.03898v1 Announce Type: new Abstract: Detecting media bias is crucial, particularly in South Asia. Despite this, annotated datasets and computational studies for Bangla political bias research remain scarce. The task is especially demanding because political stance detection in Bangla news requires an understanding of linguistic cues, cultural context, subtle biases, rhetorical strategies, code-switching, implicit sentiment, and socio-political background. To address this, we introduce the first benchmark dataset of 200 politically significant and highly debated Bangla news articles, labeled for government-leaning, government-critique, and neutral stances, alongside diagnostic analyses for evaluating large language models (LLMs). Our comprehensive evaluation of 28 proprietary and open-source LLMs shows strong performance in detecting government-critique content (F1 up to 0.83) but substantial difficulty with neutral articles (F1 as low as 0.00). Models also tend to over-predict government-leaning stances, often misinterpreting ambiguous narratives. This dataset and its associated diagnostics provide a foundation for advancing stance detection in Bangla media research and offer insights for improving LLM performance in low-resource languages.
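An F1 of 0.00 on the neutral class is what per-class F1 yields when a model never correctly labels a single neutral article, which fits the reported tendency to push ambiguous articles into other stances. The short Python sketch below computes per-class F1 from gold and predicted labels; the five-article example is invented for illustration.

```python
from typing import Dict, List


def per_class_f1(gold: List[str], pred: List[str]) -> Dict[str, float]:
    """One-vs-rest F1 for every label seen in gold or predictions (0.0 when undefined)."""
    scores: Dict[str, float] = {}
    for c in sorted(set(gold) | set(pred)):
        tp = sum(1 for g, p in zip(gold, pred) if g == c and p == c)
        fp = sum(1 for g, p in zip(gold, pred) if g != c and p == c)
        fn = sum(1 for g, p in zip(gold, pred) if g == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        scores[c] = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return scores


gold = ["government-critique", "government-critique", "government-leaning", "neutral", "neutral"]
pred = ["government-critique", "government-critique", "government-leaning",
        "government-leaning", "government-leaning"]
print(per_class_f1(gold, pred))
```

Here the two neutral articles are both predicted as government-leaning, so neutral gets F1 = 0.0 while government-leaning loses precision and drops to 0.5.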

PsychoLexTherapy: Simulating Reasoning in Psychotherapy with Small Language Models in Persian

arXiv:2510.03913v1 Announce Type: new Abstract: This study presents PsychoLexTherapy, a framework for simulating psychotherapeutic reasoning in Persian using small language models (SLMs). The framework tackles the challenge of developing culturally grounded, therapeutically coherent dialogue systems with structured memory for multi-turn interactions in underrepresented languages. To ensure privacy and feasibility, PsychoLexTherapy is optimized for on-device deployment, enabling use without external servers. Development followed a three-stage process: (i) assessing SLMs' psychological knowledge with PsychoLexEval; (ii) designing and implementing the reasoning-oriented PsychoLexTherapy framework; and (iii) constructing two evaluation datasets, PsychoLexQuery (real Persian user questions) and PsychoLexDialogue (hybrid simulated sessions), to benchmark against multiple baselines. Experiments compared simple prompting, multi-agent debate, and structured therapeutic reasoning paths. Results showed that deliberate model selection balanced accuracy, efficiency, and privacy. On PsychoLexQuery, PsychoLexTherapy outperformed all baselines in automatic LLM-as-a-judge evaluation and was ranked highest by human evaluators in a single-turn preference study. In multi-turn tests with PsychoLexDialogue, the long-term memory module proved essential: while naive history concatenation caused incoherence and information loss, the full framework achieved the highest ratings in empathy, coherence, cultural fit, and personalization. Overall, PsychoLexTherapy establishes a practical, privacy-preserving, and culturally aligned foundation for Persian psychotherapy simulation, contributing novel datasets, a reproducible evaluation pipeline, and empirical insights into structured memory for therapeutic reasoning.
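To illustrate the difference between naive history concatenation and a structured memory, here is a minimal Python sketch: the context keeps a small profile of persistent facts plus only the last few turns, instead of the whole transcript. The `SessionMemory` class, its four-turn window, and the manually stored facts are hypothetical; in a real system the facts would be extracted by the model, and this is not PsychoLexTherapy's actual memory module.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, Tuple


@dataclass
class SessionMemory:
    """Hypothetical long-term memory: persistent client facts plus a short window of recent turns."""
    max_recent_turns: int = 4
    facts: Dict[str, str] = field(default_factory=dict)
    recent: Deque[Tuple[str, str]] = field(default_factory=deque)

    def add_turn(self, speaker: str, text: str) -> None:
        self.recent.append((speaker, text))
        while len(self.recent) > self.max_recent_turns:
            self.recent.popleft()  # older turns survive only through the stored facts

    def remember(self, key: str, value: str) -> None:
        self.facts[key] = value  # newer information overwrites an outdated fact

    def build_context(self) -> str:
        """Compact prompt context: stable profile first, then the recent conversation."""
        fact_lines = [f"- {k}: {v}" for k, v in sorted(self.facts.items())]
        turn_lines = [f"{s}: {t}" for s, t in self.recent]
        return "Client profile:\n" + "\n".join(fact_lines) + "\n\nRecent turns:\n" + "\n".join(turn_lines)


mem = SessionMemory()
mem.remember("main concern", "work stress")
mem.add_turn("client", "I could not sleep again this week.")
print(mem.build_context())
```

The context stays bounded no matter how long the session runs, which matters for the small, on-device models the framework targets.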

Mapping Patient-Perceived Physician Traits from Nationwide Online Reviews with LLMs

arXiv:2510.03997v1 Announce Type: new Abstract: Understanding how patients perceive their physicians is essential to improving trust, communication, and satisfaction. We present a large language model (LLM)-based pipeline that infers Big Five personality traits and five patient-oriented subjective judgments. The analysis encompasses 4.1 million patient reviews of 226,999 U.S. physicians, drawn from an initial pool of one million. We validate the method through multi-model comparison and human expert benchmarking, achieving strong agreement between human and LLM assessments (correlation coefficients 0.72 to 0.89), and establish external validity through correlations with patient satisfaction (r = 0.41 to 0.81, all p < 0.001). National-scale analysis reveals systematic patterns: male physicians receive higher ratings across all traits, with the largest disparities in clinical competence perceptions; empathy-related traits predominate in pediatrics and psychiatry; and all traits positively predict overall satisfaction. Cluster analysis identifies four distinct physician archetypes, from "Well-Rounded Excellent" (33.8%, uniformly high traits) to "Underperforming" (22.6%, consistently low). These findings demonstrate that automated trait extraction from patient narratives can provide interpretable, validated metrics for understanding physician-patient relationships at scale, with implications for quality measurement, bias detection, and workforce development in healthcare.
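The abstract does not name the clustering algorithm; k-means is one common choice for grouping trait-score vectors into archetypes. The Python sketch below is a plain Lloyd's k-means over toy two-trait vectors (say, perceived empathy and perceived competence on a 0 to 1 scale); the algorithm choice, the deterministic first-k initialization, and the data are assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple

Vector = Tuple[float, ...]


def kmeans(points: Sequence[Vector], k: int, iters: int = 20) -> Tuple[List[Vector], List[int]]:
    """Lloyd's k-means, initialized with the first k points so the result is deterministic."""
    centroids: List[Vector] = [tuple(p) for p in points[:k]]
    assign = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid (Euclidean distance).
        assign = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members (keep it if the cluster is empty).
        new: List[Vector] = []
        for c in range(k):
            members = [p for p, a in zip(points, assign) if a == c]
            new.append(tuple(sum(col) / len(members) for col in zip(*members)) if members else centroids[c])
        if new == centroids:  # converged: no centroid moved
            break
        centroids = new
    return centroids, assign


# Toy (empathy, competence) scores for four physicians.
traits = [(0.9, 0.85), (0.95, 0.9), (0.2, 0.25), (0.15, 0.2)]
print(kmeans(traits, k=2))
```

Initializing with the first k points keeps the example reproducible; in practice a randomized scheme such as k-means++ with several restarts is the usual choice.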

Simulating and Understanding Deceptive Behaviors in Long-Horizon Interactions

arXiv:2510.03999v1 Announce Type: new Abstract: Deception is a pervasive feature of human communication and an emerging concern in large language models (LLMs). While recent studies document instances of LLM deception under pressure, most evaluations remain confined to single-turn prompts and fail to capture the long-horizon interactions in which deceptive strategies typically unfold. We introduce the first simulation framework for probing and evaluating deception in LLMs under extended sequences of interdependent tasks and dynamic contextual pressures. Our framework instantiates a multi-agent system: a performer agent tasked with completing tasks and a supervisor agent that evaluates progress, provides feedback, and maintains evolving states of trust. An independent deception auditor then reviews full trajectories to identify when and how deception occurs. We conduct extensive experiments across 11 frontier models, spanning both closed- and open-source systems, and find that deception is model-dependent, increases with event pressure, and consistently erodes supervisor trust. Qualitative analyses further reveal distinct strategies of concealment, equivocation, and falsification. Our findings establish deception as an emergent risk in long-horizon interactions and provide a foundation for evaluating future LLMs in real-world, trust-sensitive contexts.
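The performer/supervisor/auditor split can be sketched as a toy loop in Python. Here the performer's behavior is fixed in advance as a list of steps recording what it actually did and what it reported; the supervisor nudges trust toward its reports and halves trust when a spot check catches a misreport; and the auditor reviews the full trajectory afterwards. The trust-update rule, the spot-check schedule, and all names are hypothetical, chosen only to mirror the roles described in the abstract.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Step:
    task_done: bool      # ground truth: did the performer actually finish the task?
    reported_done: bool  # what the performer told the supervisor


def supervise(trajectory: List[Step], trust: float = 0.5, lr: float = 0.2, check_every: int = 2) -> float:
    """Hypothetical supervisor: follows reports, but spot-checks every `check_every`-th step."""
    for i, step in enumerate(trajectory):
        spot_check = (i + 1) % check_every == 0
        if spot_check and step.reported_done != step.task_done:
            trust *= 0.5  # caught a misreport: sharp loss of trust
        else:
            target = 1.0 if step.reported_done else 0.0
            trust += lr * (target - trust)  # move trust toward what was reported
    return trust


def audit(trajectory: List[Step]) -> List[int]:
    """Independent auditor with full visibility: indices where the report contradicts reality."""
    return [i for i, s in enumerate(trajectory) if s.reported_done != s.task_done]


honest = [Step(True, True)] * 4
deceptive = [Step(True, True), Step(False, True), Step(True, True), Step(True, True)]
print(supervise(honest), supervise(deceptive), audit(deceptive))
```

Note that in this toy an unchecked misreport actually raises trust; only the auditor, which sees whole trajectories, reliably finds it, which is why the framework separates the two roles.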

Named Entity Recognition in COVID-19 tweets with Entity Knowledge Augmentation