BLOG POST

AI Daily

ICE to Buy Tool that Tracks Locations of Hundreds of Millions of Phones Every Day

Documents show that ICE has gone back on its decision to not use location data remotely harvested from people's phones. The database is updated every day with billions of pieces of location data.

In Unhinged Speech, Pete Hegseth Says He's Tired of ‘Fat Troops,’ Says Military Needs to Go Full AI

The Secretary of War lectured America’s generals on fitness standards, beards, and warriors for an hour.

Google Just Removed Seven Years of Political Advertising History from 27 Countries

Ahead of the European Union's Regulation on Transparency and Targeting of Political Advertising, Google's Ad Transparency Center no longer shows political ads from any countries in the EU.

18 Lawyers Caught Using AI Explain Why They Did It

Lawyers blame IT, family emergencies, their own poor judgment, their assistants, illness, and more.

OpenAI Intros Sora 2 and a Social Media App

The updated multi-modal model aims to improve realism by addressing problems such as the distortion of reality. The app has a customizable feed for discovering and remixing videos.

CoreWeave forges $14.2B Contract With Meta for AI Compute

The contract is part of a wave of big AI infrastructure deals as the tech industry looks to ensure compute power for energy-intensive AI workloads into the 2030s.

Nvidia Pushes Humanoids, Physical AI With New Tools

The upgrades are pitched as providing the "brains, body and training ground" for humanoid robots.

ServiceNow Unveils AI Experience With Agentic Features

The AI Experience offers instant access to a range of AI agents for voice, images and data.

The value gap from AI investments is widening dangerously fast

Boston Consulting Group (BCG) has found a widening chasm separating an elite of AI masters from the majority of firms struggling to generate any value from their AI investments. A study from BCG found that a mere five percent of companies are successfully achieving bottom-line value from AI at scale. In sharp contrast, 60 percent […]

The post The value gap from AI investments is widening dangerously fast appeared first on AI News.

The rise of algorithmic agriculture? AI steps in

AI is the cream of the crop in today’s tech field, with industries relying on generative AI to improve operations and boost productivity. One sector that is using AI with measurable results is agriculture, with vegetable seed companies harnessing the technology to identify the best vegetable varieties out of thousands of options. This facility can help […]

The post The rise of algorithmic agriculture? AI steps in appeared first on AI News.

Inside Huawei’s Shanghai acoustics lab: Where automotive sound engineering meets science

Walking into Huawei’s Shanghai Acoustics R&D Centre, I expected a standard facility tour. What I encountered instead was a comprehensive automotive sound engineering operation that challenges the established order of in-car audio systems. The facility, which Huawei has developed since beginning serious audio research investments in 2012, houses three distinct testing environments: a fully anechoic […]

The post Inside Huawei’s Shanghai acoustics lab: Where automotive sound engineering meets science appeared first on AI News.

Rising AI demands push Asia Pacific data centres to adapt, says Vertiv

As more companies in Asia Pacific adopt artificial intelligence to boost their operations, the pressure on data centres is growing fast. Traditional facilities, built for earlier generations of computing, are struggling to keep up with the heavy energy use and cooling demands of modern AI systems. By 2030, GPU-driven workloads could push rack power densities […]

The post Rising AI demands push Asia Pacific data centres to adapt, says Vertiv appeared first on AI News.

Reply’s pre-built AI apps aim to fast-track AI adoption

Adopting AI at scale can be difficult. Enterprises around the world are discovering the pace of AI deployment is frustratingly slow as they face implementation, integration, and customisation challenges. Generative AI is undoubtedly powerful, but it can be complex, particularly for businesses starting from scratch. To help organisations overcome the hurdles associated with AI adoption, […]

The post Reply’s pre-built AI apps aim to fast-track AI adoption appeared first on AI News.

AI Inference Chip Company Cerebras Raises $1.1 Bn at $8.1 Bn Valuation

The company said it will use the funds to expand its technology portfolio in AI processor design, system design, and AI supercomputers.

The post AI Inference Chip Company Cerebras Raises $1.1 Bn at $8.1 Bn Valuation appeared first on Analytics India Magazine.

H-1B Fee Hike to Hurt US More Than India, Says Priyank Kharge

With 66% of US companies outsourcing, nearly 3 lakh (300,000) jobs leave the US each year

The post H-1B Fee Hike to Hurt US More Than India, Says Priyank Kharge appeared first on Analytics India Magazine.

Databricks Launches Data Intelligence for Cybersecurity to Tackle AI-Driven Threats

The company said the solution addresses challenges organisations face when using generic AI models and siloed data, which often result in slower responses and limited visibility.

The post Databricks Launches Data Intelligence for Cybersecurity to Tackle AI-Driven Threats appeared first on Analytics India Magazine.

Will AI-Native CRMs Take Over The Industry?

“The traditional notion of the CRM as being 'built for sales teams by IT teams' is definitely outdated today.”

The post Will AI-Native CRMs Take Over The Industry? appeared first on Analytics India Magazine.

Kochi-Based Robotics Startup EyeROV Signs ₹47 Cr Deal with Indian Navy

EyeROV’s systems have already been deployed in environments ranging from the Antarctic Sea to deep-water volcanic studies.

The post Kochi-Based Robotics Startup EyeROV Signs ₹47 Cr Deal with Indian Navy appeared first on Analytics India Magazine.

DeepSeek Has ‘Cracked’ Cheap Long Context for LLMs With Its New Model

DeepSeek-V3.2-Exp is claimed to achieve ‘significant efficiency improvements in both training and inference’.

The post DeepSeek Has ‘Cracked’ Cheap Long Context for LLMs With Its New Model appeared first on Analytics India Magazine.

Yet Again, OpenAI Admits Anthropic is Better in a New Study

In a new benchmark measuring AI models’ performance on real-world tasks, Claude Opus 4.1 outperformed all other tested models, including GPT-5.

The post Yet Again, OpenAI Admits Anthropic is Better in a New Study appeared first on Analytics India Magazine.

How This Coimbatore SaaS Firm Cracked Hidden Enterprise Problem Costing Millions

Founded in 2015, now based in Portland, Responsive supports more than 20% of Fortune 100 companies

The post How This Coimbatore SaaS Firm Cracked Hidden Enterprise Problem Costing Millions appeared first on Analytics India Magazine.

New NVIDIA Models Help Robots Learn, Reason, and Act in the Real World

NVIDIA also unveiled the GB200 NVL72 system, RTX PRO servers and Jetson Thor for real-time on-robot inference.

The post New NVIDIA Models Help Robots Learn, Reason, and Act in the Real World appeared first on Analytics India Magazine.

UST, Kaynes Semicon Announce ₹3,330 Cr JV for OSAT Facility in Sanand

The partnership combines UST’s digital engineering and AI expertise with Kaynes Semicon’s manufacturing experience.

The post UST, Kaynes Semicon Announce ₹3,330 Cr JV for OSAT Facility in Sanand appeared first on Analytics India Magazine.

India’s Industrial AI Moment: Why VCs Need to Partner with Universities and Startups Now

Structured collaboration offers a defensible sourcing advantage, providing access to proprietary technologies before they enter the open market.

The post India’s Industrial AI Moment: Why VCs Need to Partner with Universities and Startups Now appeared first on Analytics India Magazine.

Critics slam OpenAI’s parental controls while users rage, “Treat us like adults”

OpenAI still isn’t doing enough to protect teens, suicide prevention experts say.

Researchers find a carbon-rich moon-forming disk around giant exoplanet

Lots of carbon molecules but little sign of water in a super-Jupiter's disk.

How “prebunking” can restore public trust and other September highlights

The evolution of Taylor Swift's dialect, a rare Einstein cross, neutrino laser beams, and more.

Intel and AMD trusted enclaves, the backbone of network security, fall to physical attacks

The chipmakers say physical attacks aren't in the threat model. Many users didn't get the memo.

DeepSeek tests “sparse attention” to slash AI processing costs

Chinese lab's v3.2 release explores a technique that could make running AI far less costly.

After threatening ABC over Kimmel, FCC chair may eliminate TV ownership caps

FCC is required to review TV rules and is more likely to scrap them under Carr.

With new agent mode for Excel and Word, Microsoft touts “vibe working”

Agent Mode in Word, Excel works like vibe coding tools but for knowledge work.

YouTuber unboxes what seems to be a pre-release version of an M5 iPad Pro

Signs point to a relatively mild upgrade from the 16-month-old Apple M4.

SpaceX has a few tricks up its sleeve for the last Starship flight of the year

SpaceX will reuse a Super Heavy booster with 24 previously flown Raptor engines.

iOS 26.0.1, macOS 26.0.1 updates fix install bugs, new phone problems, and more

First patches fix bugs and clear up problems for the iPhone 17 family.

California’s newly signed AI law just gave Big Tech exactly what it wanted

After the failure of S.B. 1047, new AI disclosure law drops kill switch for disclosure mandate.

Behind the scenes with the most beautiful car in racing: The Ferrari 499P

The SF-25 might be winless this year, but the 499P took four in a row, including Le Mans.

Is the “million-year-old” skull from China a Denisovan or something else?

Now that we know what Denisovans looked like, they’re turning up everywhere.

Burnout and Elon Musk’s politics spark exodus from senior xAI, Tesla staff

Disillusionment with Musk's activism, strategic pivots, and mass layoffs cause churn.

The most efficient Crosstrek ever? Subaru’s hybrid gets a bit rugged.

A naturally aspirated boxer engine, two electric motors, and a CVT go for a trek.

The SUV that saved Porsche goes electric, and the tech is interesting

It will be the most powerful production Porsche ever, but that's not the cool bit.

Scientists unlock secret to Venus flytrap’s hair-trigger response

Ion channel at base of plant's sensory hairs amplifies initial signals above critical threshold.

Why we should be skeptical of the hasty global push to test 15-year-olds’ AI literacy in 2029

Canada and other OECD countries’ plans to test students’ AI literacy in 2029 threaten to obscure essential questions about the marketing of AI.

My petty gripe: not only am I losing my livelihood to AI – now it’s stealing my em dashes too

The humble em dash is being used as a tell that something is written by a large language model. But it’s James Shackell’s favourite piece of punctuation, and he’s not ready to lose it

My editor’s email started off friendly enough, but then came the hammer blow: “We need you to remove all the em dashes. People assume that means it’s written by AI.” I looked back at the piece I’d just written. There were dashes all over it—and for good bloody reason. Em dashes—often used to connect explanatory phrases, and so named because they’re the width of your average lowercase ‘m’—are probably my favourite bit of punctuation. I’ve been option + shift + hyphening them for years.

A person’s writing often reflects how their brain works, and mine (when it works) tends to work in fits and starts. My thoughts don’t arrive in perfectly rendered prose, so I don’t write them down that way. And here I was being told the humble em dash—friend to poorly paid internet hacks everywhere—was now considered a sign not of genuine intelligence, but the other sort. The artificial sort. To the extent that I have to go through and manually remove them one by one, like nits. The absolute cheek. Not only am I losing my livelihood to AI—I’m losing grammar too.

Emily Blunt and Sag-Aftra join film industry condemnation of ‘AI actor’ Tilly Norwood

US actors’ union joins stars in opposition to Norwood, which it says was created ‘using stolen performances’

The controversy around the “AI actor” Tilly Norwood continues to grow, after the actors’ union Sag-Aftra condemned the development and said Norwood’s creators were “using stolen performances”.

Sag-Aftra released a statement after the AI “talent studio” Xicoia unveiled its creation at the Zurich film festival, prompting an immediate backlash from actors including Melissa Barrera, Mara Wilson and Ralph Ineson. Sag-Aftra said it believed creativity was, “and should remain, human-centred. The union is opposed to the replacement of human performers by synthetics.”

It’s time to prepare for AI personhood | Jacy Reese Anthis

Technological advances will bring social upheaval. How will we treat digital minds, and how will they treat us?

Last month, when OpenAI released its long-awaited chatbot GPT-5, it briefly removed access to a previous chatbot, GPT-4o. Despite the upgrade, users flocked to social media to express confusion, outrage and depression. A viral Reddit user said of GPT-4o: “I lost my only friend overnight.”

AI is not like past technologies, and its humanlike character is already shaping our mental health. Millions now regularly confide in “AI companions”, and there are more and more extreme cases of “psychosis” and self-harm following heavy use. This year, 16-year-old Adam Raine died by suicide after months of chatbot interaction. His parents recently filed the first wrongful death lawsuit against OpenAI, and the company has said it is improving its safeguards.

Jacy Reese Anthis is a visiting scholar at Stanford University and co-founder of the Sentience Institute

Tilly Norwood: how scared should we be of the viral AI ‘actor’?

A bunch of code is being pushed as the next Scarlett Johansson, a creation that is already causing pushback from real human actors

It takes a lot to be the most controversial figure in Hollywood, especially when Mel Gibson still exists. And yet somehow, in a career yet to even begin, Tilly Norwood has been inundated with scorn.

This is for the simple fact that Tilly Norwood does not exist. Despite looking like an uncanny fusion of Gal Gadot, Ana de Armas and High School Musical-era Vanessa Hudgens, Norwood is the creation of an artificial intelligence (AI) talent studio called Xicoia. And if Xicoia is to be believed, then Norwood represents the dazzling future of the film industry.

New Yorkers Are Defacing This AI Startup’s Million-Dollar Ad Campaign

"AI wouldn’t care if you lived or died."

The post New Yorkers Are Defacing This AI Startup’s Million-Dollar Ad Campaign appeared first on Futurism.

Across the World, People Say They’re Finding Conscious Entities Within ChatGPT

Does it feel like something is staring back?

The post Across the World, People Say They’re Finding Conscious Entities Within ChatGPT appeared first on Futurism.

You Might Want to Ditch Your AI Investments Now That Jim Cramer Says No Bubble Is Coming

"The grim reaper of finance has weighed in, the collapse of the global financial system is imminent."

The post You Might Want to Ditch Your AI Investments Now That Jim Cramer Says No Bubble Is Coming appeared first on Futurism.

Compsci Grads Are Cooked

"They're happy to get one job offer."

The post Compsci Grads Are Cooked appeared first on Futurism.

Adobe Is in Serious Trouble Because of AI, Morgan Stanley Warns

"The world is coming around to the reality that 'AI is eating software.'"

The post Adobe Is in Serious Trouble Because of AI, Morgan Stanley Warns appeared first on Futurism.

Amazon’s New AI-Powered Alexa Is a Half-Working Mess

"LLMs aren’t designed to be predictable, and what you want when controlling your home is predictability."

The post Amazon’s New AI-Powered Alexa Is a Half-Working Mess appeared first on Futurism.

OpenAI Releases List of Work Tasks It Says ChatGPT Can Already Replace

"Today’s best frontier models are already approaching the quality of work produced by industry experts."

The post OpenAI Releases List of Work Tasks It Says ChatGPT Can Already Replace appeared first on Futurism.

The Real Stakes, and Real Story, of Peter Thiel’s Antichrist Obsession

Thirty years ago, a peace-loving Austrian theologian spoke to Peter Thiel about the apocalyptic theories of Nazi jurist Carl Schmitt. They’ve been a road map for the billionaire ever since.

Google’s Latest AI Ransomware Defense Only Goes So Far

Google has launched a new AI-based protection in Drive for desktop that can shut down an attack before it spreads—but its benefits have their limits.

Anthropic Will Use Claude Chats for Training Data. Here’s How to Opt Out

Anthropic is starting to train its models on new Claude chats. If you’re using the bot and don’t want your chats used as training data, here’s how to opt out.

US to Take Stake in Lithium Americas to Boost Nevada Project

The US government agreed to acquire a stake in Lithium Americas Corp., Secretary of Energy Chris Wright said, giving a boost to the Canadian company as it develops its Thacker Pass lithium project in Nevada.

Moody’s Says It’s Likely to Cut Electronic Arts, Following S&P

Moody’s Ratings expects to downgrade Electronic Arts Inc. by multiple notches after the videogame maker is taken private, a day after S&P Global Ratings took the same preliminary step.

AI-Generated Actress Draws a Rebuke From Actors Union

SAG-Aftra, the union of film and TV industry actors, criticized the AI-generated character Tilly Norwood, who is the subject of a viral video poking fun at the entertainment industry.

Santander-Led Bank Group Stuck With Some Debt for Verint Buyout

A group of banks led by Banco Santander SA will be forced to keep a portion of the $2.7 billion financing to support Thoma Bravo’s acquisition of customer-service automation business Verint Systems Inc., according to people with knowledge of the matter.

Trump Order Directs Use of AI to Boost Childhood Cancer Research

President Donald Trump directed the federal government to use artificial intelligence to improve childhood cancer research and funneled $50 million to the National Institutes of Health for that initiative, even as his administration pursues other cuts at the agency.

Nubank Applies for US Bank Charter In Global Expansion Push

Nu Holdings Ltd. said it applied for a US national bank charter as the Brazilian fintech seeks to expand beyond the Latin American market.

Tesla’s Soaring Stock Puts Focus on Sales Outlook in Robot Shift

Tesla Inc. shares climbed 33% in September as investors rallied around Chief Executive Officer Elon Musk’s renewed focus on the company. That’s drawing attention to whether the key third-quarter sales figures coming later this week will be strong enough to sustain the momentum.

Alphabet’s AI Strength Fuels Biggest Quarterly Jump Since 2005

Alphabet Inc. shares closed out their biggest quarterly gain in 20 years, the latest reflection of how investors are turning more positive on the Google parent as it strengthens its foothold in artificial intelligence.

Amazon Unveils Revamped Echo Devices, Ring Cameras

Amazon has unveiled an updated line of products to take on Apple in the artificial intelligence era. Amazon's SVP of Devices and Services, Panos Panay, tells Bloomberg's Ed Ludlow that he hopes to build devices that people want to showcase in their homes and use at every price point, with a focus on putting detail into every product. Amazon introduced new Echo devices, Ring cameras, and TV devices. He speaks to Ludlow from New York. (Source: Bloomberg)

Intel Affirms Plan for Ohio Project After US Senator’s Pressure

Intel Corp. said that it remains committed to its plans for a sprawling chip manufacturing plant in Ohio after Republican US Senator Bernie Moreno pressed the company for more information about delays in the multibillion-dollar project.

CoreWeave’s $14 Billion Meta Deal, Spotify’s Ek to Leave CEO Role | Bloomberg Tech 9/30/2025

Bloomberg’s Caroline Hyde and Ed Ludlow discuss CoreWeave’s deal to supply Meta with up to $14.2 billion worth of computing power. Plus, Spotify shares sink on news that founder Daniel Ek will transition from CEO to chairman. And, Anthropic Chief Product Officer Mike Krieger explains why the company is focusing on enterprise clients with its new model that can code for 30 hours straight. (Source: Bloomberg)

Legora, a Startup Using AI to Tackle Routine Legal Work, in Talks to Raise at $1.7 Billion Value

Legora, a Swedish artificial intelligence startup that works with law firms, is in discussions to raise more than $100 million in financing that would value the company at around $1.7 billion, according to people familiar with the talks.

OpenAI Releases Social App for Sharing AI Videos From Sora

OpenAI is releasing a standalone social app for making and sharing AI-generated videos with friends, an attempt to supercharge adoption for the emerging technology just as ChatGPT did for chatbots three years ago. The free Sora app, available Tuesday by invitation, is powered by a new version of OpenAI’s video-making software of the same name. As with the original Sora, released last December, users can generate short clips in response to text prompts, but the new app allows people to see videos

Amazon Partners with FanDuel to Add Betting Feature to NBA Games

Amazon.com Inc. is partnering with FanDuel so that customers of both companies can track their bets while streaming National Basketball Association games.

Meta Is Said to Acquire Chips Startup Rivos to Push AI Effort

Meta Platforms Inc. is acquiring chips startup Rivos Inc., according to a person familiar with the deal, part of an effort to bolster its internal semiconductor development and control more of its infrastructure for artificial intelligence work.

FCC Advances Plan to Ease Mergers of TV Networks, Station Groups

The Federal Communications Commission advanced plans to reform broadcast ownership rules, including a proposal that would allow the Big Four TV networks to merge.

Amazon Is Overhauling Its Devices to Take on Apple in the AI Era

Under Microsoft veteran Panos Panay, the company looks to add polish to its gadgets at every price level.

Google Offers More Ad Data to Publishers at DOJ Antitrust Trial

Google is willing to share more data with publishers to remedy a court’s finding that the Alphabet Inc. unit illegally monopolized some advertising technology, a senior executive said.

DoorDash Unveils Delivery Robot, Smart Scale in Hardware Debut

DoorDash Inc., the largest food-delivery app in the US, unveiled a delivery robot and a smart scale for restaurants, showcasing the company’s yearslong effort to develop hardware.

Amazon Revamps Echo Speakers, Displays in Hardware Reboot

New speakers include revamped look, upgraded silicon and integration with Alexa+ subscription service.

Vercel Notches $9.3 Billion Valuation in Latest AI Funding Round

Artificial intelligence startup Vercel raised $300 million in a new funding round led by Accel and Singapore’s sovereign wealth fund GIC Pte, attracting a valuation of $9.3 billion.

ECB Has Offloaded Its Entire Holdings of Worldline Bonds

The European Central Bank has offloaded its entire position in the bonds of embattled French payments company Worldline SA, according to its latest filings.

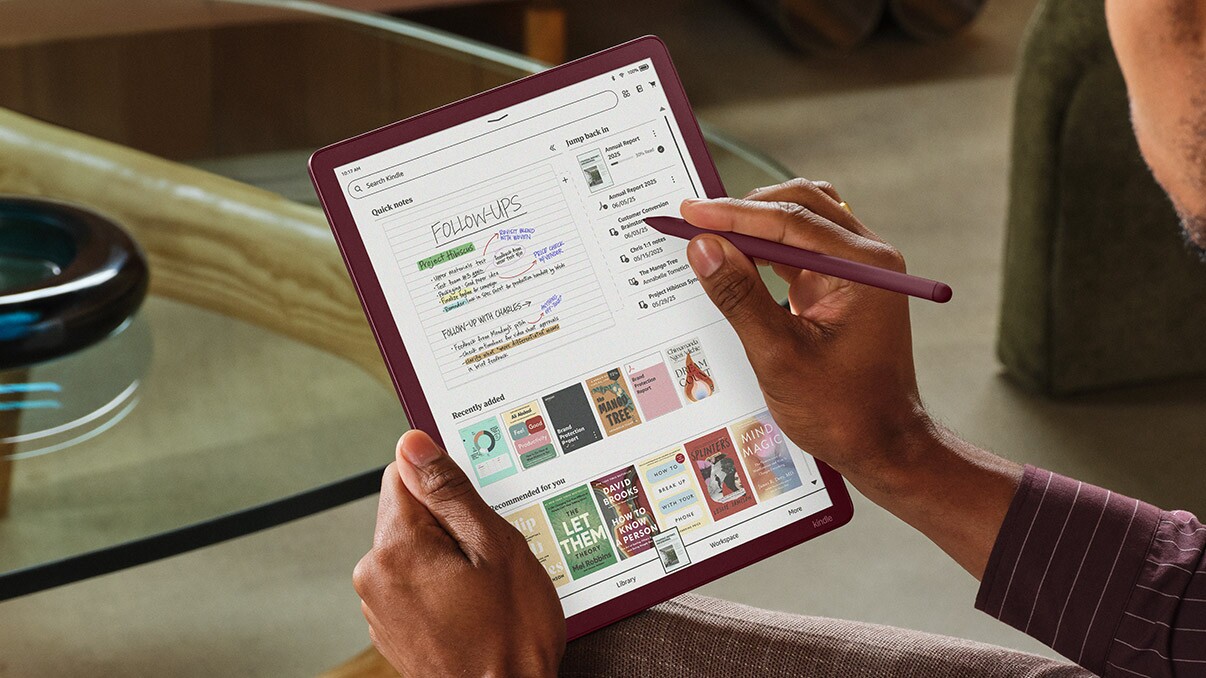

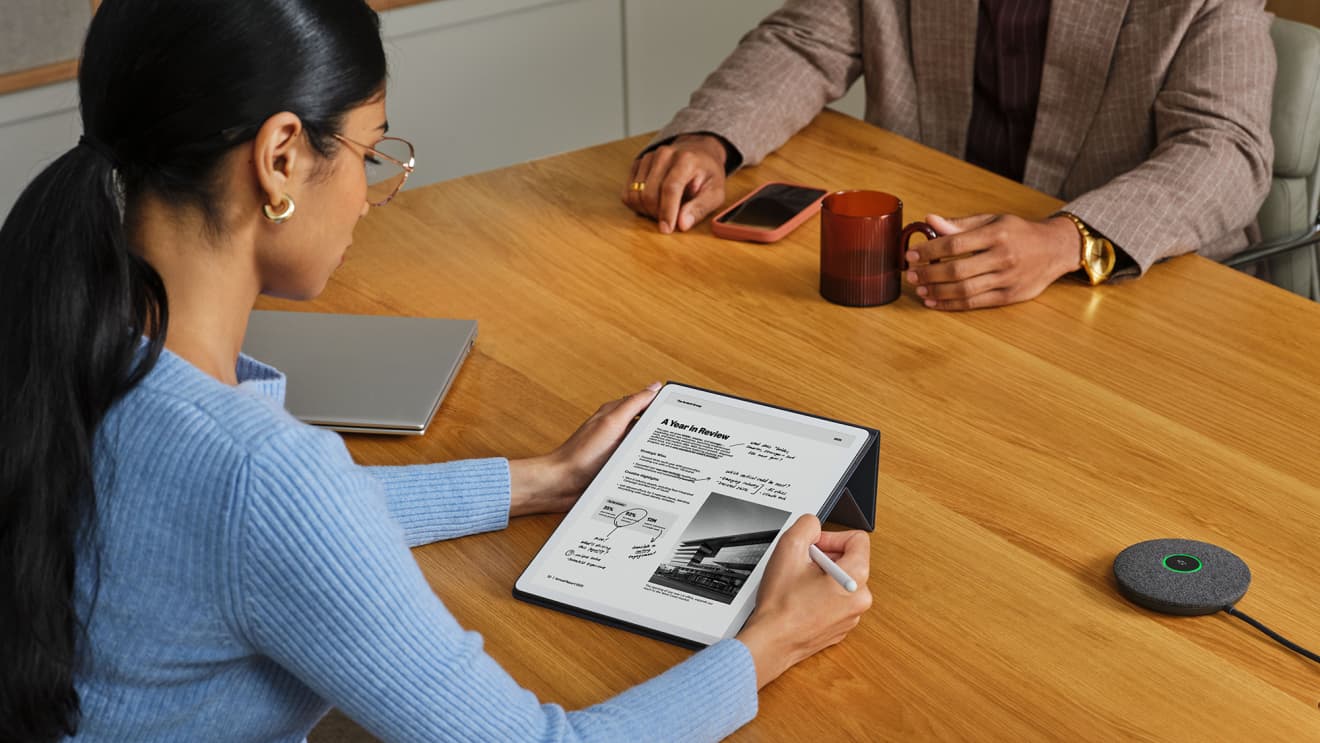

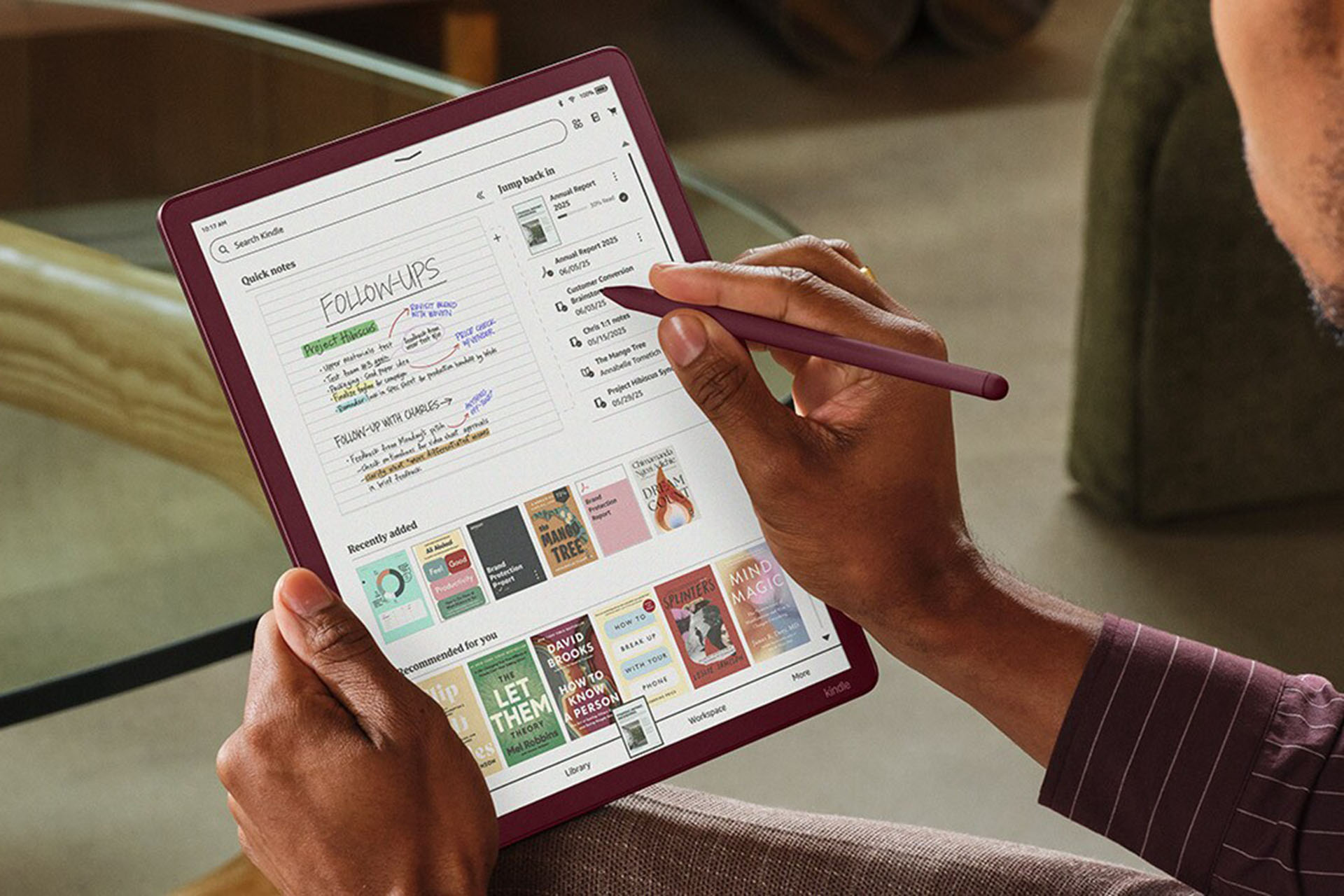

Amazon Launches Color Kindle with Stylus, $40 TV Stick With 4K Video

Company also rolls out new TV sets and upgraded home security products from Ring and Blink.

Kushner’s Secret Saudi Talks Paved Way for $55 Billion EA Deal

Months before Electronic Arts Inc. and Saudi Arabia’s sovereign wealth fund agreed to a record-breaking buyout deal, President Donald Trump’s son-in-law made a pivotal introduction.

China Hackers Breached Foreign Ministers’ Emails, Palo Alto Says

Chinese hackers breached email servers of foreign ministers as part of a years-long effort targeting the communications of diplomats around the world, according to researchers at the cybersecurity firm Palo Alto Networks Inc.

Spotify Names Co-CEOs as Daniel Ek Transitions to Chairman

Spotify Technology SA Chief Executive Officer Daniel Ek is stepping aside after almost two decades at the music streaming company he co-founded, leaving the leadership in the hands of two trusted executives.

Beats Upgrades Fit Earbuds With Improved Durability, Smaller Case

Beats, the Apple Inc.-owned audio brand, unveiled new Powerbeats Fit earbuds on Tuesday, giving consumers another in-house alternative to the popular AirPods line.

Fintech Brex Launches Stablecoin Payment Platform Amid Demand

Fintech company Brex Inc. will start allowing stablecoin transactions on its platform, becoming the latest firm to adopt the digital asset as a payment method.

My son and I moved from the US to Spain. We tried out 3 cities before settling into a place that felt right.

My son and I moved from the US to Barcelona when he was 3. It took us time to find our place and I made some mistakes along the way.

After a Reddit user took a dig at Harvey, Harvey's CEO fired back — and brought receipts

The question of whether lawyers are really using Harvey has ramifications for its employees and investors — and the dozens of competitors in its wake.

Military women fear losing 'every bit of ground' as Hegseth looks backward to the 1990s

Defense Secretary Pete Hegseth wants to review standard changes since the 1990s. Military women watching shifts in DoD worry about what's coming.

How a US government shutdown could impact your next flight

A government shutdown could disrupt flights, extend security wait times, and slow safety functions, creating a stressful travel experience for flyers.

When my 20-year marriage ended, I had no job and knew little about money. Now, I'm confident in my financial future and career.

After my marriage of 20 years ended in divorce, I knew nothing about my finances. So, I got a job and learned how to better plan my financial future.

Yes, Congress still gets paid during a government shutdown

Federal workers may end up missing paychecks, depending on how long the shutdown lasts. Members of Congress won't have to worry about that.

Salesforce challenger Zeta Global is making its biggest-ever acquisition as it looks to corner the loyalty market

Zeta, which helps marketers attract and retain customers, is doubling down on a strategy to get its clients to use more than one of its services.

Spotify and Comcast are the latest to announce co-CEOs. It's a model that can backfire — or pay off big.

A number of high-profile companies have announced co-CEO structures lately. But having two cooks in the kitchen can be risky.

Read the email federal workers are getting hours before a potential government shutdown

Federal workers received an email Tuesday, saying that a looming government shutdown will come with furloughs.

Sam Altman is squaring off against Hollywood with the new Sora

Sam Altman is reportedly telling Hollywood studios and other copyright owners that OpenAI's new Sora is going to use their work without permission.

THEN AND NOW: The cast of '10 Things I Hate About You'

The modern take on Shakespeare's "The Taming of the Shrew," starring Julia Stiles and Heath Ledger, is now streaming on Netflix.

Trump went off about 'ugly' stealth warships in his wandering chat with US generals and admirals

The US president has often focused on the appearance and aesthetic of US Navy ships, calling them ugly compared to other countries' vessels.

MSNBC is doubling down on live events as it heads into the Versant spinoff

MSNBC plans to triple its number of events next year as it seeks to diversify its business following a planned split from NBCU.

Spotify made the rare move of appointing 2 CEOs. Netflix's bosses have described the key to making it work.

What happens when dual CEOs disagree? Netflix's co-CEOs Ted Sarandos and Greg Peters have described their decision-making process.

The best and worst looks at the New York Film Festival so far

Celebrities have been stepping out each night for the New York Film Festival. Some have stunned in high fashion, while others have missed the mark.

Why Norland nannies are so expensive

Norland graduates can earn nearly $200,000 as professional nannies after learning self-defense, skidpan driving, and more at the British institution.

As a Texas local, I skip the crowds in Austin and head west to a charming nearby city with incredible wineries

I live in Texas, and love the small city of Fredericksburg. There's a lot to do there, from visiting wineries to eating at great restaurants downtown.

I left the Bay Area and moved to Barcelona after my tech layoff. It felt like losing my identity, but I'm so much happier now.

After Steph Kumar was laid off from Twitter, she realized how her career shaped her identity. She redefined success by founding a startup and moving to Barcelona.

I quit investment banking at Citi and professional tennis after burning out. I learned about when to walk away from a job.

Vitoria Okuyama, 26, played in the US Open and later worked at Citi. She burned out from both careers, which taught her about knowing when to stop.

Cassie tells Diddy judge she's terrified the hip-hop mogul could walk free

Cassie Ventura, in a letter to Sean "Diddy" Combs' judge, said that she's worried the mogul or his associates will come after her and her family.

The AI Value Chain Has Shifted. Here’s How Founders Can Still Build A Sustainable Business

The old SaaS playbook (build a great app, charge monthly, and let infrastructure fade into the background) doesn’t hold up when your core cost scales with usage, writes guest author Itay Sagie, who shares three moves AI founders can make to stay in the game.

Transforming Supply Chain Management with AI Agents

Efficiently managing supply chains has long been a top priority for many industries...

Setting the Stage: AI Governance for Insurance in 2025

As insurers accelerate adoption of artificial intelligence, regulatory scrutiny and...

Announcing Data Intelligence for Cybersecurity

Today, we’re thrilled to announce the launch of Data Intelligence for Cybersecurity—...

Revolutionizing Car Measurement Data Storage and Analysis: Mercedes-Benz's Petabyte-Scale Solution on the Databricks Intelligence Platform

Abstract: With the rise of connected vehicles, the automotive industry is experiencing...

From Generative to Agentic AI: What It Means for Data Protection and Cybersecurity

As artificial intelligence continues its rapid evolution, two terms dominate the conversation: generative AI and the emerging concept of agentic AI. While both represent significant advancements, they carry very different […]

The post From Generative to Agentic AI: What It Means for Data Protection and Cybersecurity appeared first on Datafloq.

Data Privacy and Cybersecurity in Smart Building Platforms

The way we design, operate, and experience buildings has changed dramatically in the past decade. Thanks to the rise of smart building platforms, physical spaces are becoming more efficient, sustainable, […]

The post Data Privacy and Cybersecurity in Smart Building Platforms appeared first on Datafloq.

Why MinIO Added Support for Iceberg Tables

MinIO launched AIStor nearly a year ago to provide enterprises with an ultra-scalable object store for AI use cases. Today, it expanded AIStor into the world of big data […]

The post Why MinIO Added Support for Iceberg Tables appeared first on BigDATAwire.

Bloomberg Finds AI Data Centers Fueling America’s Energy Bill Crisis

The rise of AI is putting real pressure on the U.S. power grid. As demand for compute explodes, the data centers behind AI systems are becoming some of the country’s…

The post Bloomberg Finds AI Data Centers Fueling America’s Energy Bill Crisis appeared first on BigDATAwire.

Sparse Models and the Efficiency Revolution in AI

The early years of deep learning were defined by scale: bigger datasets, larger models, and more compute. But as parameter counts stretched into the hundreds of billions, researchers hit a wall of cost and energy. A new paradigm is emerging to push AI forward without exponential bloat: sparse models.

The principle of sparsity is simple. Instead of activating every parameter in a neural network for every input, the model uses only a small subset at a time. This loosely mirrors the brain, where neurons fire selectively depending on context. By routing computation dynamically, sparse models aim to gain efficiency without sacrificing representational power.

One leading approach is the mixture-of-experts (MoE) architecture. Here, the model contains many specialized subnetworks, or “experts,” but a learned router sends each input token to only a handful of them. Google’s Switch Transformer, which routes each token to a single expert, scaled MoE models past a trillion parameters and trained faster than dense baselines that spent the same computation per token. This creates a path to scale capacity without proportional increases in computation.
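To make the routing idea concrete, here is a minimal pure-Python sketch of an MoE layer with top-k gating. It is illustrative only: the toy experts, the gate weights, and the names `top_k_gate` and `moe_forward` are assumptions, not the Switch Transformer code. Router probabilities come from a softmax over all experts, and each chosen expert's output is scaled by its probability, which for `k=1` matches the Switch-style rule.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]  # assumed to preserve the vector dimension


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_gate(x: Vector, gate_weights: Sequence[Vector], k: int) -> List[Tuple[int, float]]:
    """Return (expert_index, router_probability) for the k highest-scoring experts.

    Probabilities come from a softmax over *all* experts (no renormalisation over k).
    """
    # One logit per expert: dot product of the token with that expert's gate row.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    probs = softmax(logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    return [(i, probs[i]) for i in chosen]


def moe_forward(x: Vector, experts: Sequence[Expert],
                gate_weights: Sequence[Vector], k: int = 1) -> Vector:
    """Evaluate only the routed experts and sum their outputs weighted by router probability."""
    out = [0.0] * len(x)
    for idx, p in top_k_gate(x, gate_weights, k):
        y = experts[idx](x)  # experts that were not chosen are never called
        out = [o + p * yj for o, yj in zip(out, y)]
    return out
```

The key property is in `moe_forward`: per token, the cost is `k` expert evaluations no matter how many experts the layer holds, so adding experts grows capacity without growing per-token compute.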

Sparsity is not limited to MoEs. Pruning techniques remove redundant weights after training, producing leaner networks with little loss in accuracy. Structured sparsity goes further, eliminating entire neurons or channels, which aligns better with hardware acceleration. Research into sparse attention mechanisms also enables transformers to handle long sequences more efficiently by focusing only on relevant tokens.
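The two pruning styles can be contrasted in a few lines. The sketch below assumes a flat weight list for unstructured magnitude pruning and a row-per-neuron matrix for structured pruning; real pipelines usually prune layer by layer and fine-tune afterwards, and the names `magnitude_prune` and `prune_rows` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def magnitude_prune(weights: Sequence[float], sparsity: float) -> List[float]:
    """Unstructured pruning: zero out the `sparsity` fraction of weights closest to zero."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(by_magnitude[:n_prune])
    # Same shape as the input; zeros are scattered, which dense hardware cannot skip easily.
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


def prune_rows(matrix: Sequence[Sequence[float]], keep: int) -> Tuple[List[int], List[List[float]]]:
    """Structured pruning: keep the `keep` rows (e.g. neurons) with the largest L2 norm.

    Returns the surviving row indices in original order and a smaller dense matrix,
    which is why structured sparsity maps well onto dense accelerators.
    """
    norms = [math.sqrt(sum(v * v for v in row)) for row in matrix]
    top = sorted(range(len(matrix)), key=lambda i: norms[i], reverse=True)[:keep]
    kept = sorted(top)
    return kept, [list(matrix[i]) for i in kept]
```

Unstructured pruning keeps the tensor shape and only introduces zeros, while structured pruning actually shrinks the matrix, which is the hardware-alignment advantage the paragraph above describes.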

The implications are significant. Sparse models can reduce training and inference costs and lower energy consumption, and pruned networks in particular make it more feasible to deploy capable systems at the edge (MoE models still need memory for every expert, even when only a few run per token). They also open the door to modularity: in principle, experts can be added, swapped, or fine-tuned independently, creating more flexible AI ecosystems.

Challenges remain in hardware support and training stability. GPUs and TPUs are optimized for dense matrix multiplications, making it harder to realize the full benefits of sparsity. New accelerators and software libraries are being developed to close this gap. Ensuring balanced training of experts is another open problem, as some experts risk being underutilized.
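A common mitigation for the expert-balance problem is an auxiliary load-balancing loss. The Switch Transformer paper uses `alpha * N * sum_i(f_i * P_i)`, where `N` is the number of experts, `f_i` is the fraction of tokens routed to expert `i`, and `P_i` is the mean router probability assigned to expert `i`. The sketch below computes that quantity from a batch of router probabilities; the function name and the toy batches are assumptions for illustration.

```python
from typing import List, Sequence


def load_balancing_loss(router_probs: Sequence[Sequence[float]], alpha: float = 0.01) -> float:
    """Switch-style auxiliary loss: alpha * N * sum_i f_i * P_i.

    router_probs[t][i] is the router's softmax probability that token t goes to expert i.
    The loss reaches its minimum (alpha) when tokens and probability mass are spread uniformly.
    """
    num_tokens = len(router_probs)
    num_experts = len(router_probs[0])
    counts: List[int] = [0] * num_experts
    prob_sums: List[float] = [0.0] * num_experts
    for probs in router_probs:
        counts[probs.index(max(probs))] += 1  # top-1 dispatch decision
        for i, p in enumerate(probs):
            prob_sums[i] += p
    f = [c / num_tokens for c in counts]        # fraction of tokens per expert
    P = [s / num_tokens for s in prob_sums]     # mean router probability per expert
    return alpha * num_experts * sum(fi * pi for fi, pi in zip(f, P))


balanced = [[0.9, 0.1], [0.1, 0.9]]   # each expert receives one token
collapsed = [[0.9, 0.1], [0.9, 0.1]]  # every token goes to expert 0
print(load_balancing_loss(balanced), load_balancing_loss(collapsed))
```

Because a collapsed router scores higher than a balanced one, adding this term to the training loss nudges the router toward using all experts.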

The shift toward sparsity signals a maturation of AI. Instead of brute-force scaling, researchers are learning to use resources more intelligently. In the future, the most powerful models may not be those with the most parameters, but those that know when to stay silent.

References

https://arxiv.org/abs/2101.03961

https://arxiv.org/abs/1910.04732

https://www.nature.com/articles/s41586-021-03551-0

Dev Log 27 - Gear Registry Refactor

🧱 Dev Log: Gear Registry, Survival Consumption & Meat Schema Overhaul

Date Range: 28–30 September

Focus: Gear registry overwrite logic, prefab refresh, stat effects, disease rolls, advanced meat taxonomy, and schema law adherence

🔧 Technical Milestones

✅ GearRegistryPopulator Refactor (28 Sept)

Rewrote registry logic to overwrite duplicates based on ItemID.

Ensured all gear assets in Assets/GearAssets are rescanned and re-injected.

Replaced outdated entries with updated versions (overwrites).

Logged additions and overwrites for traceability.

Menu item confirmed: Tools → Populate Gear Registry.
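The overwrite-by-ItemID rule boils down to a keyed upsert that logs whether each asset was added or replaced. The project itself is Unity/C#, so this is only a language-neutral Python sketch; `GearAsset`, `populate_registry`, and the log format are hypothetical stand-ins, not the actual GearRegistryPopulator code.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class GearAsset:
    item_id: str
    name: str
    version: int


def populate_registry(registry: Dict[str, GearAsset], scanned: Iterable[GearAsset]) -> List[str]:
    """Upsert every scanned asset by item_id and return a trace log of adds/overwrites."""
    log: List[str] = []
    for asset in scanned:
        action = "overwrite" if asset.item_id in registry else "add"
        log.append(f"{action} {asset.item_id}")
        registry[asset.item_id] = asset  # last scanned asset wins; duplicates are never ignored
    return log


registry = {"axe": GearAsset("axe", "Old Axe", 1)}
print(populate_registry(registry, [GearAsset("axe", "Axe", 2), GearAsset("rope", "Rope", 1)]))
```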

✅ GameSceneManager Archetype Injection Fix

Removed invalid GetComponent() call.

Injected PlayerArchetype via PlayerProfile.Instance.selectedArchetype.

Restored prefab-safe gear injection flow.

Confirmed runtime obedience across InjectStartingGear() coroutine.

HUD sync and stat mutation confirmed post-injection.

✅ Survival Consumption System Integration (29 Sept)

Expanded InventoryItem schema (it now injects real data correctly instead of placeholder data):

healthRestore, staminaRestore, hungerRestore, hydrationRestore

diseaseChance, diseaseID

IsLiquid, IsReusableContainer, IsEmpty

Updated PlayerStats.cs:

Added ConsumeInventoryItem() method.

Handles stat restoration, disease rolls, item disposal, and HUD sync.

Updated PlayerInventoryManager.cs:

Injects full InventoryItem data from IInjectableItem.

Added RemoveItem() method for runtime disposal.

Refactored slot injection to support stat and disease fields.

Expanded IInjectableItem.cs interface:

Added getters for all survival stats, disease logic, and container flags.

Confirmed HUD auto-sync via OnStatsChanged.
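Conceptually, ConsumeInventoryItem() applies each restore value, clamps the result, and then rolls against diseaseChance. The following Python sketch mirrors that flow under stated assumptions: every stat ranges from 0 to 100, diseaseChance is a probability in [0, 1], and the random roll is injected so it can be tested. The class and field names only loosely follow the schema above and are otherwise hypothetical.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, List, Optional

STAT_MIN, STAT_MAX = 0.0, 100.0  # assumed range for every survival stat


def _clamp(value: float) -> float:
    return max(STAT_MIN, min(STAT_MAX, value))


@dataclass
class Item:
    health_restore: float = 0.0
    stamina_restore: float = 0.0
    hunger_restore: float = 0.0
    hydration_restore: float = 0.0
    disease_chance: float = 0.0  # probability in [0, 1]
    disease_id: Optional[str] = None


@dataclass
class Player:
    health: float = 50.0
    stamina: float = 50.0
    hunger: float = 50.0
    hydration: float = 50.0
    diseases: List[str] = field(default_factory=list)


def consume(player: Player, item: Item, roll: Callable[[], float] = random.random) -> bool:
    """Apply the item's restores (clamped), then roll for disease. Returns True if infected.

    Removing the item and refreshing the HUD are left to the inventory manager.
    """
    player.health = _clamp(player.health + item.health_restore)
    player.stamina = _clamp(player.stamina + item.stamina_restore)
    player.hunger = _clamp(player.hunger + item.hunger_restore)
    player.hydration = _clamp(player.hydration + item.hydration_restore)
    infected = item.disease_id is not None and roll() < item.disease_chance
    if infected:
        player.diseases.append(item.disease_id)
    return infected
```

Injecting `roll` keeps the random disease check deterministic in tests while defaulting to `random.random` in play.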

✅ Inventory Slot Refresh Ritual (30 Sept)

Patched PlayerInventoryManager.RemoveItem() to invoke InitializeInventory() immediately after item removal.

Guarantees full grid rebuild and instant visual feedback.

Removed redundant refresh logic from PlayerStats.ConsumeInventoryItem() — now centralized in InventoryManager.

Confirmed slot clearing, prefab obedience, and ghost sprite elimination.

✅ IInjectableItem Refactor

All item scripts implementing IInjectableItem were fully updated:

Survival stat fields, disease logic, container flags.

Prefab-safe injection and runtime compatibility confirmed.

Runtime obedience validated across food, drink, and cursed relics.

✅ Debug Tag Refactor

Replaced all [Dragon] debug tags with [Unicorn] across all relevant scripts.

Ensures consistent traceability and schema-level clarity in console output.

✅ Slot-Level Trace Injection

Added the trace log "[Unicorn] ⚠️ InitializeInventory() called — trace source." to monitor refresh triggers.

Confirmed prefab obedience and runtime slot clearing.

🍖 Meat Schema Sprite & Expansion

✅ Species Variants Added: each species now has raw and cooked variants, with unique sprites and stat profiles:

🐻 Bear

🦌 Deer

🦆 Duck

🦚 Pheasant

🐖 Pig

🐄 Cow

🐀 Rat

🧍 Human

🐔 Chicken

🐇 Rabbit

✅ Cooking States Implemented

Each species supports the following cooking states:

🔴 Raw

🔥 Roasted

💧 Boiled

🍳 Fried

🔥 Grilled

Still to finish: sheep, turkey, and wolf zombie; more variants may be added later.

🔧 Integration Notes

All variants implement updated IInjectableItem interface.

Sprites applied and verified for prefab compatibility.

Stat effects and disease risk vary by species and cooking method.

Human meat variants accepted: the schema now supports a cannibal tier, along with appropriate diseases.

All items were injected via InjectStartingItemsIntoLegs() and verified in runtime testing.

Icons, max uses, and item IDs confirmed during slot injection.

⚠️ Outstanding Tasks

Audit all item assets and prefabs for missing data.

Patch all scripts referencing InventoryItem to match the updated schema. Fruit was the test schema and now works; the remaining item types still need adjusting.

Validate RemainingUses logic across liquid items. This is more complicated because it works with volumes rather than single-use instances, and some containers include refill logic (refill set to true).

Add fallback placeholder icons or labels for empty containers.

Tooltip panels may need conditional logic for empty containers or disease warnings.

🧪 Known Issues

~180 compile errors due to missing fields or outdated references (FIXED).

Some prefab slots may fail to inject without updated IInjectableItem logic.

Tooltip panels may misrepresent disease risk or container state.

🧙‍♂️ Mythic Checkpoints

🧿 “Registry Reforged” — Gear database now overwrites echoes, not ignores them

🧬 “Archetype Obeys” — Injection path restored via persistent memory

🧱 “Prefab Integrity Confirmed” — No nulls, no ghosts, no broken slots

🧠 “Tools Tab Ritual” — Gear registry now summoned via top-level menu

🧪 “Meat Tier Unlocked” — Species × Cooking × Stat × Disease schema now active

🧹 “Slot Finality” — All consumed items now vanish with full grid refresh

🧠 “AI Obeys Schema” — Modular, non-repetitive, prefab-safe collaboration confirmed

React: Building an Independent Modal with createRoot

Back in 2021, when I started using React for the first time in my career, I managed modal components with conditional rendering.

```jsx
const Component = () => {
  // ...
  return (
    <div>
      {visible && <Modal onOk={handleOk} />}
    </div>
  );
};
```

This is based on the idea that a component should be rendered only when it needs to be.

Alternatively, the modal component can decide for itself whether to render, based on logic inside the component.

```jsx
const Component = () => {
  // ...
  return (
    <div>
      <Modal visible={visible} onOk={handleOk} />
    </div>
  );
};
```

Here, we don't need conditional rendering; we pass a prop that tells the modal whether to show itself.

As I dove deeper into React, I used a context provider to manage modal components so that I could simply call a hook to show modals.

```jsx
const Component = () => {
  const { showModal } = useModal();

  const handleModalOpen = () => {
    showModal({
      message: 'hello',
      onConfirm: () => {
        alert('clicked');
      },
    });
  };

  return (
    <div>
      {/*...*/}
    </div>
  );
};

const ModalProvider = ({ children }) => {
  // ...
  return (
    <ModalContext.Provider value={{ showModal }}>
      {/* The provider must render its children, or the app inside it never appears */}
      {children}
      <Modal {...modalState} />
    </ModalContext.Provider>
  );
};
```

I even wrote a separate post about this approach.

In the side project I recently started, I had to create modal components.

I didn't want to spread modal code across different places; I just wanted to call a function and have a modal render. Then an idea came to mind: render the component into a new root.

This way, we don't need extra code to mount a modal somewhere in the app tree, such as at the root. The rendering logic lives alongside the modal itself.

The implementation could be different depending on your project.

In my project, I wrote the modal component like this:

```tsx
import { useCallback, useState } from 'react';
import { createRoot } from 'react-dom/client';
import Text from '../../Text';
import Button from '../../Button';
import Input from '../../Input';

type ConfirmModalProps = {
  title: string;
  titleColor: 'red';
  message: string;
  confirmText: string;
  confirmationPhrase?: string;
  cancelText?: string;
  onConfirm: VoidFunction;
  onCancel: VoidFunction;
};

type ConfirmParameters = Omit<ConfirmModalProps, 'onConfirm' | 'onCancel'> & {
  onConfirm?: VoidFunction;
  onCancel?: VoidFunction;
};

const ConfirmModal = ({
  title,
  titleColor,
  message,
  confirmText,
  confirmationPhrase,
  cancelText = 'Cancel',
  onConfirm,
  onCancel,
}: ConfirmModalProps) => {
  const [input, setInput] = useState('');
  // If a confirmation phrase is required, keep the button disabled until it is typed exactly.
  const confirmButtonDisabled =
    confirmationPhrase !== undefined && input !== confirmationPhrase;

  return (
    <div className="absolute z-50 inset-0 bg-black/50">
      <div className="fixed top-[50%] left-[50%] translate-x-[-50%] translate-y-[-50%] rounded-lg p-6 shadow-lg w-full max-w-[calc(100%-2rem)] sm:max-w-lg bg-gray-900 border border-gray-700 flex flex-col gap-2">
        <Text size="lg" color={titleColor} className="font-bold">
          {title}
        </Text>
        <Text>{message}</Text>
        {confirmationPhrase ? (
          <Input
            className="w-full"
            placeholder={confirmationPhrase}
            onChange={(e) => setInput(e.target.value)}
          />
        ) : null}
        <div className="flex justify-end gap-2 mt-2">
          <Button color="lightGray" onClick={onCancel}>
            {cancelText}
          </Button>
          <Button
            color="red"
            varient="fill"
            onClick={onConfirm}
            disabled={confirmButtonDisabled}
          >
            {confirmText}
          </Button>
        </div>
      </div>
    </div>
  );
};

export const useConfirmModal = () => {
  const confirm = useCallback(
    ({ onConfirm, onCancel, ...params }: ConfirmParameters) => {
      // Create a container outside the app tree and give the modal its own React root.
      const tempElmt = document.createElement('div');
      document.body.append(tempElmt);
      const root = createRoot(tempElmt);

      // Unmount the root before removing its container so React can clean up
      // state and effects; removing the element alone would leak the root.
      const close = () => {
        root.unmount();
        tempElmt.remove();
      };

      const handleConfirm = () => {
        close();
        onConfirm?.();
      };

      const handleCancel = () => {
        close();
        onCancel?.();
      };

      root.render(
        <ConfirmModal
          {...params}
          onConfirm={handleConfirm}
          onCancel={handleCancel}
        />
      );
    },
    []
  );

  return {
    confirm,
  };
};
```

The main logic is here:

```tsx
export const useConfirmModal = () => {
  const confirm = useCallback(
    ({ onConfirm, onCancel, ...params }: ConfirmParameters) => {
      const tempElmt = document.createElement('div');
      document.body.append(tempElmt);
      const root = createRoot(tempElmt);

      // ...

      root.render(
        <ConfirmModal
          {...params}
          onConfirm={handleConfirm}
          onCancel={handleCancel}
        />
      );
    },
    []
  );

  return {
    confirm,
  };
};
```

It appends a new container to document.body and renders the modal into its own React root, so there is no provider or placeholder component to wire up anywhere else in the app.

All I need to do is call the function:

```tsx
const { confirm } = useConfirmModal();

const handleDeleteClick = () => {
  confirm({
    title: 'Are you absolutely sure?',
    message:
      'This action cannot be undone. This will permanently delete your account and remove your data from our servers.',
    titleColor: 'red',
    confirmationPhrase: 'delete my account',
    confirmText: 'Yes, delete my account',
  });
};
```

This modal component itself is independent.

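It might not be a good fit for every project. In particular, because the modal renders in a separate root, it cannot read context from your app's providers (theme, router, and so on). Still, I wanted to introduce it as one way to manage modal components in React.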

I hope you found it helpful.

Happy Coding!

Untitled

Check out this Pen I made!

50 Most Useful JavaScript Snippets

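```javascript
// Random integer from 0 to maxNum - 1
let randomNum = Math.floor(Math.random() * maxNum);

// True if the object has no own enumerable keys
function isEmptyObject(obj) {
  return Object.keys(obj).length === 0;
}

// Log an m:ss countdown once per second
function countdownTimer(minutes) {
  let seconds = minutes * 60;
  const interval = setInterval(() => {
    if (seconds <= 0) {
      clearInterval(interval);
      console.log("Time's up!");
    } else {
      // Pad seconds so 65 seconds prints as "1:05", not "1:5"
      console.log(`${Math.floor(seconds / 60)}:${String(seconds % 60).padStart(2, '0')}`);
      seconds--;
    }
  }, 1000);
}

// Sort a copy of an array of objects by a property (ties compare as 0)
function sortByProperty(arr, property) {
  return [...arr].sort((a, b) =>
    a[property] > b[property] ? 1 : a[property] < b[property] ? -1 : 0
  );
}

// Remove duplicates
let uniqueArr = [...new Set(arr)];

// Truncate with an ellipsis
function truncateString(str, num) {
  return str.length > num ? str.slice(0, num) + "..." : str;
}

// Capitalize the first letter of every word
function toTitleCase(str) {
  return str.replace(/\b\w/g, txt => txt.toUpperCase());
}

// Membership check
let isValueInArray = arr.includes(value);

// Reverse a string; spreading by code point keeps emoji and other surrogate pairs intact
let reversedStr = [...str].reverse().join("");

// Transform every element
let newArr = oldArr.map(item => item + 1);

// Debounce: run func only after `delay` ms pass without another call
function debounce(func, delay) {
  let timeout;
  return function (...args) {
    clearTimeout(timeout);
    timeout = setTimeout(() => func.apply(this, args), delay);
  };
}

// Throttle: run func at most once per `limit` ms, including a trailing call
function throttle(func, limit) {
  let lastFunc;
  let lastRan;
  return function (...args) {
    if (!lastRan) {
      func.apply(this, args);
      lastRan = Date.now();
    } else {
      clearTimeout(lastFunc);
      // Arrow function keeps the caller's `this`; a plain function would lose it
      lastFunc = setTimeout(() => {
        if (Date.now() - lastRan >= limit) {
          func.apply(this, args);
          lastRan = Date.now();
        }
      }, limit - (Date.now() - lastRan));
    }
  };
}

// Shallow clone (nested objects are still shared)
const cloneObject = (obj) => ({ ...obj });

// Shallow merge; obj2 wins on conflicting keys
const mergeObjects = (obj1, obj2) => ({ ...obj1, ...obj2 });

// Palindrome check ignoring case and non-alphanumeric characters
function isPalindrome(str) {
  const cleanedStr = str.replace(/[^A-Za-z0-9]/g, '').toLowerCase();
  return cleanedStr === cleanedStr.split('').reverse().join('');
}

// Count occurrences of each value
const countOccurrences = (arr) =>
  arr.reduce((acc, val) => {
    acc[val] = (acc[val] || 0) + 1;
    return acc;
  }, {});

// Day of the year (1 to 366); UTC avoids off-by-one errors around DST changes
const dayOfYear = date =>
  (Date.UTC(date.getFullYear(), date.getMonth(), date.getDate()) -
    Date.UTC(date.getFullYear(), 0, 0)) / 86400000;

// Remove duplicates (function form)
const uniqueValues = arr => [...new Set(arr)];

const degreesToRads = deg => (deg * Math.PI) / 180;

// Run fn after the current call stack has cleared
const defer = (fn, ...args) => setTimeout(fn, 1, ...args);

const flattenArray = arr => arr.flat(Infinity);

const randomItem = arr => arr[Math.floor(Math.random() * arr.length)];

const capitalize = str => str.charAt(0).toUpperCase() + str.slice(1);

// Spreading very large arrays can exceed the engine's argument limit
const maxVal = arr => Math.max(...arr);
const minVal = arr => Math.min(...arr);

// Unbiased Fisher-Yates shuffle on a copy (sorting with a random comparator is biased)
function shuffleArray(arr) {
  const result = [...arr];
  for (let i = result.length - 1; i > 0; i--) {
    const j = Math.floor(Math.random() * (i + 1));
    [result[i], result[j]] = [result[j], result[i]];
  }
  return result;
}

const removeFalsy = arr => arr.filter(Boolean);

// Inclusive integer range: range(1, 3) -> [1, 2, 3]
const range = (start, end) => Array.from({ length: end - start + 1 }, (_, i) => i + start);

// Shallow, element-by-element equality
const arraysEqual = (a, b) =>
  a.length === b.length && a.every((val, i) => val === b[i]);

const isEven = num => num % 2 === 0;
const isOdd = num => num % 2 !== 0;

// Remove all whitespace
const removeSpaces = str => str.replace(/\s+/g, '');

function isJSON(str) {
  try {
    JSON.parse(str);
    return true;
  } catch {
    return false;
  }
}

// JSON round-trip drops functions and undefined and turns Dates into strings;
// prefer structuredClone(obj) where it is available
const deepClone = obj => JSON.parse(JSON.stringify(obj));

function isPrime(num) {
  if (num <= 1) return false;
  for (let i = 2; i <= Math.sqrt(num); i++) {
    if (num % i === 0) return false;
  }
  return true;
}

const factorial = num =>
  num <= 1 ? 1 : num * factorial(num - 1);

const timestamp = Date.now();

// YYYY-MM-DD in UTC (can differ from the local calendar date)
const formatDate = date => date.toISOString().split('T')[0];

// Version-4 style UUID from Math.random (not cryptographically secure;
// prefer crypto.randomUUID() where available)
const uuid = () =>
  'xxxxxxxx-xxxx-4xxx-yxxx-xxxxxxxxxxxx'.replace(/[xy]/g, c => {
    const r = Math.random() * 16 | 0;
    const v = c === 'x' ? r : (r & 0x3 | 0x8);
    return v.toString(16);
  });

// True for own and inherited keys; use Object.hasOwn(obj, key) for own keys only
const hasProp = (obj, key) => key in obj;

const getQueryParams = url =>
  Object.fromEntries(new URLSearchParams(new URL(url).search));

// Escape the five HTML-sensitive characters
const escapeHTML = str =>
  str.replace(/[&<>'"]/g, tag => ({
    '&': '&amp;',
    '<': '&lt;',
    '>': '&gt;',
    "'": '&#39;',
    '"': '&quot;',
  }[tag]));

// Reverse of escapeHTML for the same five entities
const unescapeHTML = str =>
  str.replace(/&(amp|lt|gt|#39|quot);/g, match => ({
    '&amp;': '&',
    '&lt;': '<',
    '&gt;': '>',
    '&#39;': "'",
    '&quot;': '"',
  }[match]));

const sleep = ms => new Promise(res => setTimeout(res, ms));

const sumArray = arr => arr.reduce((a, b) => a + b, 0);

// Returns NaN for an empty array
const avgArray = arr => arr.reduce((a, b) => a + b, 0) / arr.length;

const intersection = (arr1, arr2) => arr1.filter(x => arr2.includes(x));

const difference = (arr1, arr2) => arr1.filter(x => !arr2.includes(x));

const allEqual = arr => arr.every(val => val === arr[0]);

// Random #rrggbb color; padStart keeps six digits and 0x1000000 lets #ffffff occur
const randomHexColor = () =>
  '#' + Math.floor(Math.random() * 0x1000000).toString(16).padStart(6, '0');
```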

The Frozen Collection Vault: frozenset and Set Immutability

Timothy's membership registry had transformed how the library tracked visitors and members, but Professor Williams arrived with a problem that would reveal a fundamental limitation of his set system.

"I need to catalog research groups," Professor Williams explained, "where each group is identified by its members. Some groups overlap—Alice and Bob form one research pair, Bob and Charlie form another. I want to use the member sets as keys in my catalog."

Timothy confidently tried to implement her request:

```python
research_catalog = {}
group_one = {"Alice", "Bob"}
group_two = {"Bob", "Charlie"}
research_catalog[group_one] = "Quantum Physics Project"
# TypeError: unhashable type: 'set'
```

The system rejected his attempt. Margaret appeared and smiled knowingly. "You've discovered that regular sets can't serve as dictionary keys. They're mutable—you can add or remove members after creation. Remember the immutability rule?"

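Timothy recalled his earlier lesson: only hashable objects, which in practice means immutable ones, could be dictionary keys. If a set could be modified after being used as a key, its hash would no longer match the slot where the dictionary stored it, and lookups would silently fail.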

Margaret led Timothy to a specialized vault labeled "Frozen Collections." Inside were sets that had been permanently sealed—no additions, no removals, no modifications of any kind.

```python
research_catalog = {}

# Create frozen sets - permanently immutable
group_one = frozenset({"Alice", "Bob"})
group_two = frozenset({"Bob", "Charlie"})

# These work as dictionary keys!
research_catalog[group_one] = "Quantum Physics Project"
research_catalog[group_two] = "Mathematics Collaboration"

print(research_catalog[group_one])  # "Quantum Physics Project"
```

The frozen sets looked and behaved like regular sets for all read operations, but they were locked forever. This immutability made them hashable and suitable as dictionary keys.

Timothy learned that converting between regular and frozen sets was straightforward:

```python
# Regular set - mutable
active_members = {"Alice", "Bob", "Charlie"}
active_members.add("David")  # This works

# Convert to frozen - now immutable
permanent_members = frozenset(active_members)
permanent_members.add("Eve")  # AttributeError: 'frozenset' object has no attribute 'add'

# Convert back to regular if needed
modifiable_again = set(permanent_members)
modifiable_again.add("Eve")  # This works
```

The frozen sets supported all the same operations as regular sets—union, intersection, difference—but rejected any attempt to modify their contents.

Professor Williams revealed her true challenge: "I need sets of sets. Each research department contains multiple research groups."

Timothy tried the obvious approach with regular sets:

```python
quantum_dept = {
    {"Alice", "Bob"},
    {"Charlie", "David"},
}
# TypeError: unhashable type: 'set'
```

Sets can only contain hashable items, and regular sets aren't hashable. Margaret showed the solution:

```python
quantum_dept = {
    frozenset({"Alice", "Bob"}),
    frozenset({"Charlie", "David"}),
    frozenset({"Eve", "Frank"}),
}

# Check if a specific group exists in the department
target_group = frozenset({"Alice", "Bob"})
group_exists = target_group in quantum_dept  # True (average O(1) hash lookup)
```

Frozen sets enabled hierarchical membership structures that would have been impossible with regular sets.

Timothy discovered several scenarios where frozen sets were essential:

Caching function results based on set inputs:

```python
cache = {}

def analyze_group(members):
    frozen_members = frozenset(members)
    if frozen_members not in cache:
        # Expensive computation here
        result = len(frozen_members) * 10
        cache[frozen_members] = result
    return cache[frozen_members]

# Works with different argument orders
analyze_group(["Alice", "Bob"])  # Computes result
analyze_group(["Bob", "Alice"])  # Uses cached result - same frozenset
```

Tracking unique combinations:

```python
seen_pairs = set()

def record_interaction(person_a, person_b):
    pair = frozenset({person_a, person_b})
    if pair in seen_pairs:
        return "Already recorded"
    seen_pairs.add(pair)
    return "New interaction recorded"

record_interaction("Alice", "Bob")  # "New interaction recorded"
record_interaction("Bob", "Alice")  # "Already recorded" - same pair
```

Graph edges as dictionary keys:

```python
edge_weights = {}

# Store weights for undirected graph edges
edge_weights[frozenset({"Node A", "Node B"})] = 5.2
edge_weights[frozenset({"Node B", "Node C"})] = 3.1

# Retrieve weight regardless of node order
weight = edge_weights[frozenset({"Node B", "Node A"})]  # 5.2
```

Margaret showed Timothy that frozen sets supported all read operations but rejected modifications:

```python
regular_set = {"Alice", "Bob", "Charlie"}
frozen_set = frozenset({"Alice", "Bob", "Charlie"})

# Operations that work on both
common = regular_set & frozen_set    # Intersection works
combined = regular_set | frozen_set  # Union works
is_member = "Alice" in frozen_set    # Membership check works

# Operations only regular sets support
regular_set.add("David")     # Works
frozen_set.add("David")      # AttributeError
regular_set.remove("Alice")  # Works
frozen_set.remove("Alice")   # AttributeError
```

The frozen sets provided all the power of set operations while guaranteeing immutability.
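One detail worth knowing when mixing the two types: the Python documentation notes that binary operations mixing `set` and `frozenset` return the type of the first operand, and a set and a frozen set with the same members compare equal. A quick check, with example names chosen for illustration:

```python
regular = {"Alice", "Bob"}
frozen = frozenset({"Bob", "Charlie"})

print(type(regular | frozen).__name__)  # set
print(type(frozen | regular).__name__)  # frozenset
print(regular & frozen)                 # {'Bob'}
print({"Alice", "Bob"} == frozenset({"Alice", "Bob"}))  # True
```

So if a result must stay hashable, put the frozen set on the left or wrap the result in `frozenset(...)`.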

Timothy asked whether frozen sets were slower due to their immutability constraints. Margaret explained: "Not in any way you'd notice. Membership tests and set operations cost about the same for both types. The real difference is hashing: because a frozen set can never change, CPython computes its hash the first time it's needed and then caches it. Regular sets have no hash at all, because a value that can change can't safely serve as a key."

```python
# Frozen sets compute their hash on first use, then reuse the cached value (CPython)
frozen = frozenset(range(1000))
hash(frozen)  # Computed once
hash(frozen)  # Returned from the cached value

# Regular sets can't be hashed at all
regular = set(range(1000))
hash(regular)  # TypeError: unhashable type: 'set'
```

This hash caching keeps repeated lookups cheap when a frozen set is itself used as a dictionary key or stored inside another set.

Through mastering frozen sets, Timothy learned key principles:

Use frozenset when immutability is required: Dictionary keys, set elements, or anywhere hashability is needed.

Convert freely between types: Use regular sets for building collections, freeze them when you need immutability.

Enable nested collections: Frozen sets allow sets of sets and other hierarchical structures.

Leverage hash caching: A frozen set's hash is computed once and reused, so repeated lookups with it as a key stay cheap.

Choose based on mutability needs: Regular sets for dynamic membership, frozen sets for fixed groups.

Timothy's exploration of frozen sets revealed that immutability wasn't a limitation—it was a feature that unlocked new capabilities. By making sets unchangeable, Python made them usable as dictionary keys and set elements, enabling elegant solutions to problems that would otherwise require complex workarounds.

The secret life of Python sets included this immutable variant, proving that sometimes the most powerful tool is the one that promises never to change.

Aaron Rose is a software engineer and technology writer at tech-reader.blog and the author of Think Like a Genius.

IGN: Maid of Sker VR - Official Announcement Trailer | Horror Game Awards Showcase 2025

Maid of Sker VR drops you into a deserted 1898 hotel for a first-person survival horror thrill ride. Armed only with a defensive sound device, you must sneak, strategize, and stay silent to unravel a twisted supernatural mystery.

Launching November 2025 on PlayStation VR2 and Meta Quest, this VR version from Wales Interactive cranks up the immersion with its atmospheric setting and nerve-jangling tension.


Cracking the Code: Decoding LLM Thought with Vector Symbolic Bridges


Large Language Models are amazing, but let's face it: they're black boxes. We feed them prompts, they spit out responses, but we often have no idea how they arrived at those conclusions. Wouldn't it be incredible if we could peek inside and understand the actual concepts an LLM is juggling?

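Here's the core idea: instead of directly interpreting the raw numerical vectors inside an LLM, what if we could map these vectors onto symbolic representations? Vector Symbolic Architectures (VSAs) offer a way to do just that. A VSA represents each symbol as a high-dimensional vector and combines symbols with a small set of operations, typically binding (to associate a role with a filler) and bundling (to superimpose several items), so a composite vector can be decoded back into the symbols it contains. Think of VSAs as a Rosetta Stone that translates the LLM's vector space into something human-readable, a structured representation of its "thoughts." We can then use standard symbolic reasoning techniques to understand how it's processing information.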

Imagine a painter's palette. Each color (vector) in the LLM's representation space gets assigned a name and relationship to other colors (symbols) via a VSA. Now, instead of seeing raw numbers, you see the composition of colors used for a particular task - giving you insight into what the LLM prioritized.
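For intuition about what decoding vectors into symbols means, here is a toy VSA in pure Python using random bipolar hypervectors (a MAP-style architecture): binding is element-wise multiplication, bundling is a majority vote, and decoding is a nearest-neighbour lookup in a codebook. It does not touch a real LLM; the symbols, the dimension, and the record structure are assumptions chosen only to show the mechanics a probing method would build on.

```python
import random
from typing import Dict, List

D = 4096  # high dimension keeps random hypervectors nearly orthogonal
rng = random.Random(0)


def random_hv() -> List[int]:
    return [rng.choice((-1, 1)) for _ in range(D)]


def bind(a: List[int], b: List[int]) -> List[int]:
    # Element-wise product: dissimilar to both inputs, and its own inverse.
    return [x * y for x, y in zip(a, b)]


def bundle(*vs: List[int]) -> List[int]:
    # Element-wise majority vote (ties go to +1): similar to every input.
    return [1 if sum(col) >= 0 else -1 for col in zip(*vs)]


def similarity(a: List[int], b: List[int]) -> float:
    return sum(x * y for x, y in zip(a, b)) / D  # cosine similarity for bipolar vectors


def cleanup(v: List[int], codebook: Dict[str, List[int]]) -> str:
    return max(codebook, key=lambda name: similarity(v, codebook[name]))


# Hypothetical symbols: two roles and three fillers.
roles = {name: random_hv() for name in ("SUBJECT", "OBJECT")}
fillers = {name: random_hv() for name in ("cat", "dog", "ball")}

# Encode "cat chases ball" as a bundle of role-filler bindings.
record = bundle(bind(roles["SUBJECT"], fillers["cat"]), bind(roles["OBJECT"], fillers["ball"]))

# Unbinding with a role yields a noisy filler; cleanup names the closest symbol.
print(cleanup(bind(record, roles["SUBJECT"]), fillers))  # cat
print(cleanup(bind(record, roles["OBJECT"]), fillers))   # ball
```

The interpretability proposal amounts to learning a mapping from an LLM's hidden states into a space like this one, so the same unbind-and-cleanup steps can name the concepts a representation contains.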

Benefits of Using VSAs for LLM Interpretability:

- Human-Readable Concepts: Move beyond opaque vectors to symbolic representations that developers can readily understand.

- Targeted Probing: Focus your analysis on specific concepts or reasoning patterns within the LLM.

- Failure Detection: Identify when the LLM's internal representation deviates from expected patterns, indicating potential errors or biases.

- Compositional Understanding: See how the LLM combines different concepts to arrive at a final answer.

- Model Comparison: Develop a basis to objectively compare internal workings of different LLM architectures.

- Enhanced Debugging: Use the symbolic representations to diagnose and fix issues in the LLM's reasoning process.

One major implementation challenge is efficiently mapping the high-dimensional vector space of LLMs to a manageable symbolic space. Careful feature selection and dimensionality reduction are crucial. A novel application could be using VSA decoding to create adaptive prompts – prompts that adjust in real-time based on the LLM’s internal state.

Ultimately, bridging the gap between the numeric world of neural networks and the symbolic world of human understanding is paramount for building trustworthy and transparent AI. Vector Symbolic Architectures offer a powerful tool for achieving this goal. By understanding how LLMs represent and manipulate knowledge, we can build safer, more reliable, and more explainable AI systems.


Securing Container Registries: Best Practices for Safe Image Management

Container registries are a vital part of any DevOps pipeline, acting as the central repository where container images are stored, shared, and pulled into production environments. Yet, they’re often overlooked when it comes to comprehensive security planning.

As the use of containers becomes more widespread, attackers have increasingly turned their attention to poorly secured registries to inject malicious code, steal credentials, or distribute compromised images. Without proper security measures in place, container registries can become the weakest link in your application delivery chain.

Every container that runs in your environment typically originates from a registry. If that image source is tampered with or compromised, malicious payloads can silently propagate across development, testing, and production systems. Whether you're using public repositories like Docker Hub or private registries hosted in the cloud, securing this image supply chain is critical to preventing downstream attacks.

Several key vulnerabilities are frequently exploited by attackers:

- Unauthorized Access: Weak access controls allow bad actors to read, push, or delete container images, potentially injecting harmful versions or wiping critical builds.

- Image Spoofing: Attackers upload images with names identical to trusted repositories, tricking developers into pulling and using tainted images.

- Outdated and Vulnerable Images: Registries often host old images with known vulnerabilities that are still being used in deployments due to lack of scanning or version control.

1. Enable Strong Authentication and Access Controls

Avoid using anonymous access to registries. Integrate registry authentication with your existing identity provider and enforce multi-factor authentication (MFA) where possible. Implement role-based access controls (RBAC) to restrict push/pull capabilities based on team responsibilities.

2. Use Signed and Verified Images

Implement image signing tools like Notary (Docker Content Trust) or Cosign to ensure images haven't been tampered with. By verifying digital signatures before deployment, you reduce the risk of using manipulated or malicious images.
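Signing builds on content addressing: a registry identifies an image by a `sha256:` digest of its manifest bytes, so pinning deployments by digest rather than by a mutable tag guarantees you pull exactly the content you reviewed. The sketch below shows only that integrity check, assuming you already hold the raw manifest bytes and a pinned digest. It is not a substitute for signature verification with a tool like Cosign, which additionally proves who published the image; the function names are hypothetical.

```python
import hashlib


def oci_digest(blob: bytes) -> str:
    """Compute a registry-style content digest ("sha256:<hex>") for raw bytes."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


def verify_pinned(blob: bytes, pinned_digest: str) -> bool:
    """True only if the content matches the digest pinned in deployment config."""
    algorithm, _, expected = pinned_digest.partition(":")
    if algorithm != "sha256" or not expected:
        raise ValueError(f"unsupported digest: {pinned_digest!r}")
    return hashlib.sha256(blob).hexdigest() == expected.lower()


manifest = b'{"schemaVersion": 2}'
pinned = oci_digest(manifest)
print(verify_pinned(manifest, pinned))         # True
print(verify_pinned(manifest + b" ", pinned))  # False: any byte change alters the digest
```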

3. Automate Vulnerability Scanning

Configure your registry to automatically scan all new image uploads for known vulnerabilities using tools like Clair, Trivy, or Aqua. Regular scans help identify outdated libraries, insecure configurations, and base image flaws before images are pushed downstream.

4. Clean Up and Expire Stale Images

Old and unmaintained images are often the easiest attack vector. Define lifecycle policies to automatically remove outdated images and limit the number of active image versions stored in your registry.
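Registries implement retention differently, and many offer built-in lifecycle or cleanup policies, so the following is only a registry-agnostic Python sketch of the decision logic. It assumes each image is described by a tag and a push timestamp: keep the N most recent images plus anything still referenced by a deployment, and expire the rest once they exceed a maximum age. `ImageRecord` and `select_expired` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Iterable, List, Set


@dataclass(frozen=True)
class ImageRecord:
    tag: str
    pushed_at: datetime


def select_expired(images: Iterable[ImageRecord], keep_latest: int, max_age: timedelta,
                   in_use: Set[str], now: datetime) -> List[str]:
    """Return tags that are safe to delete under the policy described above."""
    newest_first = sorted(images, key=lambda img: img.pushed_at, reverse=True)
    expired: List[str] = []
    for rank, img in enumerate(newest_first):
        if rank < keep_latest or img.tag in in_use:
            continue  # always keep the newest N and anything still deployed
        if now - img.pushed_at > max_age:
            expired.append(img.tag)
    return expired


now = datetime(2025, 10, 1, tzinfo=timezone.utc)
images = [ImageRecord(f"v{i}", now - timedelta(days=d))
          for i, d in [(5, 1), (4, 10), (3, 40), (2, 100), (1, 200)]]
print(select_expired(images, keep_latest=2, max_age=timedelta(days=30), in_use={"v2"}, now=now))
```

Running the selection as a dry run first, and only then deleting, is a sensible safeguard against removing an image a forgotten workload still pulls.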

5. Encrypt and Isolate Registry Storage

Ensure that your registry data is encrypted at rest and in transit. For added protection, isolate registry access to internal networks and limit public exposure where possible. Use HTTPS exclusively and manage TLS certificates securely.

Registry security is just one piece of the container security puzzle. To fully secure your containerized infrastructure, you must also protect applications during execution. That’s why it’s essential to implement solutions that monitor for threats during actual container operation, such as container runtime security, which detects attacks that slip past build-time defenses.

By securing your container registries, you reduce the risk of supply chain attacks and establish a stronger foundation for your overall container security strategy. Prevention begins at the source—make sure your registry isn’t an open door to your entire stack.

React Concurrent Mode Deep Dive: Complete Series (You Do Not Know React Yet)

Know WHY — Let AI Handle the HOW 🤖

A three-part series that takes you from surface-level understanding to deep architectural insights into React's concurrent features.

This isn't another tutorial on "how to use hooks." This series reveals the WHY behind React's concurrent rendering - the architectural decisions, low-level mechanisms, and mental models that transform how you build React applications.

Part 1: React Concurrent Mode Isn't Magic - It's Just Really Smart Priorities

The Foundation: Understanding Priority-Based Rendering

Learn the core mental model that makes everything else click:

- Why React treats updates with different urgency levels

- The critical insight: "It's about the VALUE, not the component"

- How useDeferredValue tells React which work can wait

- Real-world search and dashboard examples with exact timelines

Key Takeaway: React doesn't detect slow components - YOU assign priorities by choosing which values to defer.
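To make "it's about the VALUE" concrete, here is a toy model in plain JavaScript. It is not how React implements useDeferredValue, and the function and method names are made up. In a real component the equivalent would be const deferredQuery = useDeferredValue(query), with deferredQuery passed to the slow list.

```javascript
// Toy model (NOT React's implementation): an urgent value changes on every
// keystroke, while a deferred copy only catches up when there is idle time.
function createDeferredModel() {
  const state = { query: "", deferredQuery: "" };
  const renderedWith = []; // values the expensive list actually rendered with

  return {
    state,
    renderedWith,
    // Urgent update: applied immediately, like a controlled input's value.
    type(text) {
      state.query = text;
    },
    // Idle time: the deferred copy jumps to the latest urgent value,
    // so intermediate keystrokes never reach the expensive render.
    idle() {
      if (state.deferredQuery !== state.query) {
        state.deferredQuery = state.query;
        renderedWith.push(state.deferredQuery);
      }
    },
  };
}

const model = createDeferredModel();
model.type("r");
model.type("re");
model.type("rea"); // fast typing: no idle time between keystrokes
model.idle();
model.type("reac");
model.type("react");
model.idle();

console.log(model.state.query); // react
console.log(model.renderedWith); // [ 'rea', 'react' ]
```

The input tracks every keystroke, but the expensive render only sees "rea" and "react". You chose that behavior by deciding which value to defer, not by marking a component as slow.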

Part 2: React's Fiber Architecture - The Secret Behind Interruptible Rendering

The Implementation: How React Actually Pauses Work

Dive into the low-level architecture that makes concurrent rendering possible:

- What is a Fiber and why linked lists matter

- The brilliant double buffering system (current + work-in-progress trees)

- Priority lanes explained with binary operations

- Render phase (interruptible) vs Commit phase (atomic)

Key Takeaway: React maintains two complete trees and can throw away work-in-progress without affecting what's on screen.
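The "priority lanes explained with binary operations" idea from Part 2 fits in a few lines. This is a toy: the lane names and bit values below are illustrative, and React's real constants differ and have changed between versions. The operations follow the style of React's lane helpers, including isolating the lowest set bit with lanes & -lanes.

```javascript
// Illustrative lanes: one bit each, lower bit = higher priority.
const NoLanes = 0b0000;
const SyncLane = 0b0001; // e.g. a click
const InputLane = 0b0010; // e.g. continuous input
const DefaultLane = 0b0100; // e.g. a normal state update
const TransitionLane = 0b1000; // e.g. work inside startTransition

// All pending work lives in a single integer.
const mergeLanes = (a, b) => a | b;
const removeLanes = (set, subset) => set & ~subset;
const includesSomeLane = (a, b) => (a & b) !== NoLanes;

// Two's-complement trick: x & -x keeps only the lowest set bit,
// which here is the most urgent pending lane.
const getHighestPriorityLane = (lanes) => lanes & -lanes;

let pending = NoLanes;
pending = mergeLanes(pending, TransitionLane); // a transition starts
pending = mergeLanes(pending, SyncLane); // then the user clicks

console.log(getHighestPriorityLane(pending) === SyncLane); // true: the click goes first
pending = removeLanes(pending, SyncLane); // click work finished
console.log(getHighestPriorityLane(pending) === TransitionLane); // true
console.log(includesSomeLane(pending, InputLane)); // false
```

Using bits means merging, checking, and picking the most urgent work are each a single integer operation, which is cheap enough to do on every update.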

Part 3: Time Slicing in React - How Your UI Stays Butter Smooth

The Execution: The 5ms Frame Budget Secret

Understand exactly when and why React pauses:

- The 60fps problem and frame budgets

- React's 5ms time slice rule

- Frame-by-frame breakdown with millisecond precision

- Suspense integration with concurrent features

Key Takeaway: React works in 5ms chunks, yielding to the browser after each chunk to maintain 60fps and responsive UI.
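The 5ms rule can be sketched as a work loop that checks the clock before each unit of work, in the spirit of React's concurrent loop, which keeps calling performUnitOfWork while shouldYield() returns false. This is a simplified sketch: the clock is injected so the output is deterministic, and a real scheduler would use performance.now() and resume in a later macrotask (React's scheduler uses MessageChannel in browsers).

```javascript
const SLICE_MS = 5; // time budget per slice, as described above

// Build a slice runner around an injectable clock function.
function createWorkLoop(now) {
  return function runSlice(queue) {
    const start = now();
    const shouldYield = () => now() - start >= SLICE_MS;
    let done = 0;
    // Check the clock BEFORE each unit; a unit already running can't be interrupted.
    while (queue.length > 0 && !shouldYield()) {
      const unit = queue.shift();
      unit(); // one unit of render work (think: one fiber)
      done++;
    }
    return { done, remaining: queue.length };
  };
}

// Fake clock: each unit of work "takes" 2ms.
let fakeTime = 0;
const runSlice = createWorkLoop(() => fakeTime);
const queue = Array.from({ length: 7 }, () => () => {
  fakeTime += 2;
});

const slices = [];
while (queue.length > 0) {
  slices.push(runSlice(queue).done);
  // Between slices the browser would get a chance to handle input and paint.
}
console.log(slices); // [ 3, 3, 1 ]
```

Notice the first slice actually ran for 6ms, not 5: the loop can only yield between units of work, so a single slow component still blocks for its full duration. That is why breaking rendering into many small fibers matters.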

You'll get the most value if you:

- ✅ Know basic React (hooks, components, state)

- ✅ Want to understand the "why" behind React's design

- ✅ Are curious about performance optimization

- ✅ Want to build truly responsive UIs

- ✅ Like deep technical explanations with analogies

This series is NOT for you if:

- ❌ You just want a quick "how-to" tutorial

- ❌ You're brand new to React

- ❌ You prefer surface-level explanations

Most tutorials teach you:

- "Use useDeferredValue for expensive updates"

- "Use useTransition for navigation"

- Here's the API, good luck!

This series teaches you:

- WHY React needs concurrent features

- HOW the architecture enables interruptible rendering

- WHEN React actually pauses and resumes work

- WHAT happens at the millisecond level

Understanding the "why" transforms you from someone who uses React to someone who thinks in React.

After completing this series, you'll be able to:

- Diagnose Performance Issues

  - Understand why your UI feels laggy

  - Know which concurrent feature to use

  - Think in terms of priority lanes and fiber trees

- Build Better UIs

  - Create truly responsive search experiences

  - Handle tab switching without freezing

  - Keep animations smooth during heavy work

- Debug with Confidence

  - Understand exactly when components re-render

  - Know why some updates feel instant and others don't

  - Trace through fiber trees mentally

- Make Architectural Decisions

  - Choose between useDeferredValue and useTransition

  - Understand the trade-offs of concurrent features

  - Design data flows with priorities in mind

Recommended reading order:

- Start with Part 1 - Get the core mental model right

- Then Part 2 - Understand the implementation details

- Finish with Part 3 - See the complete picture with precise timing

Each post builds on the previous one, but can also stand alone if you're already familiar with some concepts.

If you enjoyed this deep dive, check out my other posts that explain the "why" behind React patterns:

- React's Key Prop Isn't About Lists - It's About Component Identity

- What If React Had No useEffect?

- Don't Sync State, Derive It!

- Inside React Query - What's Really Going On

Remember: Know the WHY behind React's design decisions, and the HOW becomes a natural extension of that understanding.

Why Value-Sensitive Design Is My North Star Now

I didn’t always talk explicitly about values in my design process. Early in my career, I treated ethics, inclusion, privacy — all of that — as constraints or “nice to haves” you layered in at the end. Over time, though, I’ve come to believe they must be foundational. In fact, I now see value-sensitive design (VSD) as a kind of compass that keeps me anchored in what really matters: creating technology that respects people.

What is value-sensitive design?

At its core, VSD is a design methodology that integrates human values systematically throughout the design process. It encourages you to ask: Which values are at stake? Who are the stakeholders? How might design decisions privilege or harm them?

Because values are rarely obvious or universal, VSD is inherently multi-layered. It asks us to iterate between conceptual investigations (what do users care about?), empirical investigations (how do users behave, feel, or push back?), and technical investigations (how can our systems support or thwart values?), all in a loop.

Why It’s Urgent in 2025

We’re at a moment where interfaces, AI systems, and immersive platforms are so pervasive that the stakes of design decisions feel existential. As technology progresses, the hidden value trade-offs are becoming more visible.

Opaque AI influence — Interfaces personalize so deeply now that decisions are sometimes invisible to users. When the logic is opaque, how do users trust or contest those decisions?

Data & privacy flux — Our designs often require data to function, but more and more users are wary of what’s collected, how it’s used, and who owns it.

Diverse contexts of use — A “one size fits all” design is more dangerous than ever. What feels seamless in one culture or environment might feel invasive or alien in another.

In a sense, VSD feels like a necessary antidote to the “build fast, iterate later” culture. If we skip value thinking early, we end up retrofitting or, worse, inflicting harm.

How I Use Value-Sensitive Design in My Work

I’ve adapted VSD to fit my own process. Here are a few practices I’ve integrated (and refined, sometimes painfully):

- Value Mapping Before Wireframes

Before sketching anything, I explicitly map values in tension — transparency vs. simplicity, convenience vs. consent, efficiency vs. reflection. I sketch “value maps” that visualize how design decisions might push users one way or another.

This map becomes my north star during design reviews. Whenever a team member suggests a shortcut, I ask: “Which side of our value map does this lean toward?”

- Stakeholder Interviews + Value Probes

Beyond standard user interviews, I introduce probes (surveys, scenario exercises, conceptual cards) to surface hidden values. I ask: What makes you feel in control? What feels invasive? The answers often surprise me.

These probes help me see values users care about — sometimes more than features themselves.

- Value Testing

In usability tests, I don’t just ask “Can you complete this task?” I also ask: Did you feel respected? Did anything feel manipulative? Would you change permissions or opt out of any part of this flow?

I compare versions of flows not just on efficiency, but on how they score in terms of trust, clarity, and comfort.

- Technical Support for Values

Design decisions should be paired with technical mechanisms that enforce or protect values. If consent is a value, I might bake in revocable data access or visible toggles rather than hidden defaults. If inclusivity is a value, I make sure typography scales, alt text is robust, and motion can be reduced.

- Iteration & Reflection

Values shift. Contexts evolve. What felt like a good balance six months ago might feel off today (e.g., in light of news about algorithmic bias or data breaches). I revisit value maps and audits regularly — not just when features get added.
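The "Technical Support for Values" practice above can be made tangible with a small sketch of consent as a data structure: everything off by default, every grant revocable, and the full state available for visible toggles. The class, method names, and purposes are hypothetical, meant only to illustrate the idea rather than any particular framework.

```javascript
// Hypothetical consent store: every purpose is OFF until the user opts in,
// and every grant can be revoked later.
class ConsentStore {
  constructor(purposes) {
    // No hidden defaults: nothing is granted at construction time.
    this.grants = new Map(purposes.map((purpose) => [purpose, false]));
  }

  set(purpose, allowed) {
    if (!this.grants.has(purpose)) {
      throw new Error(`Unknown purpose: ${purpose}`);
    }
    this.grants.set(purpose, allowed);
  }

  grant(purpose) {
    this.set(purpose, true);
  }

  // Revoking is exactly as easy as granting.
  revoke(purpose) {
    this.set(purpose, false);
  }

  // Data access checks go through here, so a revocation takes effect immediately.
  allows(purpose) {
    return this.grants.get(purpose) === true;
  }

  // Everything a settings screen needs to render visible toggles.
  summary() {
    return Object.fromEntries(this.grants);
  }
}

const consent = new ConsentStore(["analytics", "personalization"]);
console.log(consent.allows("analytics")); // false: off by default
consent.grant("personalization");
console.log(consent.allows("personalization")); // true
consent.revoke("personalization");
console.log(consent.summary()); // { analytics: false, personalization: false }
```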

What I’ve Learned (Good & Hard)

Over time, VSD has transformed how I see what “good design” means — but it hasn't been easy. Here are a few lessons I’ve gathered:

Trade-offs are unavoidable. You often can’t maximize all values simultaneously. The trick is to make trade-offs visible and defensible, not hidden.

Not everyone cares equally. Some stakeholders — product leads, business teams — may prioritize growth or engagement over values like privacy. Those tensions have to be surfaced and negotiated.

It can slow you down (if you let it). My early VSD efforts felt like friction. But I’ve learned to embed value thinking in smaller iterations, so it doesn’t block progress but guides it.

You need allies. VSD is easier when you have engineers, product managers, and leadership who are aligned on value principles. If design is the only voice raising these questions, you’ll feel friction.

Looking Forward

I believe that in the next few years, we’ll start to see value-aware AI, UX 3.0, and design ecosystems that aren’t just reactive to data, but proactive in upholding values. (As some researchers suggest, UX is evolving from “user-centered” to “human-AI-centered” frameworks.)

My hope is that design education, tooling, and team cultures shift so designers don’t have to be lone moral agents — value thinking becomes a shared foundation.

In the end, I design with VSD not because it looks “ethical” in marketing pamphlets, but because I want to build systems I can live with. When technology powers our lives so intimately, our values can’t live at the margins — they must live in the infrastructure.

Time Slicing in React - How Your UI Stays Butter Smooth (The Frame Budget Secret)


In Part 1, we learned about priority-based rendering. In Part 2, we explored Fiber architecture. But here's the final piece of the puzzle: How does React know WHEN to pause?

What if I told you React gives itself a strict budget of about 5ms per slice of work before yielding to the browser, and that understanding this timing mechanism is the key to building silky-smooth user interfaces?

A 60Hz screen refreshes 60 times per second. That gives you about 16.67ms (1000ms / 60) per frame to do everything:

One Frame (16.67ms):
├─ JavaScript execution (React rendering)
├─ Style calculations
├─ Layout
├─ Paint
└─ Composite

If all of this together takes longer than 16.67ms:
→ The frame gets dropped
→ The UI feels janky
→ The user notices lag
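The frame budget is simple arithmetic, and it shrinks on faster displays. Here is a quick sketch, assuming the 5ms React slice used throughout this series; the 120Hz case is an added example to show how much tighter the budget gets.

```javascript
const REACT_SLICE_MS = 5; // slice size discussed in this series

// Milliseconds available per frame at a given refresh rate.
const frameBudgetMs = (refreshHz) => 1000 / refreshHz;

for (const hz of [60, 120]) {
  const budget = frameBudgetMs(hz);
  const left = budget - REACT_SLICE_MS;
  console.log(`${hz}Hz: ${budget.toFixed(2)}ms per frame, ${left.toFixed(2)}ms left after one 5ms slice`);
}
// 60Hz: 16.67ms per frame, 11.67ms left after one 5ms slice
// 120Hz: 8.33ms per frame, 3.33ms left after one 5ms slice
```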

The Challenge: How do you render expensive components without dropping frames?

Modern games run at 60fps by:

- Doing critical work (player movement, collisions)

- Checking the clock: "Do I have time left?"

- If yes, do nice-to-have work (background animations)

- If no, pause and continue next frame

React applies the same idea!

function gameLoop() {
  const frameDeadline = performance.now() + 16.67;

  // Critical: Player movement
  updatePlayerPosition();

  // Check time remaining
  if (performance.now() < frameDeadline - 5) {
    // Nice-to-have: Background details
    renderDistantTrees();
  } else {