BLOG POST

AI Daily

Reddit Mods Sued by YouTuber Ethan Klein Fight Efforts to Unmask Them

Klein has attempted to subpoena Discord and Reddit for information that would reveal the identity of moderators of a subreddit critical of him. The moderators' lawyers fear their clients will be physically attacked if the subpoenas go through.

Landlords Demand Tenants’ Workplace Logins to Scrape Their Paystubs

Screenshots shared with 404 Media show tenant screening services ApproveShield and Argyle taking much more data than they need. “Opt-out means no housing.”

How Ruby Went Off the Rails

What happened to RubyGems, Bundler, and the Open Source drama that controls the internet infrastructure.

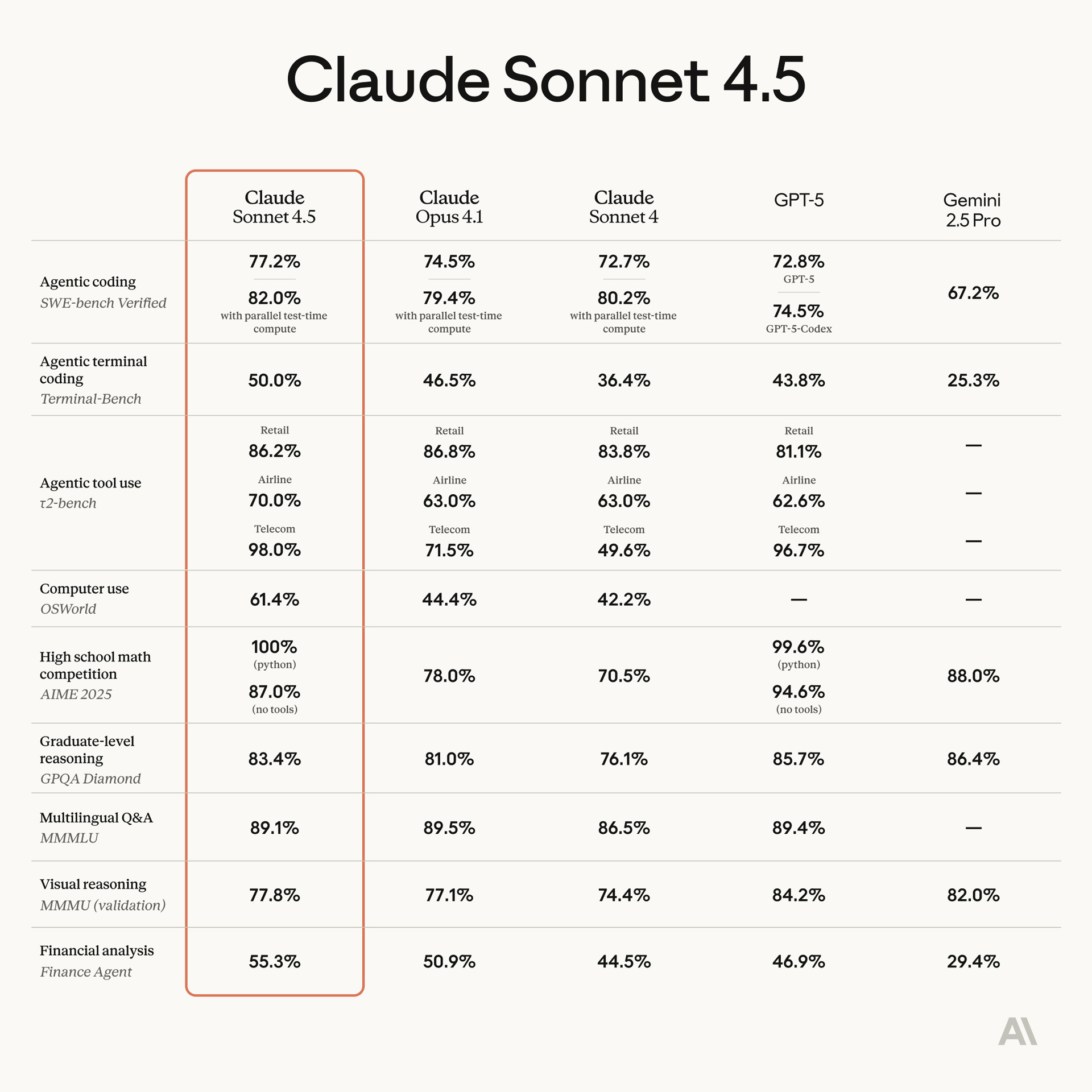

Anthropic Launches Claude Sonnet 4.5

The model was released under the vendor's AI Safety Level 3 protection, which helps prevent dangerous inputs and outputs.

Industrial Robot Demand Doubled in 10 Years

China is dominating robotic installations, while European countries are beginning to lag behind.

Huawei details open-source AI development roadmap at Huawei Connect 2025

Open-source AI development took centre stage at Huawei Connect 2025 last week, with Huawei laying out implementation timelines and the technical specifics around making its entire AI software stack publicly available by year-end. The announcements came with context that matters to developers: frank acknowledgement of past friction, specific commitments about what components will be released, […]

The post Huawei details open-source AI development roadmap at Huawei Connect 2025 appeared first on AI News.

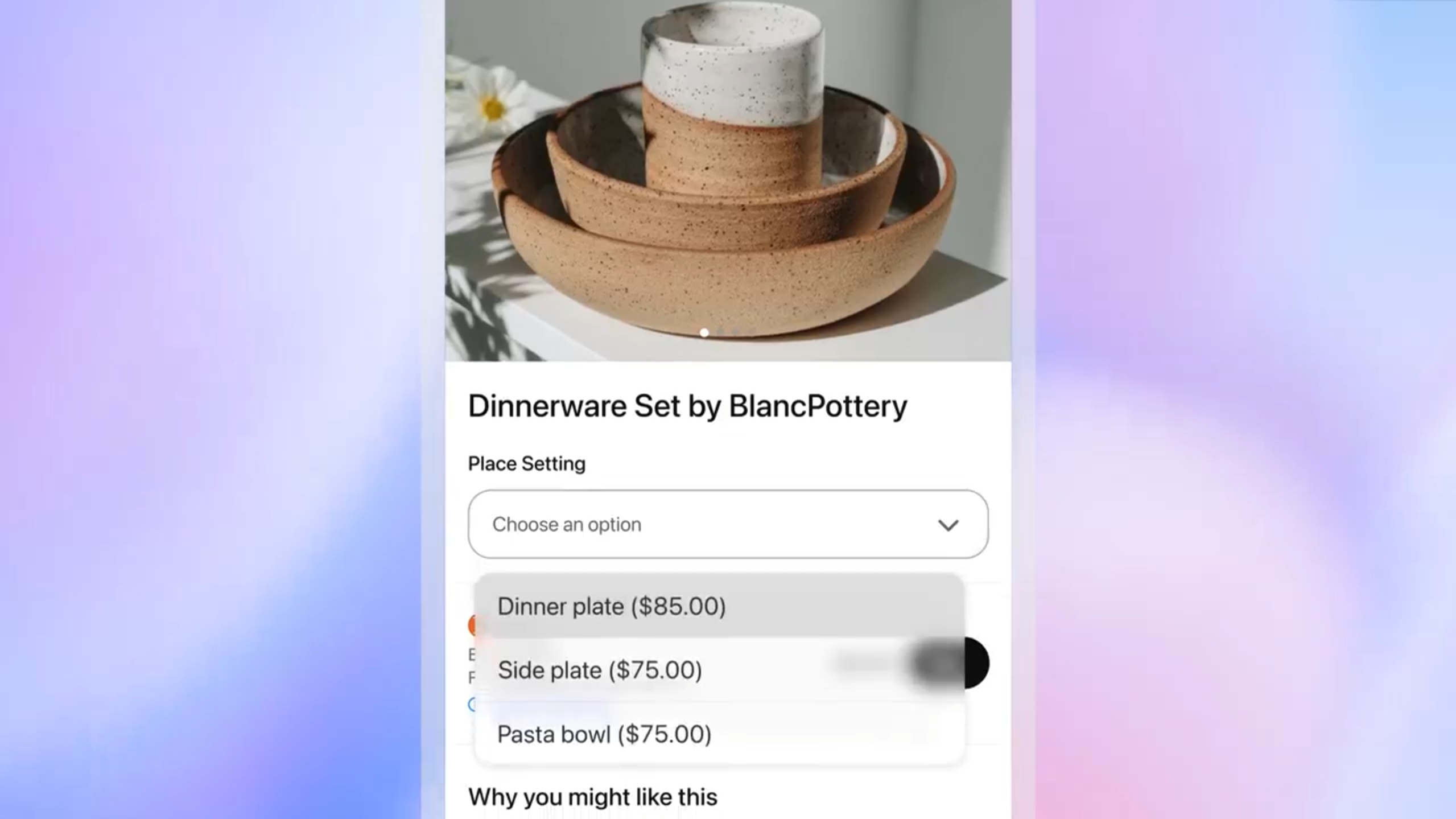

Users Can Shop From Etsy and Shopify in ChatGPT as OpenAI Launches New Agentic Commerce Protocol

Agentic Commerce Protocol (ACP), an open standard co-developed with Stripe, allows programmatic commerce flows between buyers, AI agents, and businesses.

The post Users Can Shop From Etsy and Shopify in ChatGPT as OpenAI Launches New Agentic Commerce Protocol appeared first on Analytics India Magazine.

Anthropic Launches Claude Sonnet 4.5, Touts It as ‘Best Coding Model in the World’

Claude Sonnet 4.5 achieved top scores on the SWE-bench Verified evaluation, which tests real-world software coding skills.

The post Anthropic Launches Claude Sonnet 4.5, Touts It as ‘Best Coding Model in the World’ appeared first on Analytics India Magazine.

Microsoft Brings ‘Vibe Working’ to 365 Copilot With Agent Mode and Office Agent

Office Agent in Copilot chat, powered by Anthropic models, brings presentation and document creation into a chat-first interface.

The post Microsoft Brings ‘Vibe Working’ to 365 Copilot With Agent Mode and Office Agent appeared first on Analytics India Magazine.

Lovable Introduces Cloud and AI Features to Build Full-Stack Apps

Lovable AI, powered by Google’s Gemini models, allows users to add artificial intelligence features to their apps without setup, API keys, or separate billing.

The post Lovable Introduces Cloud and AI Features to Build Full-Stack Apps appeared first on Analytics India Magazine.

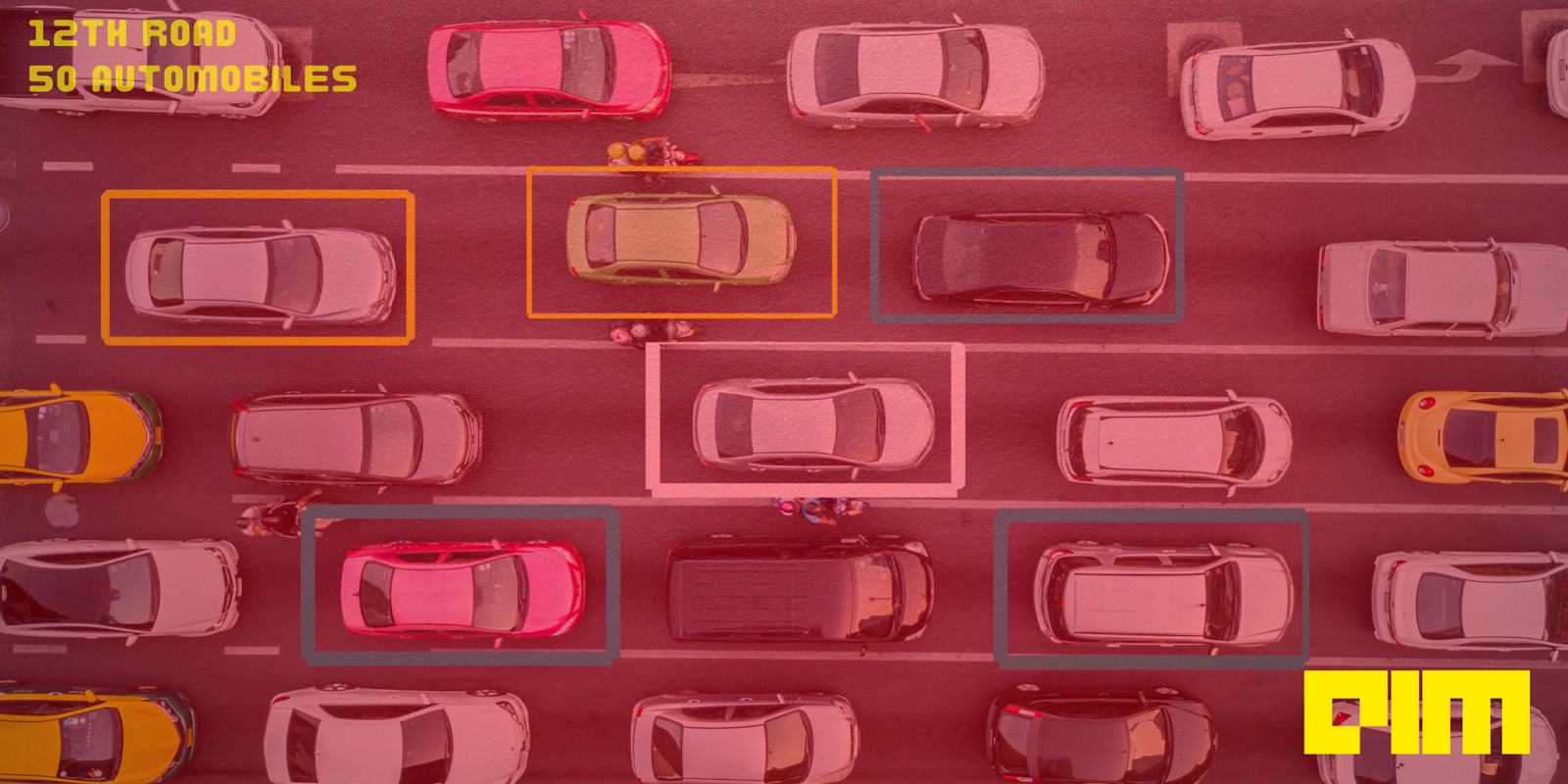

NIT Rourkela Patents AI Model to Boost Road Safety Through Vehicle-to-Vehicle Communication

This could pave the way for safer roads, smarter traffic management and the future of autonomous mobility in India.

The post NIT Rourkela Patents AI Model to Boost Road Safety Through Vehicle-to-Vehicle Communication appeared first on Analytics India Magazine.

Chennai Startup Agnikul Reveals Plans for its Fully Reusable Rockets

The company said it will ensure no part of its rockets is left behind or expended.

The post Chennai Startup Agnikul Reveals Plans for its Fully Reusable Rockets appeared first on Analytics India Magazine.

WhatsApp Vs Arattai – Can Made in India Messenger Win?

India’s swadeshi tech push faces its biggest challenge: can homegrown apps like Arattai rival WhatsApp? This debate explores patriotic sentiment, privacy concerns, and network effects. Featuring ministerial endorsements and Zoho’s swadeshi success, it examines whether India can build globally competitive digital products while riding the Make in India wave.

The post WhatsApp Vs Arattai – Can Made in India Messenger Win? appeared first on Analytics India Magazine.

Sahamati Labs, Google Cloud to Bring AI into India’s Account Aggregator Network

The partnership will use generative AI to build predictive insights, personalised recommendations, and fraud detection systems.

The post Sahamati Labs, Google Cloud to Bring AI into India’s Account Aggregator Network appeared first on Analytics India Magazine.

How Pure Storage India R&D Centre Built Pure KVA

Pure KVA, one of Pure Storage’s flagship innovations from India, helps enterprises reduce AI infrastructure costs by optimising GPU usage.

The post How Pure Storage India R&D Centre Built Pure KVA appeared first on Analytics India Magazine.

Spencer Kimball on How CockroachDB Will Power Efficient AI Agents

Spencer Kimball expects AI agents to outnumber humans, and CockroachDB is building the database backbone to handle that.

The post Spencer Kimball on How CockroachDB Will Power Efficient AI Agents appeared first on Analytics India Magazine.

Anthropic says its new AI model “maintained focus” for 30 hours on multistep tasks

Latest Claude model beats OpenAI and Google on coding tests.

Sports piracy site Streameast returns after US government let domain expire

ICE's HSI branch shut down Streameast in 2024.

EA will be a very different company under private ownership

Analysts see trouble for studios like BioWare, potential for Saudi meddling.

F-Droid says Google’s new sideloading restrictions will kill the project

F-Droid sees Google's plans as a threat to FOSS apps.

ESA will pay an Italian company nearly $50 million to design a mini-Starship

This is a "step forward in the transition of European launch systems toward full reusability."

Senators try to halt shuttle move, saying “little evidence” of public demand

"Removing an item from the National Collection is not a viable solution..."

Fortnite disables Peacemaker emote that might resemble a swastika

Epic will "inquire into our partner's creative intentions" before making a final decision.

Taiwan pressured to move 50% of chip production to US or lose protection

Trump official plots “impossible” deal moving Taiwan’s chip supply chain into US.

It’s official: EA is selling to private equity in $55 billion deal

Investors will receive $210 per share, well above the stock's all-time high.

Big AI firms pump money into world models as LLM advances slow

World models aim to navigate the physical world by learning from videos, robotic data.

ZR1, GTD, and America’s new Nürburgring war

Ford and Chevy set near-identical lap times with very different cars; we drove both.

Will AI make cheaper personalized insurance premiums possible? Here’s why it’s a slippery slope

In this age of algorithms, personalized insurance is encountering legal limits, especially the ban on discrimination. Can we balance accurate pricing, solidarity, and fairness?

Nvidia Is Quaking in Its Boots

"We're up against a formidable, innovative, hungry, fast-moving, underregulated [competitor]."

The post Nvidia Is Quaking in Its Boots appeared first on Futurism.

Trump’s Grip on Reality Questioned After He Shares and Then Deletes Bizarre AI-Generated Video

Is the president confused?

The post Trump’s Grip on Reality Questioned After He Shares and Then Deletes Bizarre AI-Generated Video appeared first on Futurism.

OpenAI Is Preparing to Launch a Social App for AI-Generated Videos

The platform appears to closely resemble TikTok and is powered by Sora 2, OpenAI's latest video generation model.

Marissa Mayer Is Dissolving Her Sunshine Startup Lab

After seven rocky years, the company’s assets will be sold to Dazzle, a new AI firm that Mayer founded.

Building a Streamlit Python UI for LLaVA with OpenAI API Integration

Table of Contents Building a Streamlit Python UI for LLaVA with OpenAI API Integration Why Streamlit Python for Multimodal Apps? What Is Streamlit Python? The Streamlit Python-Based UI We Will Build in This Lesson Why Not FastAPI or Django? Configuring…

The post Building a Streamlit Python UI for LLaVA with OpenAI API Integration appeared first on PyImageSearch.

Stripe Joins With OpenAI to Allow Etsy Purchases Via ChatGPT

The payment-processing company Stripe Inc. is working with OpenAI to allow US shoppers to buy products through ChatGPT, the popular artificial-intelligence tool.

Google to Pay $24.5 Million to End Trump Jan. 6 Ban Lawsuit

Alphabet Inc.’s Google agreed to pay $24.5 million to resolve Donald Trump’s claims that being banished from his YouTube channel after the Jan. 6, 2021, riot at the US Capitol was illegal censorship, according to a court filing.

Verizon Is in Talks to Purchase EchoStar Wireless Spectrum

Verizon Communications Inc. is in discussions with EchoStar Corp. about purchasing some of its wireless spectrum, according to people familiar with the matter.

AI Data Centers Are Sending Power Bills Soaring

Wholesale electricity costs as much as 267% more than it did five years ago in areas near data centers. That’s being passed on to customers.

S&P Says It Expects To Cut Electronic Arts to Junk Status

S&P Global Ratings said it expects to cut Electronic Arts Inc.’s credit grades to junk status after the video-game maker’s buyout deal is closed, citing the expected addition of about $20 billion of debt from the transaction.

Pressure Grows on SingTel as Optus Emergency Call Blunders Mount

As Optus reels from another emergency call outage, the crisis has spread beyond Australia’s borders. The escalating reputational damage to the nation’s second-largest phone company is now bearing down on its parent Singapore Telecommunications Ltd.

Robinhood Shares Climb 12% on Growth in Prediction-Market Trades

Robinhood Markets Inc. shares rose over 12% after Chief Executive Officer Vladimir Tenev said the online-stock brokerage has made strong inroads into prediction markets, where people wager on elections, sporting matches and other widely followed events.

EA Agrees to Largest Leveraged Buyout in History | Bloomberg Tech 9/29/2025

Bloomberg’s Caroline Hyde and Ed Ludlow discuss Electronic Arts’ agreement to be sold in a record-breaking leveraged buyout. Plus, Huawei plans to ramp up production of its most advanced AI chips in a bid to take market share from Nvidia. And, Peloton is preparing to launch new AI software in its latest bid to revive the struggling fitness firm. (Source: Bloomberg)

Saudi Plans for Video-Game Hub Grow With $55 Billion EA Deal

Saudi Arabia is accelerating plans to transform itself into a hub for gamers with its blockbuster deal to take Electronic Arts Inc. private.

EA Agrees to $55 Billion Sale in Largest Leveraged Buyout on Record

Electronic Arts Inc. has agreed to sell itself in the largest leveraged buyout on record to a group of investors that includes a firm managed by President Donald Trump’s son-in-law Jared Kushner and Saudi Arabia’s sovereign wealth fund.

US Hits Sanctioned Firms’ Subsidiaries, Drawing Chinese Rebuke

The Trump administration is dramatically expanding US sanctions to capture subsidiaries of blacklisted companies — a crackdown that drew a swift rebuke from China, where key tech giants are already subject to stringent American trade curbs.

Anthropic Says New Model Can Code on Its Own for 30 Hours Straight

Anthropic is releasing a new artificial intelligence model that is designed to code longer and more effectively than prior versions, its latest attempt to stay ahead of rivals like OpenAI in offering tools for software developers. The new model, called Claude Sonnet 4.5, is better at following instructions and can code on its own for up to 30 hours straight, the company said on Monday. By comparison, a previous model called Claude Opus 4 is said to be able to field coding tasks for up to seven h

Walmsley’s Dream Hire Badly Needs to Find Some Blockbuster Drugs

Nearly nine years ago, when Emma Walmsley was preparing to take over as CEO of GSK Plc, she wrote in her diary that Luke Miels — then an executive at rival AstraZeneca Plc — would be “a dream appointment for this adventure.”

Black Forest Labs, AI Image Partner for Meta, in Talks for Funding at $4 Billion Valuation

German artificial intelligence startup Black Forest Labs is in discussions to raise funding that could boost its valuation to around $4 billion, according to people familiar with the matter.

Blue Owl, xAI Bets Boost Qatar Fund’s $500 Billion US Pledge

The $524 billion Qatar Investment Authority is stepping up its investments in the US with high-profile commitments to artificial intelligence and digital infrastructure, underscoring how Doha is keeping its economic priorities separate from geopolitical tensions in the Middle East.

Asahi Suspends Some Beverage Shipments After Hit by Cyberattack

Asahi Group Holdings Ltd. suspended some beverage shipments on Monday after a cyberattack hobbled some of the drinks company’s operations in Japan.

ArcelorMittal May Get $491 Million IDC South Africa Unit Bid

South Africa’s top development finance institution is working with financial advisers on a possible bid of about 8.5 billion rand ($491 million) for control of ArcelorMittal SA’s business in the country, people familiar with the situation said.

Comcast Promotes Michael Cavanagh to Co-Chief Executive Officer

Comcast Corp. named Michael Cavanagh as co-chief executive officer alongside Brian Roberts on Monday and added him to the company’s board of directors.

Pimco CEO Roman on AI Financing, Private Markets, Fixed Income

Manny Roman, CEO at Pacific Investment Management Co. (Pimco), discusses investing in AI infrastructure, global opportunities in fixed income, and why tariff uncertainties pose a risk to US stocks. He spoke Monday on “Bloomberg Surveillance.” (Source: Bloomberg)

Electric Aircraft Firm Beta Technologies Files for US IPO

Beta Technologies Inc., an electric aircraft producer, filed for a US initial public offering, disclosing growing revenue and widening losses.

Netanyahu Discusses AI in Private Meeting With Tech Investors

Israeli Prime Minister Benjamin Netanyahu met with US tech executives and investors in New York on Sunday to discuss the prospects for using artificial intelligence to boost his country’s economy and military capabilities, according to people familiar with the matter.

Electronic Arts Said to Near Buyout Deal at About $210 Per Share

Video game maker Electronic Arts Inc. is in advanced talks to go private at about $210 a share, according to people familiar with the matter.

DeepSeek Debuts ‘Sparse Attention’ Method in Next-Gen AI Model

DeepSeek updated an experimental AI model Monday in what it called a step toward next-generation artificial intelligence.

Fed’s Waller Says Technology Can Deliver More Choice in Payments

US regulators ought to welcome the ways new technologies could benefit financial firms as well as consumers, while imposing guardrails that promote safety, Federal Reserve Governor Christopher Waller said.

OpenAI Floats the Promise of a More Useful Personal AI

Welcome to Tech In Depth, our daily newsletter about the business of tech from Bloomberg’s journalists around the world. Today, Austin Carr looks at OpenAI’s effort to offer ChatGPT users a personal daily briefing — and all-knowing assistant.

US to See $350 Billion Nuclear Boom to Power AI, Report Says

Soaring demand for electricity will drive a $350 billion nuclear spending boom in the US, boosting output from reactors by 63% by 2050, according to Bloomberg Intelligence.

Peloton Is Set to Kick Off Turnaround with Hardware, AI Product Launch

Peloton Interactive Inc. is preparing to kick off its second comeback attempt in three years, following a previous effort that fell short of expectations and lacked any major consumer-facing upgrades.

Xiaomi Says New IPhone Rival Outpacing Previous Model at Launch

Xiaomi Corp. said it’s confident its new iPhone rival will exceed the sales of its previous model, saying the phone saw record demand immediately after its debut on Saturday.

OpenAI Launches Parental Controls for ChatGPT After Teen’s Death

OpenAI is launching parental controls for ChatGPT, which it announced following a lawsuit alleging a teenager who died by suicide this spring relied on the popular chatbot as a coach.

YouTube to pay $22 million to Trump to settle post-January 6 ban case

In total, YouTube will pay $24.5 million to settle the suit brought by President Donald Trump.

EA's boss won't have to answer to Wall Street. That doesn't mean the pressure's over.

Andrew Wilson has helmed the video-game company since 2013 and will remain CEO after the deal closes.

US Navy techs are stripping parts from fighter jets and subs to keep other ones working

Long delays to get parts for F/A-18 fighters and Virginia-class submarines have forced maintainers to strip from other aircraft and subs.

I made Ina Garten's 'outrageous brownies,' the most popular dessert at her famous store. They tasted incredible.

Ina Garten said these "outrageous brownies" flew off the shelves at her famous Barefoot Contessa store. Her easy recipe is the perfect dessert.

Move over, doodles. There's a new 'It' dog in town.

Dachshunds could break into the American Kennel Club's top five most popular breeds this year, and wiener-dog influencers might help them do it.

How to get Ariana Grande tickets: Chicago, Atlanta, Los Angeles, and more

Though it won't kick off until the summer of 2026, Ariana Grande's coming tour is already sold out. Here's where to find tickets from resellers.

I paid $300 to spend 2 nights in an Airstream trailer for the first time. I'll never do it again in a city.

I stayed in a luxury Airstream trailer in Vienna. It had a bathtub and a comfy bed, but it was also noisy and lacked storage hacks.

Charlie Javice sentenced to 7 years in prison for JPMorgan Chase fraud

Charlie Javice got a 7-year prison sentence for defrauding JPMorgan Chase in the $175M sale of her fintech startup Frank.

The Army and Marine Corps are paying more to repair vehicles that are less ready to fight, watchdog says

Costs for vehicle repairs have soared, but the services are getting less availability and mission readiness in return.

Vintage photos show how dangerous railways, mills, and other workplaces used to be

Working in railways, mines, and mills caused thousands of deaths in the early 20th century and before. Photos show the dangerous workplaces.

Microsoft is buying millions of carbon credits. Meet the investor working to make them real.

Big power users like Microsoft and the Mercedes F1 team are buying carbon credits despite the questions surrounding the practice.

The Tiny Team era is here

AI-powered startups are proving the Tiny Team era is here. Five founders and employees share the pros and cons of working alongside AI agents.

I expected the Ryder Cup to be one big frat party. The level of corporate polish caught me by surprise.

There was light rowdiness on Friday — boos, drinks, porta potties — but the contrast to corporate America struck me more than the chaos itself.

What Kamala Harris' style has looked like throughout her career, from suits to sparkling gowns

Kamala Harris usually wears pantsuits, Converse sneakers, and pearl jewelry. Over the years, though, she's incorporated gowns and jeans.

I was sick of spending my travel budget on weddings, so now I turn every invitation into a vacation

Over the past few years, I've been invited to lots of weddings that required me to travel. Now, I like to turn every invite into a mini vacation.

Marissa Mayer's shuttered photo app was just too beautiful for this cruel world

Former Yahoo CEO Marissa Mayer's photo-sharing app never really caught on. So she's reportedly moving on — to a new AI personal assistant.

My family of 5 went on our first cruise. We had fun, but there are 4 things I wish we'd known before getting on the ship.

There are a few things I wish I had known before my first cruise with my family. Next time, I'll turn my phone on airplane mode and pack formal wear.

I ate dinner at one of Ina Garten's favorite restaurants. It was worth every penny.

Nick & Toni's, a favorite of Ina Garten, is a cozy restaurant that offers delicious dishes. You might even spot some A-listers while dining there.

22 musicians we'd love to see perform the Super Bowl halftime show

Bad Bunny is officially the 2026 Super Bowl halftime show headliner. Here are other musicians who deserve a shot, too.

Prologis exec explains why the company has an edge in the red-hot data center space

During Business Insider's "The Resiliency Playbook" event, a Prologis executive explained why the company is primed to develop data centers.

Are H-1B Changes ‘A Strategic Opening’ For Startup Creation Or An ‘Insurmountable Founder Tax’?

Will the new $100,000 fee for H-1B applications help or hurt the U.S. startup ecosystem? Investors and an immigration attorney who spoke with Crunchbase News were divided over the impact of the changes.

Exclusive: Trucker’s Son Bucks Logistics Funding Decline with $40M Raise For Startup Alvys

Alvys, an AI-powered logistics software provider, has raised $40 million in an RTP Global-led Series B funding, the company tells Crunchbase News exclusively.

Biotech Share Of US Funding Hits Lowest Point In Crunchbase History

The share of U.S. startup investment going to biotech companies has hit the lowest level in more than 20 years, as an ever-growing proportion of funding goes to AI upstarts in other sectors. We take a look at what else we found in a data dive.

Timing The Market Is A Myth: Here’s How To Stay Ahead Of It

Mistimed market entry is one of the top five reasons startups fail, writes guest author Ashish Kakran of Sierra Ventures. While the perfect time to go to market is hard to calculate, he explains how just a slight edge ahead of the pack can make all the difference.

Claude Sonnet 4.5 Is Here

Customers process exabytes of data daily on Databricks, and generative AI is already...

How AI Agents Transform the Healthcare Sector

TL;DR AI agents are autonomous, goal-driven systems that perceive, reason, and act – handling multi-step clinical workflows with human-like adaptability (e.g., diagnosing and offering mental health support) Unlike rule-based automation, […]

The post How AI Agents Transform the Healthcare Sector appeared first on Datafloq.

Disconnected Demographic Data? Here’s How Data Appending Can Fix It

B2B marketing strategies suffer from blind spots due to incomplete demographic data. Decision-makers cannot tailor their approaches effectively without vital client demographic information. When B2B enterprise leaders use poor-quality demographic […]

The post Disconnected Demographic Data? Here’s How Data Appending Can Fix It appeared first on Datafloq.

The Role of Synthetic Data in AI Model Training

Artificial Intelligence (AI) and Machine Learning (ML) are revolutionizing industries with smart automation, predictive analytics, and larger scale AI-enabled decisions, but any AI production deployment is only as good as […]

The post The Role of Synthetic Data in AI Model Training appeared first on Datafloq.

Data is at the Center of Scientific Discovery Inside MIT’s New AI-Powered Platform

AI-powered tools have become more common in scientific research and development, especially for predicting outcomes or suggesting possible experiments using datasets. However, most of these systems only work with limited […]

The post Data is at the Center of Scientific Discovery Inside MIT’s New AI-Powered Platform appeared first on BigDATAwire.

Practical ways to use MCP at work

🧠 Market analysis, create design-grade prototypes, test your product’s APIs, build your own Chief of Staff and more…

✨ New on Code-Tree.dev — Discover Suggested Profiles!

We’ve just rolled out a small but mighty new feature on code-tree.dev: profile suggestions! 🎉

Now, when you visit someone’s Code Tree profile, you’ll see a couple of recommended profiles just below their links — complete with avatars, names, and clickable handles.

At its core, Code Tree is about helping developers and makers showcase what they build, what they love, and what they stand for — in one clean, beautiful profile.

But we’re also about discovery.

- Find other devs building in the same stack.

- Explore profiles with similar tools or interests.

- Get inspired by how others present their work.

Build your profile in minutes, link your projects, tools, and stack — and join a growing community of makers.

Got feedback or feature ideas? We’d love to hear from you! Drop a comment or reach out on GitHub 💬

Happy building!

— The Code Tree team 🌱

Automating AWS Lambda Deployment using GitHub Actions

Why Teams Are Automating Lambda Deployments

With the growing adoption of serverless architectures, AWS Lambda has become a core compute solution for running event-driven workloads. However, manually deploying Lambda functions introduces the risk of inconsistency, downtime, and human error. DevOps teams are increasingly automating these processes using CI/CD pipelines.

GitHub Actions provides a powerful platform to integrate automation directly into the version control system. This empowers developers to trigger deployments automatically on code pushes, PR merges, or manually through workflow dispatches.

Core Components of the Automation Workflow

Preparing Your Lambda Function Code

Organize your function code in a directory structure that’s easy to zip and upload. Make sure to include only necessary dependencies. If your code relies on external Python packages, use a requirements.txt and deploy with dependencies zipped in a package directory.

GitHub Actions Workflow File (.github/workflows/deploy.yml)

Create a GitHub Actions workflow YAML file to define your deployment pipeline. A basic Python example looks like this:

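    name: Deploy Lambda

    on:
      push:
        branches:
          - main

    jobs:
      deploy:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout Code
            uses: actions/checkout@v3

          - name: Set up Python
            uses: actions/setup-python@v4
            with:
              python-version: '3.9'

          # Install dependencies into ./package, then zip them together with the handler
          - name: Install dependencies
            run: |
              pip install -r requirements.txt -t package
              cd package
              zip -r ../function.zip .
              cd ..
              zip -g function.zip lambda_function.py

          # Load the credentials stored in GitHub Secrets so the AWS CLI can use them
          - name: Configure AWS credentials
            uses: aws-actions/configure-aws-credentials@v4
            with:
              aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
              aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
              aws-region: us-east-1

          # Upload the zip with the AWS CLI (preinstalled on GitHub-hosted Ubuntu runners)
          - name: Deploy to Lambda
            run: |
              aws lambda update-function-code \
                --function-name myLambdaFunction \
                --zip-file fileb://function.zip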
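The workflow above zips a file named lambda_function.py, but the post does not show its contents. As a minimal sketch, assuming the function's handler is configured as lambda_function.lambda_handler and that the event carries an optional, purely illustrative "name" field, the file might look like this:

```python
import json


def lambda_handler(event, context):
    """Entry point AWS Lambda invokes for each event."""
    # "name" is a hypothetical event field used only for illustration.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Keeping the handler free of side effects at import time makes it easy to call locally with a plain dict before the pipeline ever deploys it.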

AWS IAM Permissions and Secrets Configuration

Create an IAM user whose permissions allow updating Lambda function code via lambda:UpdateFunctionCode, and store its credentials in GitHub Secrets. To avoid leaking secrets, do not print environment variables in workflow steps, and review the workflow logs to confirm that no credentials appear in the output.

Secret: AWS_ACCESS_KEY_ID

Secret: AWS_SECRET_ACCESS_KEY
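To make the permission requirement concrete, here is a small sketch that builds an IAM policy document granting only lambda:UpdateFunctionCode on a single function. The helper name build_deploy_policy and the account ID 123456789012 are placeholders introduced for illustration; substitute your own values.

```python
import json


def build_deploy_policy(region, account_id, function_name):
    """Return an IAM policy document that allows code updates to one function only."""
    function_arn = f"arn:aws:lambda:{region}:{account_id}:function:{function_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["lambda:UpdateFunctionCode"],
                "Resource": function_arn,
            }
        ],
    }


if __name__ == "__main__":
    # 123456789012 is a placeholder account ID, not a real one.
    policy = build_deploy_policy("us-east-1", "123456789012", "myLambdaFunction")
    print(json.dumps(policy, indent=2))
```

Scoping the Resource to one function ARN, rather than "*", limits what a leaked key could change.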

Advanced Features for Production Pipelines

Branch Filtering: Deploy only on specific branches like main or release/*.

Workflow Dispatch: Trigger manual deploys using workflow_dispatch:.

Environment Promotion: Deploy to dev, staging, and prod using environment protection rules and matrix builds.

Monitoring: Integrate Slack, Datadog, or Amazon CloudWatch for post-deployment notifications.

Conclusion

Automating AWS Lambda deployments using GitHub Actions leads to faster delivery cycles, reproducible builds, and minimized manual tasks. By defining a clear release workflow, setting up the right permissions, and using environment configurations, engineering teams can streamline serverless development at scale.

Explore the GitHub Actions Marketplace for more Lambda integration tools.

Building a Full Stack Web App from scratch: First Steps

I am Constanza Díaz, Web developer, industrial designer, UX/UI enthusiast, aspiring entrepreneur, and self-taught digital product creator — currently building my own web application and documenting the process.

I've been working as a front end developer for the last 6 years and now I'm planning to develop my own web application named HandyFEM, a digital platform designed to connect women professionals in skilled trades with clients.

I am doing this as a way to learn by doing, as artificial intelligence is evolving so quickly and becoming a must for every developer and digital product creator and owner. At the same time, I will build my own personal project, which I am developing as a business project at Innoemprèn Sostenible, a program for entrepreneurs at Tecnocampus University in Barcelona, Spain.

Building this app will be a good way to create a very complete application where multiple features can be added — this is a very big challenge as a designer-developer-entrepreneur and a true one-woman band, but also a great way to show all my multiple skills in one place :D.

After a few attempts at "vibe coding" without a plan using Vercel v0 and Lovable, I realized my app is too ambitious to be done in only one prompt :D. So I decided to take a professional approach and document it, so that I can learn everything during the process and get feedback from other devs and designers.

I'm documenting every step — from mapping the app structure to experimenting with AI-driven prototyping.

For this project, I will start with Vercel v0 as an AI assistant for generating frontend components, with Supabase as the backend layer. This blog series will serve both as a technical journal and a record of decisions that shape the MVP.

AI tools like Vercel v0 can generate impressive amounts of code quickly. The risk? Losing track of fundamentals if the product lacks a clear structure.

To avoid gaps, I started by outlining the navigation map of HandyFEM: a high-level diagram of screens, user flows, and connections. This ensures that all the important features won’t be overlooked later.

Here’s the first structural map created in FigJam. It defines the scope of the MVP and keeps development aligned:

Caption: HandyFEM Navigation Map (MVP) — Yellow: buttons | Green: components (will develop more detail later).

Key sections:

- Landing Page: entry point with signup, login, and directory access.

- Auth: client and professional signup, plus login.

- Role-Based Dashboards: tailored panels for clients and professionals.

- Public Directory: searchable list of professionals with filters.

- Professional Profiles: detailed pages with contact options.

- Messaging System: list of conversations and direct chat.

Dashboard ≠ Profile

Dashboards serve as control centers. Profiles are just editable data. Keeping them separate opens room for metrics, history, or verification in the future.

Roles must be explicit

Defining client vs. professional flows early makes the product scalable without major refactors.

Planning is leverage

One diagram clarified priorities and prevented the common trap of coding first and restructuring later.
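One way to picture the first two lessons is a small data model in which the role is an explicit field and the dashboard is derived from it rather than stored on the profile. This is only an illustrative sketch in Python, not HandyFEM's actual code: the class names, fields, and route strings are all assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    """Explicit roles, so client and professional flows never blur."""
    CLIENT = "client"
    PROFESSIONAL = "professional"


@dataclass
class Profile:
    """Editable user data only; no navigation or metrics live here."""
    user_id: str
    display_name: str
    role: Role


# Hypothetical route names; the post does not specify the app's real paths.
DASHBOARD_ROUTES = {
    Role.CLIENT: "/dashboard/client",
    Role.PROFESSIONAL: "/dashboard/professional",
}


def dashboard_route(profile: Profile) -> str:
    """Pick the dashboard from the role, keeping dashboards separate from profiles."""
    return DASHBOARD_ROUTES[profile.role]
```

Because the dashboard is looked up from the role, adding metrics or verification later means changing the dashboard side without touching the profile data.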

The next step is translating this map into Jira epics and tasks, preparing a Sprint 0 focused on:

- Setting up Supabase tables and relationships.

- Configuring authentication (email + password).

- Establishing the initial Next.js + Tailwind architecture.

This structured approach allows me to integrate AI tools without sacrificing control or long-term scalability.

👉 This post opens the series on building HandyFEM from scratch. The upcoming entries will cover Jira planning, Sprint 0 setup, and the integration of Vercel v0 into the workflow. Follow the hashtag #HandyFEMapp if you don’t want to miss the progress!

📎 For those who want to dive deeper or leave comments on my board, the full navigation map is available here: Open FigJam Board

🔗 Previous: none (this is the first post)

🔗 Next: Coming soon: From Diagrams to Tickets (Organizing HandyFEM with Jira)

Zero to Cloud: 12 Weeks of AWS re/Start + Free AWS CCP Exam

I graduated from the AWS re/Start program under Ajira Digital as part of Cohort 12. The intensive 12-week program teaches the basics to anyone interested in moving into cloud and passing the practitioner exam. Here is all you need to know about the program.

AWS re/Start is a free, full-time training program created by Amazon Web Services to help individuals from diverse backgrounds launch careers in the cloud.

The program runs across the African continent through regional partners (Ajira is the delivery partner in Kenya). In just three months, learners go through an intensive mix of:

1. Cloud Fundamentals: AWS services, security, databases, networking.

2. Technical Skills: Linux, Python scripting, version control.

3. Professional Skills: communication, teamwork, resume coaching, and interview preparation.

4. Certification Prep: structured guidance for the AWS Certified Cloud Practitioner exam.

The program is designed for anyone curious about cloud technology, whether you’re a recent graduate, career switcher, or someone looking to upskill.

The 12 weeks are highly structured and immersive. A typical week includes:

- Morning sessions for theory and new concepts.

- Hands-on labs where you practice AWS services in real time.

- Afternoon sessions for deep dives and collaborative problem-solving.

- Career labs dedicated to employability training — CV building, interview prep, and workplace skills.

The schedule is full-time, Monday to Friday, and demands complete commitment. After the 12 weeks, the fee for the AWS Certified Cloud Practitioner exam is waived in full, which is an added bonus.

To get into the program, here is the pathway I followed:

- Apply via the AWS re/Start portal or through a local partner (Ajira in Kenya, other organizations across Africa).

- Demonstrate motivation and availability — you’ll need to commit to all 12 weeks, full-time.

- Complete the selection process, which includes interviews and assessments by the training partner.

- Join a cohort: here, you are immersed in a community of learners guided by experienced trainers.

Why AWS re/Start Matters

Cloud adoption across Africa is accelerating, but the demand for skilled professionals far outpaces supply. AWS re/Start directly addresses this gap by giving learners not just knowledge, but also career-readiness and connections to employers.

As a Cohort 12 graduate, I can confidently say AWS re/Start is more than a training program; it is a career launchpad. If you’re passionate about tech and looking for a way into cloud computing, this program is worth considering. Applications open periodically, so check the Ajira AWS re/Start program LinkedIn page to see if the next cohort could be your gateway to the cloud.

CSS Pseudo-Elements: Enhance Your Projects with Style!

This article explores CSS pseudo-elements, which enable easy styling and content modification without additional HTML elements, and how I used them to create eyes for the ghosts in my JS Pac-Man game!

CSS Before & After Pseudo-Elements

CSS pseudo-elements allow you to insert content before or after an element's actual content without adding extra HTML elements. The ::before and ::after pseudo-elements are popular and can help you style and modify content easily.

Some of the other commonly used pseudo-elements include:

- ::first-line: Applies styles to the first line of a block-level element.

- ::first-letter: Styles the first letter of a block-level element.

- ::selection: Styles the portion of an element that is selected by the user.

- ::placeholder: Styles the placeholder text in an input or textarea element.

- ::marker: Styles the marker box of a list item, such as bullets or numbers.

⚠ Important: Pseudo-elements can't be targeted in JavaScript as they aren't part of the DOM. They serve decorative purposes, but you can indirectly manipulate their styles by altering their parent elements' styles or modifying CSS rules dynamically.
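One common way to do this indirect manipulation is through a CSS custom property: pseudo-elements inherit custom properties from the element they belong to, so setting the property on the element restyles its ::before and ::after. The sketch below assumes a hypothetical `--eye-color` property and an assumed CSS rule (shown in the comment); neither appears in the game's stylesheet as described in this article.

```javascript
// Assumed CSS, for illustration only:
//   .ghost::before,
//   .ghost::after { background-color: var(--eye-color, black); }

// Set the custom property on the real element. Its pseudo-elements
// inherit the value, so both eyes change color without being selected.
function setEyeColor(ghostEl, color) {
  ghostEl.style.setProperty('--eye-color', color);
}

// Pseudo-elements cannot be selected, but their computed style can be
// read by passing the pseudo-element name as the second argument.
// `view` is the window object; it is a parameter so it can be stubbed.
function readEyeColor(ghostEl, view = globalThis) {
  return view.getComputedStyle(ghostEl, '::before').backgroundColor;
}

// Browser usage (assumes an element with class "ghost" exists):
//   const ghost = document.querySelector('.ghost');
//   setEyeColor(ghost, 'white');
//   console.log(readEyeColor(ghost));
```

Toggling a class on the element, as the `.scared` rule later in this article does, is the other common approach and needs no JavaScript beyond `classList`.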

Why Is This a Valuable Coding Concept?

Understanding and utilizing CSS pseudo-elements is valuable because they enhance styling capabilities by allowing you to add decorative content and style specific parts of an element without altering the HTML structure. This leads to improved code maintainability, as it keeps HTML clean and semantic, reducing the need for additional markup.

Note: Since I’m following team development practices and pushing updates to the develop branch, some of the changes I describe in this article may not be live in the deployed version of the game yet.

Ghost’s Eye Direction

This article explains how I used CSS pseudo-elements to add eyes to the ghosts in my JavaScript Pac-Man game and simulate their eye movements!

For my JavaScript Pac-Man game, I created each ghost's eyes using the ::before and ::after CSS pseudo-elements: ::before for the left eye and ::after for the right eye. Each eye is simply a box with a 50% border radius, a black background, and a thick white border.

Taking some creative liberty, I reposition the whole eyes to create the effect, unlike the original arcade game, where only the pupils move. In my game, the left and right eyes remain grouped, and I slightly shift them in the direction the ghost is moving. In action, I believe it is pretty convincing!

First, I created a main ghost class. Using a black background, I rounded out the ghost’s head with the top left and right border radius, and I used a clip-path to create the jagged bottom of the ghost.

.ghost {

border: 1px solid white;

background-color: black;

position: relative;

width: 200px;

height: 200px;

margin:10px;

border-top-left-radius: 50%;

border-top-right-radius: 50%;

clip-path: polygon(50% 0%, 100% 0, 100% 100%, 81% 91%, 66% 100%, 49% 91%, 31% 100%, 15% 91%, 0 100%, 0 0);

}

Next, I create the ghost’s left eye using the ::before CSS pseudo-element. I create a box and use a thick white border with a black background, rounding it out with a 50% border radius to complete the effect. The eye is positioned absolutely, sitting 100 pixels from the bottom and slightly shifted to the right with a margin, giving it the appearance of a floating eye on the ghost.

.ghost::before {

border: 12px solid white;

border-radius: 50%;

background-color: black;

content: "";

width: 30px;

height: 30px;

position: absolute;

bottom: 100px;

margin: 0 0 0 50px;

}

For the ghost's right eye, the ::after CSS pseudo-element is used. It mirrors the left eye by creating another circular shape with a thick white border and a black background, using a 50% border radius for the round look. This eye is also positioned absolutely, 100 pixels from the bottom, but it's shifted further to the right with a margin, making it appear as the ghost's second floating eye.

.ghost::after {

border: 12px solid white;

border-radius: 50%;

background-color: black;

content: "";

width: 30px;

height: 30px;

position: absolute;

bottom: 100px;

margin: 0 0 0 100px;

}

Ghosts’ Colors

I created a separate class for each ghost color so that I can easily use them in conjunction with the main ghost class.

/* Ghost colors */

.ghost-red {

background-color: rgb(255,12,14);

}

.ghost-pink {

background-color: rgb(255,165,166);

}

.ghost-blue {

background-color: rgb(147,255,255);

}

.ghost-orange {

background-color: rgb(253,123,13);

}

Scared Ghost Color

For a scared ghost, I changed the color of the ghost to blue and the eyes to white.

/* Scared */

.scared {

background-color: blue;

}

.scared::before,

.scared::after {

background-color: white;

}

Combining Ghost Classes

Now that there are classes for a main ghost, a ghost color, and a scared ghost, I can combine them to create a single ghost with the ability to change its eye direction!

<div class="flex">

<div class="ghost ghost-red ghost-look-left"></div>

<div class="ghost ghost-blue ghost-look-up"></div>

<div class="ghost ghost-pink ghost-look-down"></div>

<div class="ghost ghost-orange ghost-look-right"></div>

<div class="ghost scared"></div>

<div class="ghost"></div>

</div>

JavaScript Ghost Eye Direction

In my JavaScript Pac-Man game, I created a Ghost class that includes an eyes property and other properties essential for the ghost's functionality.

Within the function that moves the ghosts, there is code to determine a valid direction—left, right, down, or up—and assign it. Once a direction is assigned, I update the CSS ghost's eye direction class by removing the current class and applying the new one.

// Eye direction

if (direction === -1) {

squares[ghost.currentIndex].classList.remove(ghost.eyes);

ghost.eyes = `ghost-look-left-${eyeSize}`;

} else if (direction === 1) {

squares[ghost.currentIndex].classList.remove(ghost.eyes);

ghost.eyes = `ghost-look-right-${eyeSize}`;

} else if (direction === 28) {

squares[ghost.currentIndex].classList.remove(ghost.eyes);

ghost.eyes = `ghost-look-down-${eyeSize}`;

} else if (direction === -28) {

squares[ghost.currentIndex].classList.remove(ghost.eyes);

ghost.eyes = `ghost-look-up-${eyeSize}`;

}
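The four branches above differ only in the direction name, so the same logic can be expressed with a lookup table. This is a sketch of an alternative, not the game's actual code: `BOARD_WIDTH`, `EYE_DIRECTIONS`, `eyeClassFor`, and `updateEyes` are hypothetical names, and the board width of 28 is inferred from the ±28 offsets in the snippet.

```javascript
// Assumption: the board is 28 squares wide, so moving one row changes
// the index by 28 (inferred from the 28 / -28 checks in the original).
const BOARD_WIDTH = 28;

// Maps an index offset to the direction name used in the CSS class.
const EYE_DIRECTIONS = new Map([
  [-1, 'left'],
  [1, 'right'],
  [BOARD_WIDTH, 'down'],
  [-BOARD_WIDTH, 'up'],
]);

// Builds the class name, or returns null for an unknown direction.
function eyeClassFor(direction, eyeSize) {
  const name = EYE_DIRECTIONS.get(direction);
  return name ? `ghost-look-${name}-${eyeSize}` : null;
}

// Mirrors the original branches: remove the old class from the square
// and remember the new one. As in the original snippet, adding the new
// class is left to the code that draws the ghost on its next square.
function updateEyes(ghost, square, direction, eyeSize) {
  const nextClass = eyeClassFor(direction, eyeSize);
  if (nextClass === null) return; // unknown direction: keep current eyes
  if (ghost.eyes) square.classList.remove(ghost.eyes);
  ghost.eyes = nextClass;
}
```

Inside the move function this would be called as `updateEyes(ghost, squares[ghost.currentIndex], direction, eyeSize)`. The table also makes it harder for one branch to drift out of sync with the others.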

To make trial-and-error creation easier, I initially styled all the ghosts' eye directions on CodePen, using a ghost size of 200px for both width and height. In my game, the ghost is either 20px or 10px in width and height, depending on full-screen or mobile view. With GitHub Copilot, I was able to easily resize the CSS values by letting the AI handle the calculations.
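The resizing itself is a proportional scale from the 200px design size to the in-game size. As a rough sketch of that arithmetic (the helper names `scalePx` and `scaleEye` are hypothetical; the input values are the ones from the .ghost::before and .ghost::after rules above):

```javascript
// Ghost size used for the CodePen design, from the article.
const DESIGN_SIZE = 200;

// Scales one pixel value from the design size to the target ghost size.
// Multiplying before dividing keeps simple cases such as 12 * 20 / 200 exact.
function scalePx(designValue, targetSize, designSize = DESIGN_SIZE) {
  return (designValue * targetSize) / designSize;
}

// Scales the eye measurements taken from the 200px CSS rules.
function scaleEye(targetSize) {
  return {
    border: scalePx(12, targetSize),          // border: 12px solid white
    size: scalePx(30, targetSize),            // width / height: 30px
    bottom: scalePx(100, targetSize),         // bottom: 100px
    leftEyeMargin: scalePx(50, targetSize),   // margin-left of ::before
    rightEyeMargin: scalePx(100, targetSize), // margin-left of ::after
  };
}

console.log(scaleEye(20));
// { border: 1.2, size: 3, bottom: 10, leftEyeMargin: 5, rightEyeMargin: 10 }
```

Browsers may round fractional pixel values such as a 1.2px border when rendering, so the scaled eyes can need a small manual adjustment at the 10px size.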

My other related articles

What Is “State” in JavaScript, and How to Use It in Your Projects

Mastering JavaScript Fundamentals: Unleashing Your Framework Readiness

Mastering JavaScript: The Power of forEach and Ternary Operators

JavaScript: Understanding the Set and Clear Interval Methods

Be sure to listen to the HTML All The Things Podcast!

📝 I also write articles for the HTML All The Things Podcast, which you can read on their website: https://www.htmlallthethings.com/.

Affiliate & Discount Links!

With CodeMonkey, learning can be all fun and games! CodeMonkey transforms education into an engaging experience, enabling children to evolve from tech consumers to creators. Use CodeMonkey's FREE trial to unlock the incredible potential of young tech creators!

With a structured learning path tailored for various age groups, kids progress from block coding to more advanced topics like data science and artificial intelligence, using languages such as CoffeeScript and Python. The platform includes features for parents and teachers to track progress, making integrating coding into home and classroom settings easy.

Through fun games, hands-on projects, and community interaction, CodeMonkey helps young learners build teamwork skills and receive recognition for their achievements. It fosters a love for coding and prepares children for future career opportunities in an ever-evolving tech landscape.

To learn more about CodeMonkey, you can read my detailed review article!

Affiliate Links:

Advance your career with a 20% discount on Scrimba Pro using this affiliate link!

Become a hireable developer with Scrimba Pro! Discover a world of coding knowledge with full access to all courses, hands-on projects, and a vibrant community. You can read my article to learn more about my exceptional experiences with Scrimba and how it helps many become confident, well-prepared web developers!

Important: This discount is for new accounts only. If a higher discount is currently available, it will be applied automatically.

How to Claim Your Discount:

1. Click the link to explore the new Scrimba 2.0.

2. Create a new account.

3. Upgrade to Pro; the 20% discount will automatically apply.

Disclosure: This article contains affiliate links. I will earn a commission from any purchases made through these links at no extra cost to you. Your support helps me continue creating valuable content. Thank you!

Conclusion

CSS pseudo-elements are great for styling projects without the need for additional HTML. With them, you can easily style a first letter or first line, list markers such as bullets, placeholder text, selected text, and more. I used the ::before and ::after pseudo-elements for enhanced styling in my project.

Additional benefits include improved maintainability from keeping the HTML clean and semantic, a smaller DOM because the decorations need no extra elements, responsive designs that adapt to various screen sizes, and dynamic visual effects through CSS transitions and animations.

In my Pac-Man game, I designed CSS classes that position the pseudo-element eyes so the ghosts look left, right, up, or down. Using JavaScript, I swap these classes based on each ghost's movement, which makes it seem like their eyes are looking in the direction they are moving.

Can you think of other unique styling ideas for your projects that utilize pseudo-elements? Experiment with them to unleash your creativity and transform your designs into something remarkable!

Let’s connect! I’m active on LinkedIn and Twitter.

Do you now feel confident in using CSS pseudo-elements to enhance your projects? Have you tried creating unique styles with them in your own work? Please share the article and comment!

Adam Savage's Tested: Advice on Weathering Today's Uncertain, Hostile Times

TL;DR:

Adam Savage says the best way to navigate these uncertain, often hostile times is with empathy, kindness and respect—values he wouldn’t change in his own past because every experience shaped who he is today.

He explains the “scarcity model” as the mindset that resources or opportunities are limited (which just fuels needless competition), and draws a clear line between gatekeeping—unfairly shutting people out—and asking folks to “pay their dues,” i.e. genuinely earn skills and prove competence before moving on to the next level.

Watch on YouTube

KEXP: Cory Hanson - Texas Weather (Live on KEXP)

Cory Hanson Brings “Texas Weather” to KEXP’s Studio

Cory Hanson delivers a scorching live version of “Texas Weather” at KEXP, recorded on August 4, 2025. Backed by Evan Backer on drums and Hazel Rigby on bass, Hanson handles guitar, piano, and vocals with that signature blend of raw energy and introspective flair.

Hosted by Jewel Loree, the session was engineered by Julian Martlew and Jon Roberts, mastered by Matt Ogaz, and captured on camera by Jim Beckmann, Carlos Cruz, Scott Holpainen, and Luke Knecht (with Scott Holpainen also editing). Check it out on KEXP.org or swing by Rosali Music’s site—and don’t forget to join the YouTube channel for extra perks!

Watch on YouTube

Rick Beato: Escape the Pentatonic Trap in One Lesson

Join today’s livestream to uncover the very scales top pros actually use—say goodbye to that overplayed pentatonic box and level up your fretboard knowledge.

Hurry on the Scale Matrix deal: get the full 3.5-hour system covering 25+ scales and modes for 50% off, but act fast—this early access price vanishes at midnight ET!

Watch on YouTube

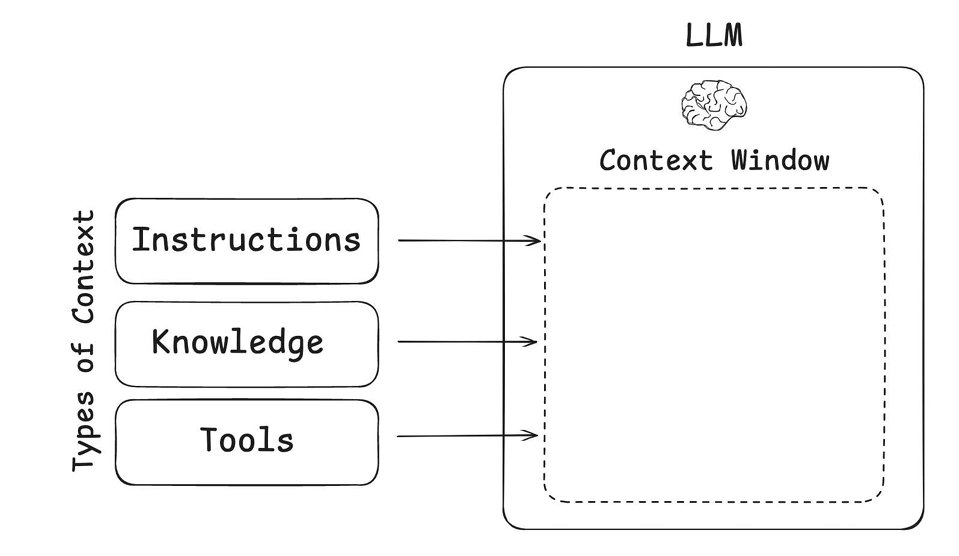

Sparse Models and the Future of Efficient AI

Modern AI has followed a simple rule for progress: bigger is better. Scaling up the number of parameters and training data has consistently led to performance gains. But this approach comes with steep costs in compute, energy, and accessibility. Sparse models represent a different path forward, one that prioritizes efficiency without sacrificing capability.

The principle is straightforward. Most parameters in a large neural network contribute little to a given task at any moment. Instead of activating every weight, sparse models selectively engage only the most relevant connections. This mimics the brain, where neurons fire sparsely rather than all at once.

Implementing sparsity can take several forms. Static sparsity involves pruning redundant weights after training, reducing memory and computation needs. Dynamic sparsity, on the other hand, selects a different subset of active weights on the fly for each input. Mixture-of-Experts (MoE) models go further by partitioning the network into multiple expert subnetworks, routing each input through only a small fraction of them. Google’s Switch Transformer is a prime example, achieving massive scale while keeping per-example computation manageable.
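To make two of these forms concrete, here is a toy sketch of magnitude pruning (a common way to get static sparsity) and of top-1 expert routing, the scheme the Switch Transformer uses. It is illustrative only: the function names are hypothetical, the "experts" are plain functions on a number, and the router logits are passed in directly, whereas a real model computes them from the input with a learned layer.

```javascript
// Static sparsity: keep roughly the largest `keepFraction` of weights by
// magnitude and zero the rest. Ties at the threshold are all kept.
function magnitudePrune(weights, keepFraction) {
  const keep = Math.max(1, Math.round(weights.length * keepFraction));
  const sorted = weights.map((w) => Math.abs(w)).sort((a, b) => b - a);
  const threshold = sorted[keep - 1];
  return weights.map((w) => (Math.abs(w) >= threshold ? w : 0));
}

// Numerically stable softmax over the router's scores.
function softmax(logits) {
  const max = Math.max(...logits);
  const exps = logits.map((x) => Math.exp(x - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Top-1 routing: pick the single expert with the highest probability.
function routeTop1(routerLogits) {
  const probs = softmax(routerLogits);
  let expert = 0;
  for (let i = 1; i < probs.length; i++) {
    if (probs[i] > probs[expert]) expert = i;
  }
  return { expert, gate: probs[expert] };
}

// Only the chosen expert runs; its output is scaled by the gate value.
function moeForward(x, routerLogits, experts) {
  const { expert, gate } = routeTop1(routerLogits);
  return gate * experts[expert](x);
}

console.log(magnitudePrune([0.1, -2, 0.05, 3], 0.5)); // [ 0, -2, 0, 3 ]
```

With N experts and top-1 routing, each input pays for one expert's computation regardless of N, which is why parameter count can grow much faster than per-example compute.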

The benefits are clear. Sparse models allow trillion-parameter architectures to be trained and deployed without proportional increases in compute. They also open possibilities for edge deployment, where hardware constraints make dense models impractical. By lowering the energy and hardware demands of AI, sparsity has the potential to democratize access to powerful systems.

Challenges remain in optimizing hardware and software for sparse computation. GPUs are built for dense matrix multiplications, and sparse operations often underutilize them. New accelerators and libraries are being developed to exploit sparsity more effectively. Ensuring that pruning or routing does not harm accuracy is another ongoing area of research.

Sparsity offers a vision where AI continues to grow more powerful without growing unsustainable. If dense scaling defined the last decade of AI, sparse scaling may define the next.

References

https://arxiv.org/abs/2007.03085

https://arxiv.org/abs/2101.03961

https://arxiv.org/abs/2209.10655

Building Web Apps & Exploring Research – About Sain Bux

I’m Sain Bux, a Full Stack Developer passionate about building scalable web apps and exploring research in AI, APIs, and emerging tech.

👨‍💻 What I Do

Develop with WordPress, React, PHP, and MySQL

Work on themes, plugins, and custom web solutions

Contribute to research projects, including publications on Zenodo

✨ What I’m Learning

Next.js & TypeScript for modern front-end development

Tailwind CSS for faster UI design

Basics of AI & ML to connect research with real-world applications

⚡ What I’m Currently Hacking On

New WordPress themes & plugins with better UX

API-driven projects integrating open data

🌐 Portfolio → sainbux.github.io

🤝 Open To

Collaborations on web projects

Research discussions in AI & APIs

Networking with devs, researchers, and startups

Thanks for reading! Looking forward to connecting with this awesome community 💡

I had fun making this video :) If anyone wants to shoot me ideas for future Defang videos, I'm all ears!

Struggling to figure out what to learn next in tech?

Check out roadmap.sh

Visual roadmaps for any role, from Frontend to AI Engineer.

Plan your path, track your progress, and never get lost in tutorials again!

AI Drives PCIe Aspirations

The packet transfer requirements of AI will keep pushing PCIe to double the data rate every three years.

The post AI Drives PCIe Aspirations appeared first on EE Times.

Cybersecurity Expectations Peak as GenAI Lands in the Trough

CSCOs, navigating an increasingly complex digital landscape, are facing stark contrasts in technology maturity.

The post Cybersecurity Expectations Peak as GenAI Lands in the Trough appeared first on EE Times.

AI Applications, Innovation Empowered! The Fourth GMIF2025 Innovation Summit Successfully Concluded

Co-hosted by the Shenzhen Memory Industry Association and the School of Integrated Circuits, Peking University, and organized by JWinsights (Shanghai) Technology Co., Ltd., the Fourth GMIF2025 Innovation Summit (Global Memory Innovation Forum) was successfully concluded on September 25, 2025. Under the theme “AI Applications, Innovation Empowered”, the GMIF2025 brought together leading representatives from across the […]

The post AI Applications, Innovation Empowered! The Fourth GMIF2025 Innovation Summit Successfully Concluded appeared first on EE Times.

Drones are a Key Part of the Smart Farming Revolution

Drones have become an indispensable tool in modern agriculture, supporting food security and sustainable farming practices for a growing global population.

The post Drones are a Key Part of the Smart Farming Revolution appeared first on EE Times.

YouTuber reports battery swelling caused Galaxy Ring to get stuck on his finger

Sometimes when a device fails, it fails in spectacular fashion. Daniel Rotar, who leads the ZONEofTECH YouTube channel, posted on X today that the battery on his Samsung Galaxy Ring began swelling while he was wearing it. "I cannot take it off and this thing hurts," he wrote. A few hours later, Rotar posted again that he had gone to a hospital and received help to remove the smart ring. He also shared photos of the device that showed the swelling on the interior surface.

Update:

- I was denied boarding due to this (been travelling for ~47h straight so this is really nice 🙃). Need to pay for a hotel for the night now and get back home tomorrow👌

- was sent to the hospital, as an emergency

- ring got removed

You can see the battery all… https://t.co/SRPfYI92Zg pic.twitter.com/ob8uUp5BeW

— Daniel (@ZONEofTECH) September 29, 2025

We reached out to Samsung for comment and received this statement from a spokesperson: "The safety of our customers is a top priority and we are in direct contact with this user to learn more about their concerns. While experiences like this are extremely rare, there are a few ways to attempt removing a stuck ring, including soap and water – or submerging your hand in cold water. If these methods do not work, additional information is provided on our Samsung Support page."

Current technologies aren't perfect and a device's battery can fail. When that happens, trapped gasses and heat can cause the battery to swell. It's not a common problem, but it is a known one. There's even a whole Reddit community dedicated to the phenomenon of these "spicy pillows." So here’s a recurring reminder that if you’ve got old gadgets you aren’t using, consider recycling them before they potentially reach this sort of fail state.

This article originally appeared on Engadget at https://www.engadget.com/wearables/youtuber-reports-battery-swelling-caused-galaxy-ring-to-get-stuck-on-his-finger-223749424.html?src=rss

How to follow Amazon's big hardware event tomorrow

Tomorrow, Amazon will host an event in New York City to unveil some new hardware. The showcase kicks off on September 30 at 10AM ET. Based on what we can see in the invitation and the most common cadence of product news, we are expecting to hear about developments to the Echo line of smart speakers, at least one new addition to the Kindle collection and an update for the Fire TV.

Normally this is where we'd have an embed of a YouTube link so you could watch along on Tuesday, but this event is a little unusual in that Amazon doesn't provide a stream for those of us at home. However, Engadget will have reporters at the event, and they will be liveblogging all the details as Amazon announces them. The liveblog will be up and running around 8AM ET.

In past years, this fall showcase has also been when Amazon shares news from the Ring, Blink and Eero companies, so we might have more than just its own-brand products being discussed tomorrow. And with AI the buzzword of the day, there's also a good chance that Amazon execs will spend some time discussing the Alexa+ voice assistant.

This article originally appeared on Engadget at https://www.engadget.com/how-to-follow-amazons-big-hardware-event-tomorrow-211105336.html?src=rss

OpenAI will reportedly release a TikTok-like social app alongside Sora 2

In a development that should surprise no one, OpenAI is preparing to release a standalone social app powered by its upcoming Sora 2 video model, Wired reports. The app reportedly "closely resembles" TikTok, with a vertical video feed and swipe-to-scroll navigation. The catch? It will only feature AI-generated content; there's apparently no option for the user to upload photos or videos from their phone's camera roll.

Wired adds OpenAI will limit Sora 2 to generating clips that are 10 seconds long or shorter for use inside of the app. It's unclear what the model's limit will be outside of the app. TikTok, following an original limit of 15 seconds, allows people to upload clips that are up to 10 minutes long. The new app is also said to include an identity verification tool. Should a user take advantage of the feature, Sora 2 will be able to use their likeness in videos it generates. In turn, that means other people will be able to tag those users and use their likeness when they go to remix one of their videos. As a safety precaution, OpenAI will push a notification to users whenever their likeness is used by someone else, even in situations where someone makes a video but never posts it to the app's feed.

According to Wired, the software will refuse to generate some videos due to copyright restrictions. However, it's not clear just how robust these protections will be, with The Wall Street Journal reporting OpenAI will require rights holders to opt out of their content appearing in videos Sora 2 generates.

As for why OpenAI would release a social media app, Wired suggests the company saw an opportunity after President Trump repeatedly extended the deadline for ByteDance to bring TikTok's US business under American control. By adding a social component to Sora, OpenAI may also be hoping to dissuade people from trying other models since leaving its new app would mean abandoning whatever community forms around it.

This article originally appeared on Engadget at https://www.engadget.com/ai/openai-will-reportedly-release-a-tiktok-like-social-app-alongside-sora-2-205842527.html?src=rss

Disney reportedly lost 1.7 million paid subscribers in the week after suspending Kimmel

Reporter Marisa Kabas, founder of The Handbasket, posted on Bluesky today that more than 1.7 million subscribers canceled their paid Disney streaming plans between September 17 and September 23. The total allegedly includes subscriptions to Disney+, Hulu and ESPN. That falloff reportedly marked a 436 percent increase over the usual churn rate for the service. We’ve reached out to Disney+ for comment on this claim.

Disney also chose to increase subscription prices last week, which could prolong the wave of cancellations. Kabas' source also claimed that Disney sped the return of the late night show because it had planned to announce the price hike last Tuesday.

In case you need a reminder of the entertainment news that dominated headlines for the past two weeks, Disney-owned ABC suspended the late night talk show Jimmy Kimmel Live on September 17 after President Donald Trump and members of his administration accused the host of making inappropriate comments regarding the assassination of right-wing influencer Charlie Kirk. Although Disney then announced on September 22 that the program would be reinstated the following night, it seems a lot of viewers were not impressed with the company's actions.

This article originally appeared on Engadget at https://www.engadget.com/entertainment/streaming/disney-reportedly-lost-17-million-paid-subscribers-in-the-week-after-suspending-kimmel-201615937.html?src=rss

What to expect from Amazon's event on Tuesday, September 30

Apple isn't the only big tech company that's launching hardware this month. Amazon is having its fall hardware event on Tuesday, September 30 at 10AM ET in New York City, and — as it does most years — the company is expected to debut new devices across its myriad product categories. While the event will not be livestreamed, Engadget staff will be in attendance and liveblogging it in real-time. Our pre-show coverage will begin around 8AM ET.

So what will Amazon be sharing? Using the images in the invitation that went out to members of the press as a jumping-off point, here are our best educated guesses as to what we can expect.

In February, Amazon introduced some improvements to its voice assistant, promising that Alexa+ would be more conversational and more helpful thanks to generative AI. It seems likely that the fall event will put hardware for interacting with Alexa+ front and center, so expect to hear about new models or new software updates for the Echo line of smart speakers. It's been a couple years since the Echo Show got an update, and it's been even longer for the standard Echo. Those two products are the most likely to star in the upcoming announcements.

The invite also includes a photo of a Kindle that depicts a color illustration, so there may be more in store for the ereaders. The Kindle Scribe 2 just came out earlier this year, as did the Kindle Colorsoft, so maybe there's a new option in the works that combines the best features of both. As with the Echo, there also hasn't been much new for the basic model Kindle, so that may also be getting some updates.

There's also what appears to be the corner of a television in the invitation, so news about the Fire TV also seems promising. This event is also where Amazon-owned Ring and Eero brands may unveil new products. Any announced items may not be available right away, but the timing does set the stage for some substantial discounts on older generations of Amazon gear. In fact, many of Amazon's current devices are already on sale — including Blink, Kindle and Ring — as more early October Prime Day deals pop up ahead of the sale's official start on October 7.

Update, September 29, 2025, 3:20PM ET: This story has been updated to note Engadget's liveblog plans for the Amazon event.

This article originally appeared on Engadget at https://www.engadget.com/big-tech/what-to-expect-from-amazons-event-on-tuesday-september-30-210035284.html?src=rss

Meta is bringing new facial recognition tools to the UK, EU and South Korea

Meta is expanding its use of facial recognition in Europe, the UK and South Korea to crack down on accounts that impersonate public figures. The new facial recognition-powered safety features are now live on Facebook in the regions and will expand to Instagram in the coming months.

The technology was initially put to use last year starting in the US, helping to identify ads that fraudulently use a celebrity's likeness as well as to help people regain access to hacked accounts. Public figures opt in to this program in Europe, which is also being rolled out in South Korea alongside the new protections against impersonation. This new use case is aimed at scammers who pose as public figures to trick unsuspecting users into sending money or other scams of that nature.

"We’ll now use facial recognition technology to compare the profile picture on the suspicious account to the real public figure’s Facebook and Instagram profile pictures. If there’s a match, we will remove the impostor account," said a Meta spokesperson.

In addition to the US rollout, the company’s facial recognition technology has been used to aid account recovery in the UK, EU and South Korea since March. This came three years after the company decided to shut down its facial recognition system on Facebook, due in large part to public backlash against the technology.

The social media giant touts the benefits of these tools, reporting that in the first half of 2025, user reports of "celebrity bait" ads dropped by 22 percent globally. Facial recognition remains a controversial technology, with differing public opinion on its use in law enforcement and the workplace.

This article originally appeared on Engadget at https://www.engadget.com/big-tech/meta-is-bringing-new-facial-recognition-tools-to-the-uk-eu-and-south-korea-185303065.html?src=rss

Tile trackers reportedly have a security flaw that can let stalkers track your location

Researchers have discovered major security flaws with Tile tracking tags, according to a report by Wired. These flaws could allow both the company itself and tech-savvy stalkers to track a user's location. The security issue could also let a malicious actor falsely frame a Tile owner for stalking, as the flaw can make it appear as if a particular tag is constantly in the vicinity of somebody else's tag.

The issue pertains to how Tile tags transmit data during use. Tile tags transmit a lot of data beyond that of other trackers, including the static MAC address and the rotating ID. According to reporting, none of this stuff is encrypted. The rotating ID changes all of the time, but a MAC address doesn't.
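To see why a rotating ID offers little protection when a static MAC address travels with it, here is a minimal sketch. The observation tuples, field layout and the `link_by_mac` helper are all hypothetical, invented for illustration; this is not Tile's actual packet format.

```python
from collections import defaultdict

# Hypothetical sniffed broadcasts: (timestamp, mac_address, rotating_id, location).
# Every value here is made up for illustration.
observations = [
    (1000, "AA:BB:CC:11:22:33", "id-7f3a", "cafe"),
    (1900, "AA:BB:CC:11:22:33", "id-c210", "office"),
    (1950, "DE:AD:BE:EF:00:01", "id-91bd", "office"),
    (2800, "AA:BB:CC:11:22:33", "id-04e9", "gym"),
]

def link_by_mac(obs):
    """Group sightings by the static MAC address, ignoring the rotating ID."""
    trails = defaultdict(list)
    for timestamp, mac, _rotating_id, location in sorted(obs):
        trails[mac].append((timestamp, location))
    return dict(trails)

trails = link_by_mac(observations)
print(trails["AA:BB:CC:11:22:33"])
```

Even though the rotating ID differs in every sighting, the unchanged MAC address is enough to stitch the sightings into a single movement trail.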

Researchers believe that all of this information is stored in cleartext, making it easy for hackers to get ahold of. This also would theoretically give Tile itself the ability to track its users, though the company says it doesn't have this capability.

It gets worse. Anyone with a radio frequency scanner can allegedly intercept all of this information as it's being transmitted, creating another potential security hole. Also, this problem might not even be solved if Tile decides to stop transmitting the MAC address. This is because the company generates its rotating ID in such a way that future codes can be reliably predicted from past ones.
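The report does not describe how Tile actually derives its rotating IDs, so the following is only a toy model of the general weakness: if each new ID is computed from the previous one with no secret involved, anyone who captures a single ID can compute every later one. The `next_id` rule below is an assumption chosen for illustration, not Tile's algorithm.

```python
import hashlib

def next_id(current_id: bytes) -> bytes:
    """Toy rotation rule (an assumption, not Tile's scheme): hash the previous ID."""
    return hashlib.sha256(current_id).digest()[:8]

def id_sequence(start: bytes, count: int) -> list[bytes]:
    """Return `count` IDs, beginning with `start` and applying next_id repeatedly."""
    ids = [start]
    for _ in range(count - 1):
        ids.append(next_id(ids[-1]))
    return ids

# The device rotates through five IDs over time.
device_ids = id_sequence(b"\x01" * 8, 5)

# An eavesdropper records only the second broadcast...
captured = device_ids[1]

# ...and can reproduce every ID the device will use afterwards.
predicted = id_sequence(captured, 4)
print(predicted == device_ids[1:])
```

A scheme that mixes in a secret key known only to the tag and its owner would not have this property, since an observer could not compute the next value from the last one alone.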

"An attacker only needs to record one message from the device," one of the researchers behind the findings said, adding that a single recorded message will "fingerprint it for the rest of its lifetime." The researcher said this creates a risk of systemic surveillance.

The security researchers, who are involved with the Georgia Institute of Technology, reached out to Tile's parent company Life360 in November of last year to report the findings. Wired said the company stopped communicating with the researchers in February. The company did say it has made a number of improvements to its security but didn't elaborate further.

This article originally appeared on Engadget at https://www.engadget.com/big-tech/tile-trackers-reportedly-have-a-security-flaw-that-can-let-stalkers-track-your-location-183949165.html?src=rss

Claude Sonnet 4.5 is Anthropic's safest AI model yet

In May, Anthropic announced two new AI systems, Opus 4 and Sonnet 4. Now, less than six months later, the company is introducing Sonnet 4.5, and calling it the best coding model in the world to date. Anthropic's basis for that claim is a selection of benchmarks where the new AI outperforms not only its predecessor but also the more expensive Opus 4.1 and competing systems, including Google's Gemini 2.5 Pro and GPT-5 from OpenAI. For instance, in OSWorld, a suite that tests AI models on real-world computer tasks, Sonnet 4.5 set a record score of 61.4 percent, putting it 17 percentage points above Opus 4.1.

At the same time, the new model is capable of autonomously working on multi-step projects for more than 30 hours, a significant improvement from the seven or so hours Opus 4 could maintain at launch. That's an important milestone for the type of agentic systems Anthropic wants to build.

Perhaps more importantly, the company claims Sonnet 4.5 is its safest AI system to date, with the model having undergone "extensive" safety training. That training translates to a chatbot Anthropic says is "substantially" less prone to "sycophancy, deception, power-seeking and the tendency to encourage delusional thinking" — all potential model traits that have landed OpenAI in hot water in recent months. At the same time, Anthropic has strengthened Sonnet 4.5's protections against prompt injection attacks. Due to the sophistication of the new model, Anthropic is releasing Sonnet 4.5 under its AI Safety Level 3 framework, meaning it comes with filters designed to prevent potentially dangerous outputs related to prompts around chemical, biological and nuclear weapons.

With today's announcement, Anthropic is also rolling out quality-of-life improvements across the Claude product stack. To start, Claude Code, the company's popular coding agent, has a refreshed terminal interface, with a new feature called checkpoints included. As you can probably guess from the name, they allow you to save your progress and roll back to a previous state if Claude writes some funky code that isn't quite working like you imagined it would. File creation, which Anthropic began rolling out at the start of the month, is now available to all Pro users, and if you joined the waitlist for Claude for Chrome, you can start using the extension today.

API pricing for Sonnet 4.5 remains at $3 per one million input tokens and $15 for the same amount of output tokens. The release of Sonnet 4.5 caps off a strong September for Anthropic. Just one day after Microsoft added Claude models to Microsoft 365 Copilot last week, OpenAI admitted its rival offers the best AI for work-related tasks.
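For a rough sense of what those rates mean per request, the arithmetic can be sketched as below. The token counts in the example are made up, and the helper considers only the two per-token rates quoted above, ignoring any other pricing factors.

```python
# Rates quoted in the article, in US dollars per one million tokens.
INPUT_USD_PER_MILLION = 3.00
OUTPUT_USD_PER_MILLION = 15.00

def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request from its input and output token counts."""
    total = (input_tokens * INPUT_USD_PER_MILLION
             + output_tokens * OUTPUT_USD_PER_MILLION)
    return total / 1_000_000

# Hypothetical workload: 200,000 input tokens and 50,000 output tokens.
cost = estimate_cost(200_000, 50_000)
print(f"${cost:.2f}")  # $0.60 input + $0.75 output = $1.35
```

Because output tokens cost five times as much as input tokens, long generated responses dominate the bill even when the prompt is much larger.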

This article originally appeared on Engadget at https://www.engadget.com/claude-sonnet-45-is-anthropics-safest-ai-model-yet-170000161.html?src=rss

This Roomba robot vacuum is on sale for only $150 ahead of Prime Day

The iRobot Roomba 104 robot vacuum is on sale for $150 just ahead of October's Prime Day. That's a nice little discount of 40 percent, which represents a savings of $100.

This is a newer version of the unit that topped our list of the best budget robot vacuums. It's an entry-level robovac that gets the job done. The cleaning motor is fairly powerful and it ships with a multi-surface brush and an edge-sweeping brush. The vacuum uses LiDAR to map a home and to help it avoid obstacles when cleaning.

It's also been equipped with specialized sensors to prevent falling down stairs. Steps are the natural enemy of all robot vacuums, except maybe this one. The Roomba 104 integrates with the company's proprietary app, which allows for custom cleaning schedules and the like. The robot can also be controlled via voice assistant and boasts compatibility with Siri, Alexa and Google Assistant.

The vacuum will automatically head to the charger for some juice when running low, which is nice. The battery lasts around 200 minutes per charge, which is a decent enough metric for a budget-friendly robovac. The only downside here? This is just a vacuum. It doesn't mop and it doesn't come with a dedicated debris canister.

This article originally appeared on Engadget at https://www.engadget.com/deals/this-roomba-robot-vacuum-is-on-sale-for-only-150-ahead-of-prime-day-164953406.html?src=rss

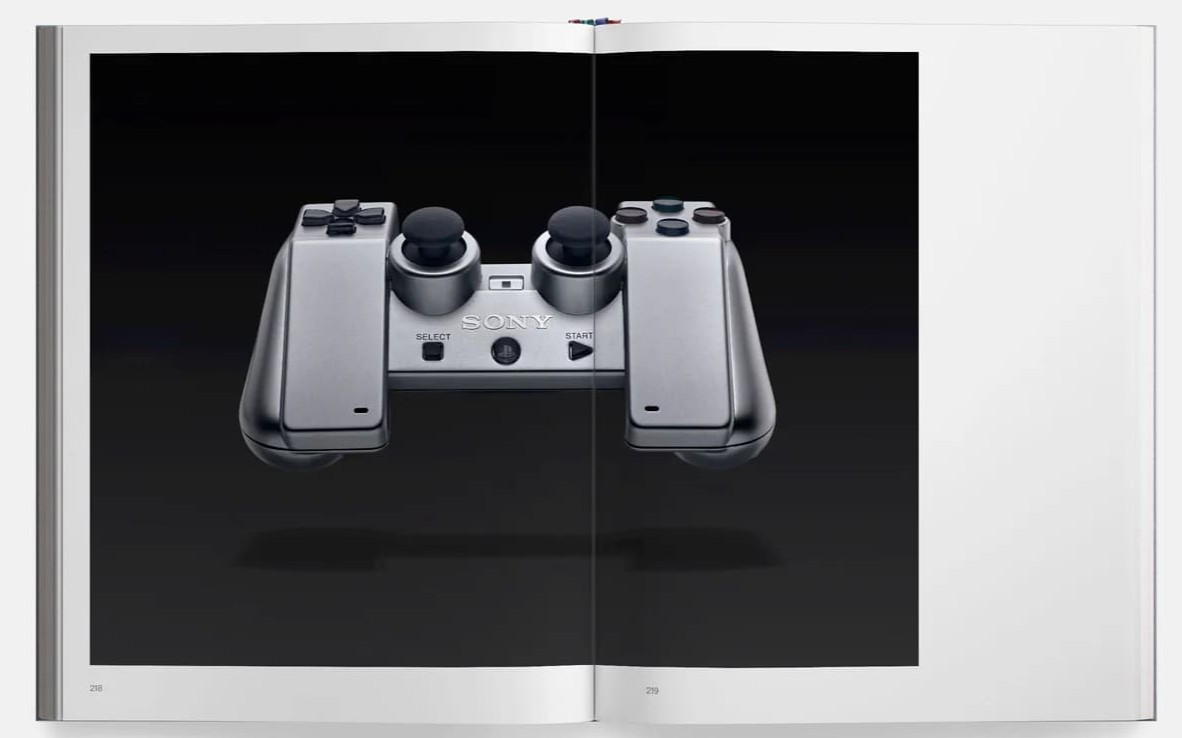

A PlayStation photography book featuring never-before-seen design concepts is on the way

Sony has been marking the 30th anniversary of PlayStation by selling you stuff, like PS5 consoles and accessories styled after the PS1. The company has something else lined up to mark the occasion: a photography book showcasing "never-before-seen prototypes, concept sketches and design models that shaped hardware development" from the early days through to the current PS5 era.

PlayStation: The First 30 Years is a 400-page hardback book printed on heavyweight matt art stock. You'd better hope your coffee table is sturdy, since this book is a chonkster. It weighs in at 5kg (11lbs).

Sony worked with publisher Read-Only Memory on PlayStation: The First 30 Years. The collaborators have offered a peek at what's inside the book. It's shaping up to be a fascinating glimpse at some of the designs Sony tried for its hardware over the last few decades.

For instance, the original PlayStation could have looked much different, more directly exemplifying designer Teiyu Goto's "vision of simple squares and circles coming to life." (For what it's worth, Engadget deputy editor Nathan Ingraham said this design looked like a proton pack.)

Some of the controller concepts are pretty out there too. Some don't look all that comfortable to hold or use for extended gaming sessions. One of them, which appears to be for the PS3 at the earliest given the inclusion of the PS button, is truly bonkers. Thank goodness Goto landed on the SNES-style design with grips that has proven so successful (and comfortable) over the years.

The book isn't entirely limited to hardware concepts, as it features photos of Sony's design labs. The tome seems like a very cool item for PlayStation fans and those who love gaming history to have, but there might be an element of sticker shock.

The book is available via Read-Only Memory's website for $182. A deluxe edition with exposed binding, a foil-stamped clamshell presentation box and a photographic print signed by Goto and photographer Benedict Redgrove will run you $467. The fancier edition has a limited run of 1994 copies. Coincidentally, that's the year the PS1 debuted in Japan. Both editions of the book will ship in spring 2026.

Meanwhile, Sony has teamed up with Reebok for a collection of 30th anniversary sneakers styled after — you guessed it — the PS1. The kicks will be available in October and the three designs are linked to the PS1's launch regions. They include the InstaPump Fury 94 for Japan, Pump Omni Zone II for the US and Workout Plus for the UK.

This article originally appeared on Engadget at https://www.engadget.com/gaming/playstation/a-playstation-photography-book-featuring-never-before-seen-design-concepts-is-on-the-way-164859020.html?src=rss

Microsoft is trying to make 'vibe working' a thing

Microsoft is taking inspiration from the AI-driven workflows of "vibe coding" and has now set out to make "vibe working" a thing (yes, those are the words the company chose). Does AI in the workplace even lead to worthwhile outputs? Does it mortgage our brains' ability to learn? There are many seemingly critical questions left unanswered. But in the meantime, sure: vibe working it is.

Using Agent Mode within Office apps or Office Agent in Copilot chat, users can begin a document with a single prompt and then work iteratively alongside Copilot to develop a finished product. Microsoft says this is the "new pattern of work for human-agent collaboration." Agent Mode supports Excel and Word workflows, and Microsoft says PowerPoint support is coming soon; Office Agent works with PowerPoint and Word, with Excel coming soon.

The company waxes poetic about the "full power of Excel" being available only to expert users and promises that an Agent Mode that can "speak Excel" will change all that. In data shared as part of the announcement, Microsoft said that Copilot Agent Mode in Excel achieved 57.2 percent accuracy on the SpreadsheetBench benchmark. This is compared to a 71.3 percent human score, though it's not clear if that's for average users, Excel power users or how many human users that score is derived from. Still — not great numbers!

Agent Mode also works in Word to summarize, edit and of course help to create entire drafts (though it's unclear what those relative accuracy rates are). Both the Excel and Word Agent Modes are powered by OpenAI's latest models. Office Agent in Copilot chat is powered by Anthropic models and can create PowerPoint presentations and Word documents in what Microsoft calls a "chat-first experience."

Agent Mode for Excel and Word, as well as Office Agent, are available today through the Frontier program. Agent Mode is currently limited to the web-based versions of Word and Excel and is coming to desktop soon.

This article originally appeared on Engadget at https://www.engadget.com/ai/microsoft-is-trying-to-make-vibe-working-a-thing-163334367.html?src=rss

FCC accidentally leaked iPhone schematics, potentially giving rivals a peek at company secrets

The Federal Communications Commission (FCC) recently published a 163-page PDF showing the electrical schematics for the iPhone 16e, despite Apple specifically requesting that they be kept confidential. This was most likely a mistake on the part of the FCC, according to a report by AppleInsider.

The agency also distributed a cover letter from Apple alongside the schematics, which is dated September 16, 2024. This letter verifies the company's request for privacy, indicating that the documents contain "confidential and proprietary trade secrets." The cover letter asks for the documents to be withheld from public view "indefinitely." Apple even suggested that a release of the files could give competitors an "unfair advantage."

The leaked documents contain full schematics of the iPhone 16e, including block diagrams, electrical schematic diagrams, antenna locations and more. Competitors could simply buy a handset and open it up to get this information, as the iPhone 16e came out back in February, but the leak would eliminate any guesswork. However, Apple is an extremely litigious company when it comes to matters like patent infringement.