BLOG POST

AI Daily

30 years later, I’m still obliterating planets in Master of Orion II—and you can, too

There's an unparalleled purity to MOO2's commitment to the fantasy.

150 million-year-old pterosaur cold case has finally been solved

The storm literally snapped the bones in their wings.

More people are using AI in court, not a lawyer. It could cost you money – and your case

Researchers found more than 80 cases of generative AI use in Australian courts so far – mostly by people representing themselves. That comes with serious risks.

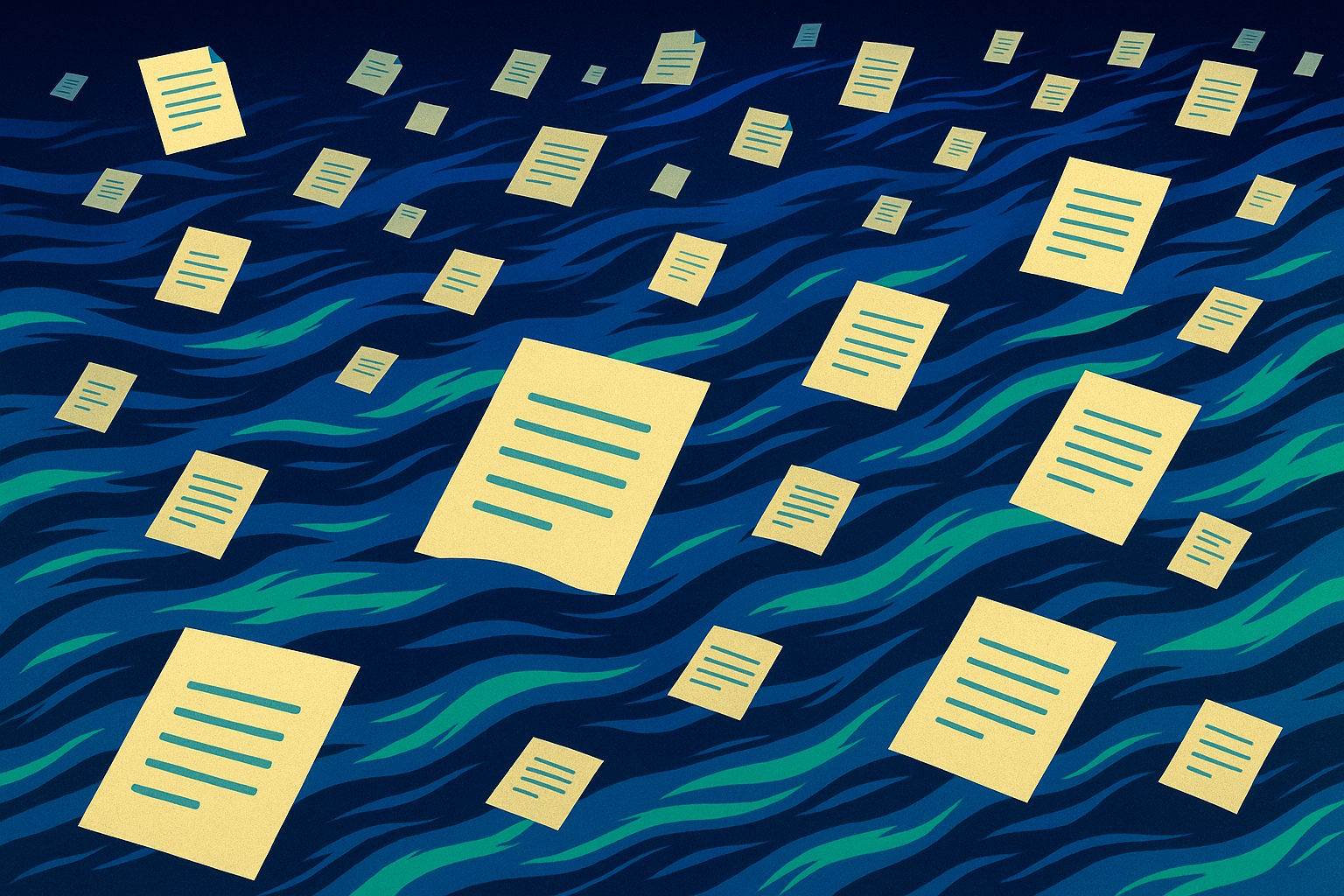

Generative AI might end up being worthless — and that could be a good thing

GenAI does some neat, helpful things, but it’s not yet the engine of a new economy — and it might not ever be.

The Guardian view on AI and jobs: the tech revolution should be for the many not the few | Editorial

Britain risks devolving its digital destiny to Silicon Valley. As a TUC manifesto argues, those affected must have a greater say in shaping the workplace of the future

In The Making of the English Working Class, the leftwing historian EP Thompson made a point of challenging the condescension of history towards luddism, the original anti-tech movement. The early 19th-century croppers and weavers who rebelled against new technologies should not be written off as “blindly resisting machinery”, wrote Thompson in his classic history. They were opposing a laissez-faire logic that dismissed its disastrous impact on their lives.

A distinction worth bearing in mind as Britain rolls out the red carpet for US big tech, thereby outsourcing a modern industrial revolution still in its infancy. Photographers, coders and writers, for example, would sympathise with the powerlessness felt by working people who saw customary protections swept away in a search for enhanced productivity and profit. Unlicensed use of their creative labour to train generative AI has delivered vast revenues to Silicon Valley while rendering their livelihoods increasingly precarious.

‘To them, ageing is a technical problem that can, and will, be fixed’: how the rich and powerful plan to live for ever

When Xi Jinping and Vladimir Putin were caught on mic talking about living for ever, it seemed straight out of a sci-fi fantasy. But for some death is no longer considered an inevitability …

Imagine you’re the leader of one of the most powerful nations in the world. You have everything you could want at your disposal: power, influence, money. But, the problem is, your time at the top is fleeting. I’m not talking about the prospect of a coup or a revolution, or even a democratic election: I’m talking about the thing even more certain in life than taxes. I’m talking about death.

In early September, China’s Xi Jinping and Russia’s Vladimir Putin were caught on mic talking about strategies to stay young. “With the development of biotechnology, human organs can be continuously transplanted, and people can live younger and younger, and even achieve immortality,” Putin said via an interpreter to Xi. “There’s a chance,” he continued, “of also living to 150 [years old].” But is this even possible, and what would it mean for the world if the people with power were able to live for ever?

NBA Coach JJ Redick Says He Spends Hours Talking to His “Friend” ChatGPT

"I'm the type of person who, y'know, spends an hour and a half going down a deep, deep rabbit hole on ChatGPT."

The post NBA Coach JJ Redick Says He Spends Hours Talking to His “Friend” ChatGPT appeared first on Futurism.

OpenAI’s New Data Centers Will Draw More Power Than the Entirety of New York City, Sam Altman Says

"Ten gigawatts is more than the peak power demand in Switzerland or Portugal."


Elon Musk Is Fuming That Workers Keep Ditching His Company for OpenAI

His blood feud with Sam Altman rages on.


Residents Shut Down Google Data Center Before It Can Be Built

Google Fail


AI Coding Is Massively Overhyped, Report Finds

"The results haven’t lived up to the hype."


SAP Exec: Get Ready to Be Fired Because of AI

"I will be brutal."


First Responders Are Being Overwhelmed by Data Center Fires

"We're not a huge fan of the data centers."


Quantum chips just proved they’re ready for the real world

Diraq has shown that its silicon-based quantum chips can maintain world-class accuracy even when mass-produced in semiconductor foundries. Achieving over 99% fidelity in two-qubit operations, the breakthrough clears a major hurdle toward utility-scale quantum computing. Silicon’s compatibility with existing chipmaking processes means building powerful quantum processors could become both cost-effective and scalable.

BYD Brings Price War to Japan in Bid to Win Over Customers

More than two years after BYD Co.’s high-profile foray into the Japanese market, the Chinese electric vehicle maker is still struggling to win over drivers.

EA Buyout Talk Highlights Gaming Struggles as Growth Slows

The gaming market has matured and analysts see slower growth moving forward

Apple’s ChatGPT-Style Chatbot App Deserves a Public Release

Apple should release its internal ChatGPT-like app publicly to give its revamped AI system more credibility. Also: New MacBooks and external Mac monitors get closer; more on the iPhone 17 Pro’s “scratchgate” controversy; and Tim Cook’s latest memo to employees.

I don't tell my kids I'll miss them when I travel without them. It's the truth.

I love my kids but also enjoy time without them. I'm a better parent when I come back because I'm reminded of who I am besides mom.

Eric Adams drops out of the New York mayor's race weeks ahead of the election

Adams announced his departure on Sunday in an eight-minute video on X, saying media speculation and lack of funding influenced his decision.

Ex-Twitch CEO's advice for leaders: Don't over-delegate or forget you can override your experts

Ex-Twitch CEO Emmett Shear said a CEO's job is not only to delegate but also to discern: "Is this the kind of decision that we have to get right?"

I've stayed in all kinds of places across 100 countries, but there's still one type of accommodation I never book

During my travels to over 100 countries, I've stayed in hotels, hostels, and unique spots. But there are reasons I never book all-inclusive resorts.

I made pumpkin bread with just 2 ingredients. It reminded me of my favorite Starbucks treat.

The recipe calls for just one box of spice cake mix and one 15-ounce can of 100% pumpkin purée.

My job offers little chance for career growth, but I'm sticking with it. It gives me the flexibility I need as a parent.

As a parent, I've had to choose between looking for a job that pays more or staying at a job that offers flexibility.

My son moved home because of the high cost of living and a low-paying, entry-level job. I never got to be an empty nester.

My son just graduated from college and landed a great entry-level job, but the pay isn't great. He decided to move back home to save money.

My family moved from Vancouver to Toronto for a few months to live with my parents. There have been pros and cons.

Living with my parents in Toronto for the summer rent-free has given them priceless time with their granddaughter. The pros have outweighed the cons.

I don't get the whole Costco craze

In this Sunday edition of Business Insider Today, we're talking about America's Costco craze. BI's Steve Russolillo doesn't get the hype.

I'm a morning show contributor, and my husband is a firefighter. My daughter's grandparents make our nontraditional careers work.

My daughter isn't "watched" while my husband and I are working; she's played with, cared for, and loved on a level that can't be described.

What will Charlie Javice's sentence be for her $175M defrauding of JPMorgan Chase? Much depends on the word 'loss.'

Charlie Javice says she deserves a low sentence and restitution because JPMC gained some value from its otherwise fraud-based purchase of Frank.

We're first-time hybrid homeschoolers. We receive a stipend and spend more time with our kids — being adaptable is what makes it work.

Hybrid homeschoolers Marcus and Hannah Ward save money and spend more time with family with charter school classes for their daughter.

I've worked in global banking for 25 years. These are the 6 most important pieces of financial advice I tell family and friends.

Racquel Oden, US head of wealth and private banking at HSBC, shares how to start saving immediately and prioritize investments over student loans.

I flew sitting in a windowless window seat, and was surprised to find it might be the best spot on the plane for a power nap

A windowless window seat might sound like one of the worst places on a plane, but I was surprised to find it made for a decent in-flight nap.

I've interned at IBM since high school. It's taught me 3 key lessons about building a career in tech.

Gogi Benny shares his experience in tech, living with neurofibromatosis, and advancing as an IBM intern after starting in high school.

Leading computer science professor says 'everybody' is struggling to get jobs: 'Something is happening in the industry'

UC Berkeley professor Hany Farid said the advice he gives students is different in the AI world.

I went to the Ryder Cup and calculated the eye-watering cost of spending a single day there

Between the $32 cocktail, the seemingly endless merch options, and the temptation of an Uber, the cost of attending the Ryder Cup can add up.

Welcome to the Great Silencing

CEOs were already cautious about speaking their minds. Now, they're becoming even more tight-lipped.

3 reasons the US can't count on wealthy Americans to keep the economy going strong

Wealthy Americans may not be able to power the economy with spending as much as some people think, BCA Research says.

Goldman's tech boss discusses the future of AI on Wall Street — and how it will reshape careers

Goldman Sachs' chief information officer, Marco Argenti, discusses his vision for AI and its impact on his 12,000-person engineering team.

Flip Samurai – Learn Anything with Flashcards

This is a submission for the KendoReact Free Components Challenge.

For the KendoReact Free Components Challenge, I built Flip Samurai, a flashcard learning app that helps you master any subject through spaced repetition.

👉 Live Demo: flipping-samurai.vercel.app

👉 Source Code: GitHub Repo

Even though I don’t have much frontend experience, building with KendoReact made the process surprisingly smooth. Its free components gave me everything I needed to create a polished, responsive, and accessible UI without getting stuck on small details.

- Collections – Group flashcards by topic.

- Folders – Organize collections into folders.

- Favorites – Mark your most important collections.

- AI-Generated Collections – Instantly create flashcards with AI (powered by Fastify + Google Gemini).

- Import/Export – Back up and share your study sets.

- Dashboard – Track progress, study sessions, and mastery levels.

- Cards to Review – Stay on top of spaced repetition.

All data is stored in LocalStorage on the frontend, making it simple and lightweight to use.
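The post does not say which spaced-repetition algorithm Flip Samurai uses, so here is a minimal sketch of one common approach, a Leitner-style box system, just to make the "Cards to Review" idea concrete. The `ReviewCard` shape, the interval table, and all function names are assumptions for illustration, not the app's actual code.

```typescript
// Hypothetical card shape; the post does not show the app's real data model.
interface ReviewCard {
  id: string;
  box: number; // Leitner box: 1 (new or forgotten) up to 5 (well known)
  dueAt: number; // next review time, in epoch milliseconds
}

const DAY_MS = 24 * 60 * 60 * 1000;
// Assumed review intervals, in days, for boxes 1 to 5.
const INTERVAL_DAYS = [1, 2, 4, 8, 16];

// A correct answer moves the card up one box; a miss sends it back to box 1.
// The next review is scheduled from the interval of the new box.
function reviewCard(card: ReviewCard, correct: boolean, now: number): ReviewCard {
  const box = correct ? Math.min(card.box + 1, INTERVAL_DAYS.length) : 1;
  const days = INTERVAL_DAYS[box - 1] ?? 1;
  return { ...card, box, dueAt: now + days * DAY_MS };
}

// Cards whose review time has arrived, most overdue first.
function cardsToReview(cards: ReviewCard[], now: number): ReviewCard[] {
  return cards.filter((c) => c.dueAt <= now).sort((a, b) => a.dueAt - b.dueAt);
}

// LocalStorage only holds strings, so cards would round-trip through JSON.
function serializeCards(cards: ReviewCard[]): string {
  return JSON.stringify(cards);
}

function deserializeCards(raw: string | null): ReviewCard[] {
  return raw ? (JSON.parse(raw) as ReviewCard[]) : [];
}

const fresh: ReviewCard = { id: "card-1", box: 1, dueAt: 0 };
const promoted = reviewCard(fresh, true, 0); // box 2, due in 2 days
const demoted = reviewCard(promoted, false, 1000); // back to box 1, due in 1 day
```

In a browser, the serialized string would be passed to `localStorage.setItem` and read back with `localStorage.getItem`, which returns `null` when nothing has been saved yet; that is why `deserializeCards` accepts `null`.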

- React + Vite + TypeScript

- KendoReact Free Components

- Bootstrap

- Fastify (backend for AI generation)

- Google Gemini API

I made heavy use of KendoReact’s free UI components to make the interface clean, fast, and user-friendly. Some of the key components include:

- Buttons (start studying, reviewing, AI generation, etc.)

- Dialogs (confirmation modals and AI generation flow)

- Notifications (success/error feedback)

- Cards & Layout (collection previews and dashboard)

- Inputs & Labels (create/edit collections and cards)

- Grid (statistics and progress overview)

- Indicators & ProgressBars (study progress)

- Tooltip, Skeleton, Dropdowns, ListBox, Popup, and SVG Icons for extra polish.

This variety of components really helped me design a smooth user experience quickly.

Manage and explore your flashcard collections.

Study cards with progress tracking.

Track your learning journey with detailed stats.

Group your collections with folders

I integrated AI-powered collection generation: users can input a topic, and the backend (Fastify + Gemini) generates a full flashcard set automatically. This feature saves time and makes the app more dynamic.

Even as someone with little frontend background, I felt productive and creative with KendoReact. The ready-to-use components removed a lot of friction and let me focus on building features, not fighting the UI.

👉 Try it here: flip-samurai.vercel.app

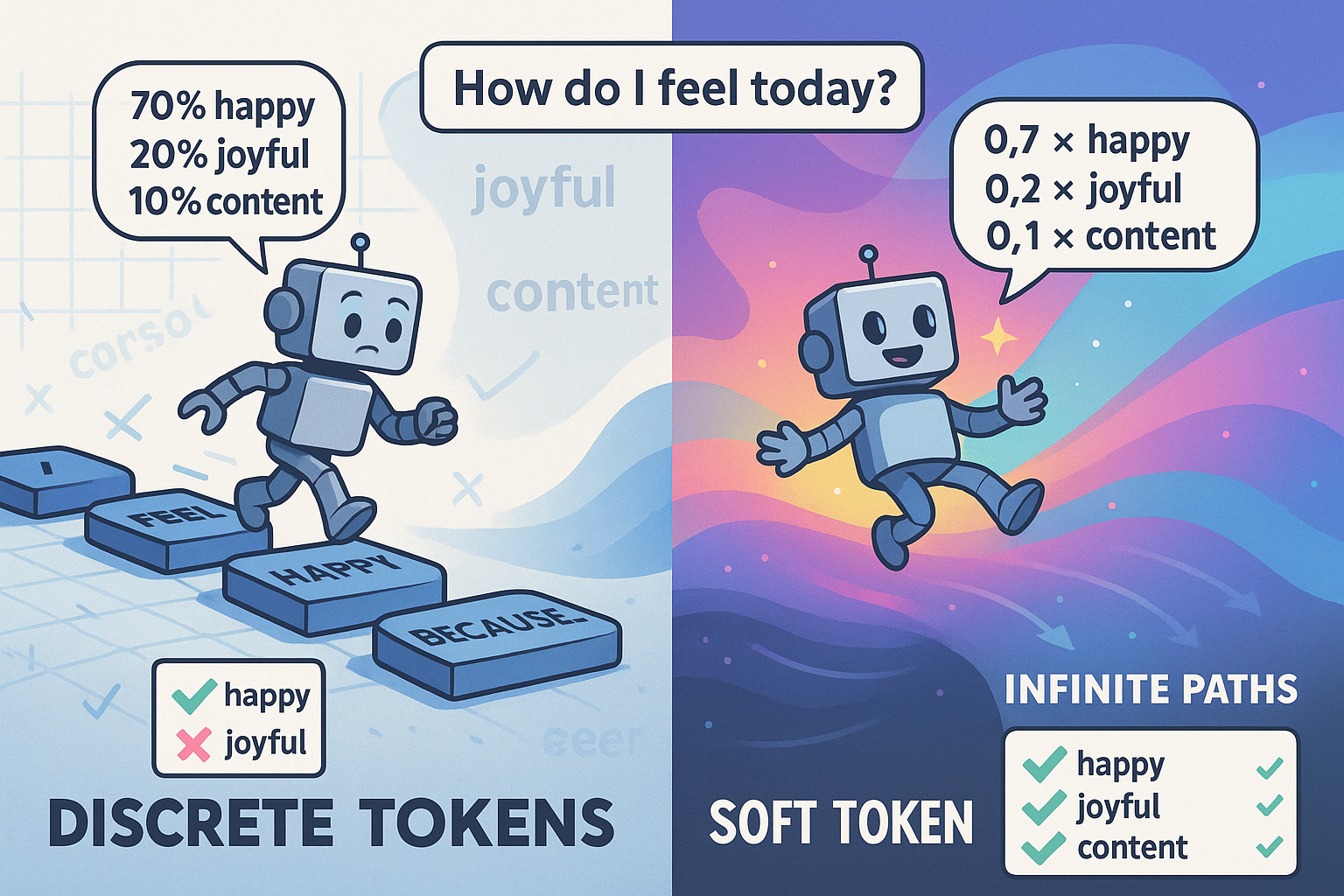

MLZC25-01. Introduction to Machine Learning: What Is It and Why Does It Matter?

When we hear "Machine Learning", we often think of intelligent robots or systems that seem to have a life of their own. But the reality is far more fascinating and accessible than we imagine.

Machine learning is a branch of artificial intelligence that lets computers learn and make decisions from data, without being explicitly programmed for each specific task.

Think of it this way: instead of writing thousands of lines of code so that a computer can recognize a cat in a photo, we show it thousands of photos of cats and other animals, and the computer "learns" on its own to tell them apart.

In our everyday lives:

- Netflix recommendations: Have you ever wondered how Netflix knows exactly which movie you will like? ML analyzes your viewing patterns.

- GPS navigation: Smart maps that predict traffic and find the fastest routes.

- Spam detection: Your email automatically filters out unwanted messages.

- Virtual assistants: Siri, Alexa, and Google Assistant understand and respond to your commands.

In industry:

- Medicine: Diagnosing diseases from medical images.

- Finance: Detecting fraud in banking transactions.

- Agriculture: Optimizing harvests and predicting pests.

- Transportation: Autonomous vehicles that navigate the streets.

1. Data

Without data, there is no learning. Data is the "food" that allows algorithms to learn patterns and make decisions.

2. Algorithms

These are the mathematical "recipes" that process data to find patterns and make predictions.

3. Models

These are the result of the learning process: a simplified representation of reality that can make predictions about new data.
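To make the three pieces concrete, here is a minimal sketch with made-up numbers: the data is a handful of (hours studied, exam score) pairs, the algorithm is ordinary least squares for a straight line, and the model is the resulting slope and intercept. The names `Sample`, `LinearModel`, `fitLine`, and `predict` are chosen for this illustration only.

```typescript
// Data: one observation is an input x and an observed output y.
interface Sample {
  x: number;
  y: number;
}

// Model: a straight line, y = slope * x + intercept.
interface LinearModel {
  slope: number;
  intercept: number;
}

// Algorithm: ordinary least squares for a single input variable.
function fitLine(data: Sample[]): LinearModel {
  const n = data.length;
  if (n < 2) throw new Error("need at least two samples");
  const meanX = data.reduce((sum, d) => sum + d.x, 0) / n;
  const meanY = data.reduce((sum, d) => sum + d.y, 0) / n;
  let sxy = 0;
  let sxx = 0;
  for (const d of data) {
    sxy += (d.x - meanX) * (d.y - meanY);
    sxx += (d.x - meanX) * (d.x - meanX);
  }
  if (sxx === 0) throw new Error("all x values are identical");
  const slope = sxy / sxx;
  return { slope, intercept: meanY - slope * meanX };
}

// Using the model on data it has never seen.
function predict(model: LinearModel, x: number): number {
  return model.slope * x + model.intercept;
}

// Made-up data that lies exactly on y = 10x + 50.
const samples: Sample[] = [
  { x: 1, y: 60 },
  { x: 2, y: 70 },
  { x: 3, y: 80 },
  { x: 4, y: 90 },
];
const scoreModel = fitLine(samples); // slope 10, intercept 50
const predictedScore = predict(scoreModel, 5); // 100
```

Real datasets are noisy, so the fitted line will not pass through every point; the made-up data here is exact only to keep the arithmetic easy to check by hand.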

1. Growing demand

The job market is desperately seeking professionals with ML skills. It is one of the best-paid professions in the tech sector.

2. Accessibility

Tools such as Python, scikit-learn, and TensorFlow have democratized access to ML. You no longer need to be a math genius to get started.

3. Real impact

You can build solutions that genuinely improve people's lives, from more accurate medical diagnoses to systems that optimize energy consumption.

As we begin this journey, it is important to understand that Machine Learning is not magic. It is a powerful tool, but it also requires:

- Critical thinking: Data can be biased, and models can be unfair.

- Curiosity: Always asking "why does this work?" and "what could go wrong?"

- Ethics: Remembering that our algorithmic decisions affect real lives.

In the following posts we will explore:

- The different types of machine learning

- The tools you need to get started

- How Python became the favorite language for ML

- The importance of exploratory data analysis

- Preprocessing techniques

- And we will reflect on our first practical assignment

A question for you: Think about your day-to-day life. Which of your activities could benefit from Machine Learning? Which problems would you like to solve using data and algorithms?

Are you excited about this journey? We are only just getting started! In the next post we will explore the different types of machine learning and when to use each one.

Image Flow Editor

This is a submission for the KendoReact Free Components Challenge.

I am neither a designer nor an image editor. However, I find myself going to Google every now and then, searching for a background remover, light enhancement, etc. I go to Figma as well to add some effects, apply masks, or whatever.

As a casual image editor, I need something that works right away with the fewest clicks possible. I am not willing to invest my time in learning something I need a few times a month.

Figma is great. However, when any tool starts to get traction, the team behind it starts to seek growth and domination. Figma and Canva started as simple tools that did one or two things. Now, they come with a learning curve before you can achieve anything.

These days, to be honest, I find myself going to Excalidraw to draw my creatives for posts and whatnot. Why? I believe in the saying: "With great power comes great responsibility." Apply it to this field and it becomes: "With more options come more distractions." Take a simple task: remove the background and add a stroke to make the image stamp-like. You start exploring the never-ending plugin ecosystem, searching for one that does both. A task of three minutes max turns into a full day of trying different options and signing up for countless services to get an API key. Of course, days later, you still haven't edited your image and you have forgotten about the project altogether.

The Concept

I imagine myself as the main end user:

- I can upload multiple images.

- Add some tasks or editing flow as drag and drop.

- Hit compile or run.

- Get the result files (preview, download, download all).

It should be as simple as it was described.
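The concept above maps naturally onto a list of small functions applied in order to every uploaded file. Below is a rough sketch of that idea, not the app's actual code: `GrayImage` is a hypothetical stand-in for real image data (which in a browser would more likely come from a canvas), and `invert`, `brighten`, and `runFlow` are names invented for this example.

```typescript
// Hypothetical in-memory image: grayscale pixels from 0 (black) to 255 (white).
interface GrayImage {
  fileName: string;
  width: number;
  height: number;
  pixels: number[];
}

// One task in the flow: takes an image and returns a new, edited image.
type FlowStep = (image: GrayImage) => GrayImage;

const invert: FlowStep = (img) => ({
  ...img,
  pixels: img.pixels.map((p) => 255 - p),
});

// Step factory: brighten by a fixed amount, clamped to the valid range.
const brighten = (amount: number): FlowStep => (img) => ({
  ...img,
  pixels: img.pixels.map((p) => Math.max(0, Math.min(255, p + amount))),
});

// "Hit run": apply every step, in the order the user arranged them,
// to every uploaded image. The inputs are never mutated.
function runFlow(images: GrayImage[], steps: FlowStep[]): GrayImage[] {
  return images.map((img) => steps.reduce((current, step) => step(current), img));
}

const uploads: GrayImage[] = [
  { fileName: "a.png", width: 2, height: 1, pixels: [0, 200] },
  { fileName: "b.png", width: 1, height: 1, pixels: [100] },
];
const flowResults = runFlow(uploads, [brighten(100), invert]);
// flowResults[0].pixels is [155, 0]; flowResults[1].pixels is [55]
```

Because each step has the same signature, reordering tasks by drag and drop is just reordering an array, and adding a new kind of edit means writing one more `FlowStep`.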

Demo Video:

You can test the app from these two domains:

You can also find the repository for this project here.

The components used in this project are:

- kendo-react-common

- kendo-react-intl

- kendo-react-buttons

- kendo-react-inputs

- kendo-react-dropdowns

- kendo-react-dialogs

- kendo-react-notification

- kendo-react-indicators

- kendo-react-animation

- kendo-popup-common

- kendo-react-popup

- kendo-react-layout

- kendo-react-labels

- kendo-react-data-tools

- kendo-react-charts

I didn't have much time for the challenge. Even though I have ten years of experience with Kendo UI and Telerik, I mainly used the jQuery version for dashboard-related work. Still, five days is not enough time for me to come up with an idea, code it, and write about it while doing my regular work.

Since the trend this year is all about Vibe Coding, Coding Assistants, Agents, and AI Editors, why not go down this road and Vibe Code my idea for this challenge?

I had tried a couple of these Vibe Coding platforms before. However, the main problem I usually face is their limitations. If you let the agent code the way it knows how, it will give you working, almost perfect prototypes fast. However, when you ask it to use a certain library or framework, it struggles and only drives your blood pressure up.

So, I started with Google AI Studio Build. It had proved itself reliable when it comes to using libraries with React and Angular. However, this time it wasn't efficient. It kept showing errors that it couldn't fix. I even deleted the project and started from scratch. The Kendo MCP configuration didn't seem to work at first in this environment either.

So, I downloaded the code base and opened my Zed editor. I configured the Kendo MCP and started prompting.

The first iteration was easy. I prompted Zed to fix the issues, and it did so very quickly. The problem was that React was being imported in multiple places from different CDNs. I updated the AI Studio code base and it worked.

Later on, things started to get very nasty. Both Zed and AI Studio were needed for different tasks. I tried to stop using AI Studio, but the API calls from my local version kept blocking me, claiming that I had exceeded the limits or whatever.

In the end, I continued on AI Studio. I added the Kendo MCP configuration in its settings. Luckily for me, it started using it to access the latest documentation and understood my prompts with ease. It even fixed a licensing issue caused by using a premium feature of the dropdown component. The same happened with the button at a certain point. I wonder whether the MCP can pinpoint the paid features in free components on its own, without making a mistake and without me having to point them out. Of course, I didn't point to the component causing the issue. I only gave my feedback about the licensing strips showing in the dialog form.

I have to say, I have never dreaded adding a feature as much as I did in this project. AI Studio ruined my app twice while adding a simple button. Unfortunately, the GitHub integration isn't working correctly, so I had to download a copy every now and then.

Let's be honest. Kendo UI shines in dashboards. In my previous job, we decided to buy it because of how straightforward building dashboards was with the Data Grid, Charts, Editors and so on.

After the first prompt about the dashboard, all my fears were confirmed. It crashed and I had to track down the errors and fix them. The same error kept happening whenever I added a new component. AI Studio uses an ESM CDN for its package management, and the problem was in the versioning, so I had to check the right version for each component or package.

I was hoping to use the tiling layout and the data grid components. Unfortunately, even the little I was doing with them showed the licensing strips, so I removed them altogether.

Even though I prefer Cloudflare these days, I chose Vercel to host this project. However, to deploy it outside of AI Studio I had to make some changes.

The first thing I did was add a new option for users to enter their own Gemini API key. This way, anyone can use the app without me worrying about credits and so on. Of course, this will change if the app gets to a certain point.

Once again, I used only free components. However, the dialog for the API configuration showed the license strips! I was confused, so I prompted Claude in Zed to use the MCP and investigate the issue. Unfortunately, the investigation didn't lead to a good result, since Claude went and deleted the Kendo theme and did other unnecessary stuff. Then it went out of service because I exceeded its usage limit.

I switched the model to ChatGPT 5 mini through GitHub Copilot and prompted:

in the @ApiKeyConfig.tsx file there something that make Kendo add the license strips. Please use the kendo react mcp to investiage this. Don't do anything until you ask

This model did a better job with the Kendo MCP. It asked for a list of all premium components. Then it asked for free components with premium features. Then it compiled a plan for me on how to fix the issue. After executing the plan, the license strips were gone.

I started this project for fun. After spending almost five days working on it, I am planning to continue enhancing it to see how it turns out. I added a todo list to the README file of this project, but that's just the starting point. First, let's see how it performs in this challenge.

Data Analyses — Wizard

This is a submission for the KendoReact Free Components Challenge.

Data Analyses — Automate Wizard (KendoReact Challenge submission)

I built Data Analyses — Automate Wizard, a small React app that helps users import tabular files (CSV/XLSX), automatically analyzes the dataset, and generates polished, accessible charts and dashboard cards using KendoReact Free Components.

The app’s goal is to let non-technical users get immediate insights from an uploaded spreadsheet:

- auto-detects numeric / date columns,

- suggests the most useful charts and small dashboard cards (totals, averages, top categories),

- aggregates and prepares data for charts (bar, line, pie, donut, area),

- offers a Chart Wizard for manual column-to-chart mapping,

- accepts exported charts (PDF, PNG, SVG) and lets users pin them to the dashboard.

I focused on usability (one-click suggestions + preview), accessibility, and making the analysis pipeline safe (AI only receives a compact column summary / sample, not full PII).
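As a rough illustration of the column detection and the "compact column summary" idea, here is a sketch under stated assumptions: rows arrive as string-valued records (as a CSV parser would typically produce), dates are ISO-formatted, and the names `summarizeColumns`, `detectKind`, and `ColumnSummary` are hypothetical rather than taken from the repo.

```typescript
type ColumnKind = "numeric" | "date" | "text";
type Row = Record<string, string>;

// Compact, per-column description that could be sent instead of full rows.
interface ColumnSummary {
  name: string;
  kind: ColumnKind;
  distinctCount: number;
  samples: string[];
}

function isNumeric(value: string): boolean {
  return value.trim() !== "" && isFinite(Number(value));
}

// Assumption: dates look like YYYY-MM-DD, optionally followed by a time.
function isIsoDate(value: string): boolean {
  const v = value.trim();
  return /^\d{4}-\d{2}-\d{2}/.test(v) && !isNaN(Date.parse(v));
}

// A column is numeric (or a date) only if every non-empty cell parses as one.
function detectKind(values: string[]): ColumnKind {
  const present = values.filter((v) => v.trim() !== "");
  if (present.length === 0) return "text";
  if (present.every((v) => isNumeric(v))) return "numeric";
  if (present.every((v) => isIsoDate(v))) return "date";
  return "text";
}

function summarizeColumns(rows: Row[], sampleSize = 3): ColumnSummary[] {
  const first = rows[0];
  if (!first) return [];
  return Object.keys(first).map((name) => {
    const values = rows.map((r) => r[name] ?? "");
    const distinct = values.filter((v, i) => values.indexOf(v) === i);
    return {
      name,
      kind: detectKind(values),
      distinctCount: distinct.length,
      samples: distinct.slice(0, sampleSize),
    };
  });
}

const salesRows: Row[] = [
  { date: "2024-01-15", region: "North", amount: "120.5" },
  { date: "2024-02-03", region: "South", amount: "80" },
  { date: "2024-02-20", region: "North", amount: "42" },
];
const columnSummaries = summarizeColumns(salesRows);
// kinds, in column order: "date", "text", "numeric"
```

Note that a summary like this still includes a few sample values, so a column of names or emails would need redacting before anything leaves the browser.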

- Demo video (walkthrough): https://www.loom.com/share/1382adaea79e41e09406bc76caecb3a7?sid=6bc8ff72-ec35-4f9a-b8fa-a67471f236c4

- Source code (GitHub): https://github.com/MatheusDSantossi/data-analyses-automate

Screenshots and the Loom video are in the repo README. The video demonstrates uploading a CSV, the AI suggestions panel, auto-generated charts/cards, and the Chart Wizard flow.

- Upload CSV / XLSX files and preview sample rows.

- Automatic dataset analysis (sample-based) using a generative AI assistant (Gemini) that recommends charts and cards.

- Auto-aggregation helpers (sum/avg/count, time-series aggregation with month-year/year granularity).

- Interactive Chart Wizard integration so users can pick fields and build charts manually if desired.

- Chart gallery: Bar, Line, Area, Pie, Donut (all generated from the same pipeline).

- Upload/export support for images/PDFs (pin exported charts to dashboard).

- Skeletons + progressive UI while parsing/aggregating.

- Lightweight theme scoping so Kendo styling is applied only where needed.
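The auto-aggregation helpers mentioned in the list could look roughly like the following. This is a sketch, not the code in `src/utils/wizardData.ts`: the function names, the string-valued row shape, and the assumption that dates are ISO strings are all mine.

```typescript
type Aggregation = "sum" | "avg" | "count";
type DataRow = Record<string, string>;

// One point ready for a chart series: a category and its aggregated value.
interface Bucket {
  key: string;
  value: number;
}

// Assumes ISO dates: "2024-02-03" becomes "2024-02" (month-year) or "2024" (year).
function timeBucket(isoDate: string, granularity: "month" | "year"): string {
  return granularity === "year" ? isoDate.slice(0, 4) : isoDate.slice(0, 7);
}

function aggregate(
  rows: DataRow[],
  groupBy: (row: DataRow) => string,
  metric: string,
  agg: Aggregation
): Bucket[] {
  const groups: Record<string, number[]> = {};
  for (const row of rows) {
    const raw = row[metric];
    if (raw === undefined || raw.trim() === "") continue; // skip empty cells
    const value = Number(raw);
    if (isNaN(value)) continue; // skip non-numeric cells
    const key = groupBy(row);
    (groups[key] = groups[key] || []).push(value);
  }
  // Sorted keys keep time series in chronological order for ISO dates.
  return Object.keys(groups)
    .sort()
    .map((key) => {
      const values = groups[key] || [];
      const sum = values.reduce((total, v) => total + v, 0);
      const value = agg === "sum" ? sum : agg === "avg" ? sum / values.length : values.length;
      return { key, value };
    });
}

const orders: DataRow[] = [
  { date: "2024-01-15", region: "North", amount: "120.5" },
  { date: "2024-02-03", region: "South", amount: "80" },
  { date: "2024-02-20", region: "North", amount: "42" },
];
const monthlyTotals = aggregate(orders, (r) => timeBucket(r["date"] ?? "", "month"), "amount", "sum");
// [{ key: "2024-01", value: 120.5 }, { key: "2024-02", value: 122 }]
const regionAverages = aggregate(orders, (r) => r["region"] ?? "", "amount", "avg");
// [{ key: "North", value: 81.25 }, { key: "South", value: 80 }]
```

Passing the grouping as a function keeps one helper usable for both category charts (group by a column) and time series (group by a date bucket).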

I used multiple free KendoReact components to build the UI and charts. Key components used in the project:

- `Input`: file picker UI (from `@progress/kendo-react-inputs`)

- `Chart` and chart subcomponents (from `@progress/kendo-react-charts`):

  - `Chart` (root)

  - `ChartSeries` / `ChartSeriesItem`

  - `ChartCategoryAxis` / `ChartCategoryAxisItem`

  - `ChartValueAxis` / `ChartValueAxisItem`

  - `ChartTitle`

  - `ChartSeriesLabels`

  - `ChartTooltip` / `ChartNoDataOverlay`

- `ChartWizard` (from `@progress/kendo-react-chart-wizard`): interactive mapping wizard for users to create charts from table data

- `Skeleton` (from `@progress/kendo-react-indicators`): loading placeholders while files parse

- `Tooltip` (from `@progress/kendo-react-tooltip`): contextual help and quick actions

- (plus some Kendo “intl” helpers where needed for formatting)

Note: many Chart subcomponents are imported from the charts package (e.g., `ChartSeriesItem`, `ChartCategoryAxisItem`, etc.). Together they provide the full charting capabilities used across the app.

I used Google Generative AI (Gemini) via a small client wrapper to analyze a sample of the uploaded data (column names + a few sample values and summary stats). The prompt instructs the model to return JSON only with:

- recommended charts (type, groupBy, metric, aggregation, topN, granularity)

- recommended small dashboard cards (specs only: field, aggregation, label, format).

The frontend validates the AI response, then computes actual numbers locally (e.g., sums/averages/top categories) to avoid trusting the model for numeric calculations and to keep the source-of-truth on the client data.

This approach lets the AI do lightweight analysis and suggestions while the app remains in control of real computations (privacy and correctness).
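To illustrate the "validate first, compute locally" step, here is a minimal sketch of defensive parsing for the model's reply. It is not the repo's `aiAnalysis.ts`: the top-level `charts` key, the `ChartSpec` shape (a subset of the fields listed above), and the function names are assumptions for this example.

```typescript
// Assumed subset of the chart spec fields described in the post.
interface ChartSpec {
  type: "bar" | "line" | "area" | "pie" | "donut";
  groupBy: string;
  metric: string;
  aggregation: "sum" | "avg" | "count";
}

const CHART_TYPES = ["bar", "line", "area", "pie", "donut"];
const AGGREGATIONS = ["sum", "avg", "count"];

function isRecord(value: unknown): value is Record<string, unknown> {
  return typeof value === "object" && value !== null && !Array.isArray(value);
}

// Returns only the specs that are well formed AND refer to real columns.
// Anything malformed is dropped rather than trusted.
function parseChartSpecs(raw: string, knownColumns: string[]): ChartSpec[] {
  // Tolerate stray text around the JSON object (assumed top-level shape: { "charts": [...] }).
  const start = raw.indexOf("{");
  const end = raw.lastIndexOf("}");
  if (start < 0 || end < start) return [];

  let parsed: unknown;
  try {
    parsed = JSON.parse(raw.slice(start, end + 1));
  } catch {
    return [];
  }
  if (!isRecord(parsed)) return [];
  const charts = parsed["charts"];
  if (!Array.isArray(charts)) return [];

  const specs: ChartSpec[] = [];
  for (const item of charts) {
    if (!isRecord(item)) continue;
    const type = item["type"];
    const groupBy = item["groupBy"];
    const metric = item["metric"];
    const aggregation = item["aggregation"];
    if (typeof type !== "string" || CHART_TYPES.indexOf(type) < 0) continue;
    if (typeof aggregation !== "string" || AGGREGATIONS.indexOf(aggregation) < 0) continue;
    if (typeof groupBy !== "string" || knownColumns.indexOf(groupBy) < 0) continue;
    if (typeof metric !== "string" || knownColumns.indexOf(metric) < 0) continue;
    specs.push({
      type: type as ChartSpec["type"],
      groupBy,
      metric,
      aggregation: aggregation as ChartSpec["aggregation"],
    });
  }
  return specs;
}

const modelReply =
  'Here you go: {"charts":[' +
  '{"type":"bar","groupBy":"region","metric":"amount","aggregation":"sum"},' +
  '{"type":"radar","groupBy":"region","metric":"amount","aggregation":"sum"},' +
  '{"type":"line","groupBy":"date","metric":"profit","aggregation":"avg"}]}';
const acceptedSpecs = parseChartSpecs(modelReply, ["date", "region", "amount"]);
// Only the bar chart survives: "radar" is not a known type, "profit" is not a column.
```

The validated specs only say what to chart; the numbers themselves would still be computed from the uploaded rows on the client.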

(If you prefer, this submission is not entered for the Kendo AI Coding Assistant prize — I used Gemini instead. No Nuclia RAG integration is included.)

# clone

git clone https://github.com/MatheusDSantossi/data-analyses-automate.git

cd data-analyses-automate

# install

npm install

# start dev server

npm run dev

# open http://localhost:5173 (Vite default)

Environment

- Add your Gemini key to `.env`:

VITE_GEMINI_API_KEY=<your-gemini-key>

Notes

- The app uses `ExcelJS` to read xlsx files and a CSV parser helper for CSV. It also uses Kendo’s default theme; I included an optional scoped theme option so Kendo styles only apply inside the dashboard container.

- For the AI assistant I only send column summaries (no raw rows). If you plan to demo with real data containing PII, please exercise caution.

- Use of underlying technology: Chart/wizard powered by KendoReact Charts & ChartWizard; Kendo components used for inputs/UX; dataset parsing with ExcelJS; AI-assisted recommendations.

- Usability & User Experience: immediate suggestions, skeletons while parsing, Chart Wizard for manual adjustments, export previews (PDF/Image), responsive grid layout.

- Accessibility: charts have labels and legends, skeletons are presentational only, and UI controls use semantic elements (and `aria-*` where appropriate).

- Creativity: combining a generative model to suggest charts/cards + a Chart Wizard for manual exploration makes analysis quick while preserving user control.

- `src/pages/Dashboard.tsx`: main dashboard, AI integration, aggregation orchestration, generated-charts renderer

- `src/utils/wizardData.ts`: helpers to transform rows into wizard-ready `{ field, value }` arrays and aggregation helpers

- `src/utils/aiAnalysis.ts`: build prompt, call AI wrapper (`getResponseForGivenPrompt`) and safe JSON parsing

- `src/components/charts/*`: `BarChart`, `LineChart`, `DonutChart`, `PieChart`, `AreaChart` components (Kendo-powered)

- `src/components/Wizard.tsx`: ChartWizard integration

- GitHub: https://github.com/MatheusDSantossi/data-analyses-automate

- Demo video (walkthrough): https://www.loom.com/share/1382adaea79e41e09406bc76caecb3a7?sid=6bc8ff72-ec35-4f9a-b8fa-a67471f236c4

Augmented Intelligence (AI) Coding using Markdown Driven-Development

TL;DR: Deep research the feature, write the documentation first, go YOLO, work backwards... Then magic. ✩₊˚.⋆☾⋆⁺₊✧

In my last post, I outlined how I was using Readme-Driven Development. In this post, I will outline how I implemented a 50-page RFC over the course of a weekend.

My steps are:

Step 1: Design the feature documentation with an online thinking model

Step 2: Export a description-only "coding prompt"

Step 3: Paste to an Agent in YOLO mode (--dangerously-skip-permissions)

Step 4: Force the Agent to "Work Backwards"

Open a new chat with an LLM that can search the web or do "deep research". Discuss what the feature should achieve. Do not let the online LLM write code. Create the user documentation for the feature you will write (e.g., README.md or a blog page). I start with an open-ended question to research the feature. That will prime the model. Your exit criteria is that you like the documentation or promotional material enough to want to write the code.

To exit this step, have it create a "documentation artefact" in markdown (e.g. the README.md or blog post). Save that to disk so that you can point the coding agent at it.

If you don't want to pay for a subscription to an expensive model, you can install Dive AI Desktop and use pay-as-you-go models that are much better value. Here is a video on setting up Dive AI to do web research with Mistral:

Next, tell the online model to "create a description only coding prompt (do not write the code!)". Do not accept the first answer. The more effort you put into perfecting both the markdown feature documentation and the coding prompt, the better.

If the coding prompt is too long, then the artefact is too big! Start a fresh chat and create something smaller. This is Augmented Intelligence ticket grooming in action!

Please paste in the groomed coding prompt and the documentation, and let it run. I always use a git branch so that I can let the agent go flat out. Cursor background agents, Copilot agents, OpenHands are all getting better.

I only restrict git commit and git push. I ask it first to make a GitHub issue using the gh cli and tell it to make a branch and PR.

The models love to dive into code, break it all, get distracted, forget to update the documentation, hit compaction, and leave you with a mess. Do not let them be a caffeine-fuelled flying squirrel!

The primary tool I am using now prints out a Todos list. This is usually the opposite of the correct way to do things safely!

⏺ Update Todos

⎿ ☐ Remove all compatibility mode handling

☐ Make `{}` always compile as strict

☐ Update Test_X to expect failures for `{}`

☐ Add regression test Test_Y

☐ Add INFO log warning when `{}` is compiled

☐ Update README.md with Empty Schema Semantics section

☐ Update AGENTS.md with guidance

That list is in a perilous order. Logically, it is this:

- Delete logic (broken code, invalid tests)

- Change logic (more broken code, more invalid tests)

- Change one test (which is mostly to what you are doing)

- Add one test (finally! the objective!)

- Change the README.md and AGENTS.md

If the agent context compacts, things go sideways, or you get distracted, you will end up with a bag of broken code.

I set it to "plan mode", or else immediately interrupt it, to reorder the Todo list:

- Change the README.md and AGENTS.md first

- Add one test (insist the test is not run yet!)

- Change one test (insist the test is not run yet!)

- Add/Change logic

- Now run the tests

- Delete things last
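The reordering rule above can be sketched in a few lines of Python. This is only an illustration of the ordering, not something the agent or its Todo tool provides: the phase names and the `reorder_todos` helper are hypothetical, and the phase assigned to each item is my own reading of the list.

```python
# Hypothetical phase names for illustration; earlier entries run first.
PHASE_ORDER = ["docs", "add_test", "change_test", "logic", "run_tests", "delete"]


def reorder_todos(todos):
    """Sort (phase, description) pairs into documentation-first, delete-last order.

    sorted() is stable, so items in the same phase keep their relative order.
    """
    rank = {phase: index for index, phase in enumerate(PHASE_ORDER)}
    return sorted(todos, key=lambda todo: rank[todo[0]])


# The agent's original plan, tagged by hand with an assumed phase per item.
agent_plan = [
    ("delete", "Remove all compatibility mode handling"),
    ("logic", "Make `{}` always compile as strict"),
    ("change_test", "Update Test_X to expect failures for `{}`"),
    ("add_test", "Add regression test Test_Y"),
    ("logic", "Add INFO log warning when `{}` is compiled"),
    ("docs", "Update README.md with Empty Schema Semantics section"),
    ("docs", "Update AGENTS.md with guidance"),
]

for phase, description in reorder_todos(agent_plan):
    print(f"{phase:12} {description}")
```

The point of the sketch is only that documentation and tests move to the front and deletions move to the end, so a compaction or interruption part-way through leaves the branch in a recoverable state.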

I am not actually a big fan of the built-in Todos list of the two big AI labs. The models really struggle with any changes to the plan. The Kimi K2 Turbo seems more capable of pivoting. I have a few tricks for that, but I will save them for another post.

This past weekend I decided to write an RFC 8927 JSON Type Definition validator based on the experimental JDK java.util.json parser. The PDF of the spec is 51 pages. There is a ~4000-line compatibility test suite. A jqwik property test generates 1000 random JTDs, which uncovered several bugs. In total, 509 unit tests were written.

Using a single model family is a Bad Idea (tm). For online research I alternate between full-fat ChatGPT Desktop, Claude Desktop and Dive Desktop to use each of GPT5-High, Opus 4.1 or Kimi K2 Turbo in turn.

For Agents I have used all the models and many services. Microsoft kindly allows me to use full-fat Copilot with Agents for open-source projects for free ❤️ I have a Cursor subscription to use their background agents. I use Codex, Code, and Gemini. The model seems less important than writing the documentation first and writing tight prompts. I am currently using an open-weights model at $3 per million tokens for the heavy lifting on a pay-as-you-go basis, and I cross-check its plans with GPT5 and Sonnet.

Rewrite History: Your Omniscient View of the Past

This is a submission for the KendoReact Free Components Challenge.

Hello Dev Community! 👋

Teammate: @sri_charan_5b9c2e5e77b8d4

We are thrilled to share Rewrite History: Your Omniscient View of the Past, an interactive game where history isn’t just read—it’s lived, shaped, and rewritten. Built using Kendo React components, Node.js, and the Nuclia RAG model, the game blends learning, storytelling, and strategy into a fully dynamic experience.

🕹️ Game Concept

• Choose an era: Ancient Rome, Renaissance, 20th century, and more

• Pick a historical character to play

• Make decisions that affect stats like influence, wealth, relationships, and health

• Every journey is dynamically generated and can follow history or diverge into alternate outcomes

• At the end, your story is compiled into a personalized book

Example choices generated by the game:

• “What if Napoleon chose peace instead of war?”

• “Could a scientist share a discovery earlier and change history?”

📖 Key Features

Dynamic Timeline Representation

Visualizes the main historical timeline alongside branches created by your choices, so you can track both actual history and your alternate paths.

Summary Book

Automatically collects all events of a particular timeline. You don’t have to dig through pages—you can read your journey as a narrative or explore others’ stories.

Nuclia RAG Model Integration

• Historical events and character data are uploaded to Nuclia RAG.

• When generating the next event, the model fetches contextual historical data.

• Combined with your character’s current stats and attributes, this data is sent to an LLM that outputs three dynamic choices, making the gameplay unpredictable and engaging.

Youtube video link: https://youtu.be/e_5GR61W2pI?si=5VxDBaPLJ9BDqtMu

📸 Screenshots

Homepage

Era Selection

Character Selection

Game Dashboard / Stats Overview

Timeline & Choices

Story Summary / Alternate History

Other Pages

• Player Books Page

KendoReact components used:

• Input (from @progress/kendo-react-inputs)

• TextArea (from @progress/kendo-react-inputs)

• Rating (from @progress/kendo-react-inputs)

• AppBar (from @progress/kendo-react-layout)

• AppBarSection (from @progress/kendo-react-layout)

• Avatar (from @progress/kendo-react-layout)

• Button (from @progress/kendo-react-buttons)

• Card (from @progress/kendo-react-layout)

• CardHeader (from @progress/kendo-react-layout)

• CardBody (from @progress/kendo-react-layout)

• ProgressBar (from @progress/kendo-react-progressbars)

• Fade (from @progress/kendo-react-animation)

• Badge (from @progress/kendo-react-indicators)

How the Nuclia RAG Model Is Used

Our interactive historical storytelling game is powered end-to-end by Nuclia’s Retrieval-Augmented Generation (RAG) model. Instead of writing static branching storylines, we give the model structured historical data and let it retrieve and generate content on the fly.

Here’s our process:

Building the Knowledge Base

We upload detailed, real-world historical data about each character:

– Chronological events

– Age, stats, and personality traits

– Political, social, and cultural contexts

This information becomes our searchable knowledge base inside Nuclia.

Retrieval + Generation as a Single Step

When the player reaches a new point in the story, we send the player’s current state (age, stats, personality, current event) to Nuclia’s RAG endpoint.

The RAG model automatically:

• Retrieves the most relevant context from our uploaded knowledge.

• Generates historically grounded yet dynamic choices.

The Nuclia RAG model returns three plausible next events or actions the player can take. These aren’t pre-coded. They’re dynamically generated based on:

• The player’s previous decisions

• The character’s evolving stats and personality

• Authentic historical information from the knowledge base
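The flow described above (player state in, retrieved context added, three choices out) can be sketched as follows. This is a minimal illustration in Python and not the game's actual Node.js code: `PlayerState`, `build_choice_prompt`, and `next_choices` are hypothetical names, and the `retrieve` and `generate` callables stand in for the Nuclia RAG call, whose real API is not shown here.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class PlayerState:
    """Hypothetical snapshot of the player sent with each request."""
    character: str
    age: int
    current_event: str
    stats: Dict[str, int]
    decisions: List[str] = field(default_factory=list)


def build_choice_prompt(state: PlayerState, passages: List[str], n_choices: int = 3) -> str:
    """Combine the player state and retrieved history into one prompt string."""
    stats = ", ".join(f"{name}={value}" for name, value in sorted(state.stats.items()))
    history = "\n".join(f"- {passage}" for passage in passages) or "- (no context retrieved)"
    past = "; ".join(state.decisions) or "none yet"
    return (
        f"Character: {state.character}, age {state.age}\n"
        f"Stats: {stats}\n"
        f"Previous decisions: {past}\n"
        f"Current event: {state.current_event}\n"
        f"Historical context:\n{history}\n"
        f"Offer exactly {n_choices} plausible next choices, one per line."
    )


def next_choices(
    state: PlayerState,
    retrieve: Callable[[str], List[str]],   # stand-in for the retrieval step
    generate: Callable[[str], str],         # stand-in for the generation step
    n_choices: int = 3,
) -> List[str]:
    """Retrieve context, generate text, and keep at most n_choices non-empty lines."""
    passages = retrieve(state.current_event)
    raw = generate(build_choice_prompt(state, passages, n_choices))
    choices = [line.lstrip("-• ").strip() for line in raw.splitlines() if line.strip()]
    return choices[:n_choices]
```

Passing the retrieval and generation steps in as functions keeps the sketch testable with stubs; in the real game both steps happen inside a single call to the RAG endpoint.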

Replayability and Scalability

Because the RAG model merges retrieval with generation, the game can grow to cover new eras and characters by uploading more data, without anyone writing new storylines by hand. It also ensures that each playthrough is different while still staying faithful to history.

⚙️ Tech Stack

Frontend:

• React + Kendo UI components (menus, dashboards, timeline visualizations)

• Vite, Tailwind, and custom CSS for styling

Backend:

• Node.js + Express

• Nuclia RAG model for AI-generated storylines

• JSON-based storage for player books

Features:

• Dynamic, AI-generated branching storylines

• Personalized books for each journey

• Replayable history experiences

💡 Why It’s Different

• Every playthrough is unique thanks to AI-powered story generation

• History becomes interactive and immersive, not static

• Players can learn, explore, and create their own alternate histories

• Fully polished and responsive UI thanks to Kendo React components

🔗 Links

• Live Demo: https://kendohack.onrender.com/

• Source Code: https://github.com/SriCharan-616/kendohack

🚀 Next Steps

• Add more eras and historical characters

• Enhance AI storytelling for richer, more diverse narratives

• Mobile-first interface and accessibility improvements

• Social features for sharing and exploring player-created histories

History is no longer just facts in a book—it’s something you can live, shape, and share.

Monolithic Architecture in Contemporary Startups

As technology advances, building software systems has become increasingly accessible and fast. At the same time, the structures needed to keep up with the market's ever-growing demands and expectations have become more complex. This raises the question of how that complexity plays out in smaller systems, especially in the context of startups.

Hence the question: should a startup adopt a more sophisticated architecture merely to keep up with the technology standards set by the industry, or is a simple monolithic structure the more efficient path in the early stage of a business? According to Fowler (2015), monolithic architecture remains a viable alternative for emerging projects, since it offers simplicity, fast implementation, and lower initial maintenance costs.

The word monolith comes from the Greek words monos (single) and lithos (stone), and it is associated with something solid and concrete, indivisible, and formed from a single block. The term was adopted in software development to refer to monolithic architecture, a traditional model in which all of a system's functions are centralized in a single structure (WIKIPÉDIA, 2025a). In practice, this means that the application's different modules are interconnected and compiled into a single executable, running as one shared unit.

This type of architecture was not designed and developed by any one person; it emerged gradually and established itself as the default way of building systems. For a long time, the prevailing discourse was about migrating monolithic systems to microservices, stressing the adoption of more current and efficient architectural models. That narrative changed, however, once it became clear that the choice of architecture should be aligned with the company's business model and context, and not merely with whichever architectures happen to be in fashion (WIKIPÉDIA, 2025b).

Developing a system with a monolithic architecture is considered faster because there is no need to build complex communication between components: they all live in the same codebase. Another notable characteristic is how easy the infrastructure is to monitor and maintain, since with only a single application to manage, operations teams do not have to deal with the complexity of multiple services. In general, costs for servers, inter-system communication, and monitoring are also lower.

Given these advantages, this architectural model is best suited to smaller, less complex systems, which makes it a great choice for early-stage companies such as startups. In these cases, the simplicity of the monolith provides agility, reduces costs, and lets the team focus on evolving the product without having to worry, from the very beginning, about more sophisticated and complex structures.

Despite being easy to implement, in the long run the monolithic model has disadvantages compared with other software architectures. For example, microservices architecture, according to research by Amazon AWS (2025a), “provides a robust programming foundation for your team and supports their ability to add more features flexibly”. This shows that for fast-growing startups (the so-called “unicorns”), splitting up the codebase becomes inevitable if the company is to scale efficiently.

Even so, “breaking up the monolith” is no simple task. Case studies such as Netflix's show that migrating to microservices requires strategic planning, heavy investment, and deep cultural change within the organization (AMAZON AWS, 2025b). Initially, the company faced serious outages and scalability limits in its monolithic architecture, which drove the decision to restructure. The transition was not immediate, however: it was a gradual process that involved decomposing services, reconfiguring continuous delivery pipelines, and adopting automation practices at scale. Beyond resolving technical bottlenecks, this move allowed Netflix to serve millions of simultaneous users around the world with greater stability and agility.

Similarly, other large companies such as Amazon, Uber, and Spotify have also documented challenges during the modernization of their architectures, revealing that adopting microservices is not only a technological decision but also an organizational one (LIMMA, 2019; DREAMFACTORY, 2025). This reinforces the idea that, although complex, the change tends to be inevitable for businesses seeking scalability in highly competitive digital environments.

Monolithic architecture is a strategic choice for early-stage startups, since it provides simplicity, fast development, and lower maintenance costs. This approach makes it possible to validate the product and adjust to the market without complex structures, so the team's resources can go toward growing the business.

However, as the user base expands and features diversify, the monolith tends to become an obstacle to scalability, making maintenance and the adoption of new technologies harder. Cases such as Netflix, Amazon, and Uber show that the transition to microservices, although challenging, is often inevitable in high-growth scenarios. The monolithic model should therefore be understood not as outdated but as a pragmatic starting point, one that needs to be accompanied by a plan for future architectural evolution.

- AMAZON AWS. The difference between monolithic and microservices architecture. Available at: https://aws.amazon.com/pt/compare/the-difference-between-monolithic-and-microservices-architecture. Accessed: 28 Sep. 2025a.

- AMAZON AWS. Netflix case study. Available at: https://aws.amazon.com/pt/solutions/case-studies/netflix-case-study. Accessed: 28 Sep. 2025b.

- DREAMFACTORY. Microservices examples. Available at: https://blog.dreamfactory.com/microservices-examples. Accessed: 28 Sep. 2025.

- FOWLER, Martin. Patterns of enterprise application architecture. Boston: Addison-Wesley, 2015.

- LIMMA, Math. Microsserviços: um estudo de caso Amazon e Netflix. Medium, 2019. Available at: https://mathlimma.medium.com/microsserviços-um-estudo-de-caso-amazon-e-netflix-3582648540a0. Accessed: 28 Sep. 2025.

- WIKIPÉDIA. Arquitetura monolítica. Available at: https://pt.wikipedia.org/wiki/Arquitetura_monol%C3%ADtica. Accessed: 28 Sep. 2025a.

- WIKIPÉDIA. Sistema operacional monolítico. Available at: https://pt.wikipedia.org/wiki/Sistema_operacional_monol%C3%ADtico. Accessed: 28 Sep. 2025b.

Generative AI in 2025: How to Survive and Thrive in the New Technological Era

Generative artificial intelligence (generative AI) is no longer a science-fiction concept.

Today it writes text, creates illustrations, composes music, and even writes code in seconds. What once looked like magic is now in the office, the university, and even on your phone.

In this article I'll explain what it is, how it works, what opportunities it brings, and which risks we need to consider in order to use it responsibly.

💡 This article is meant both for people who program and for those who coordinate, design, or are simply curious about technology.

- What is generative AI?

It is a branch of AI that does not just analyze data but generates new content.

All it takes is a written instruction (a prompt) to get an article, an image, or a block of code.

👉 In 2025 we are no longer talking about an experiment, but about a tool for work and creativity across many industries.

- How it works: the magic behind the content

Models are trained on millions of examples and learn patterns. Thanks to a mechanism called “attention”, the system picks out the most relevant information to give a coherent answer.

A simple example: if you write “make me a to-do list for organizing an event”, the model does not copy from the internet; it predicts the most likely words and creates a new plan.
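For readers who program, the "attention" idea can be sketched with a toy version of scaled dot-product attention in plain Python. This is a simplified illustration under my own assumptions (tiny hand-written vectors, a single query, no learned weights), not how any particular production model is implemented.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(score - highest) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def attention(query, keys, values):
    """Weight each value by how well its key matches the query.

    Returns (weights, output), where output is the weighted average of values.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    width = len(values[0])
    output = [sum(w * value[i] for w, value in zip(weights, values)) for i in range(width)]
    return weights, output


# Toy example: the first key points the same way as the query,
# so the first value receives the largest weight.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [-10.0, 0.0]]
weights, output = attention(query, keys, values)
print(weights, output)
```

The weights are what "choosing the most relevant information" means in practice: every input contributes, but the ones that match the query contribute most.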

- Modalities and multimodality

Until recently we had specialized models (text → text, text → image). The current trend is multimodal models: they understand and combine text, images, audio, and video in the same interaction.

🎬 Imagine describing an idea in words and getting a short video back.

- Limitations and risks

As incredible as it sounds, generative AI has problems:

Hallucinations: it can make up facts that sound true.

Limited knowledge: it does not know what happened after its training cutoff date.

Bias: it repeats prejudices present in the data.

Environmental costs: training models consumes a lot of energy.

Misuse: from fake news to visual fraud.

💡 Tip: use it as a tool, not as your only source of truth.

- The human role in the age of AI

AI is not here to replace us, but to demand that we strengthen what makes us unique: creativity, critical thinking, ethics, and empathy.

The professionals who thrive will be those who learn to work with AI, not against it.

- Practical applications

Some current examples:

🎓 Education: assistants that summarize readings or create personalized exercises.

💼 Business: drafts of contracts, reports, or marketing ideas.

🎨 Art and design: generation of images, music, and videos.

👩‍💻 Technology: help with programming and rapid prototyping.

- Autonomous agents: the next step

Beyond “answering questions”, autonomous agents are emerging: programs that chain tasks together, interact with applications, and make limited decisions without constant supervision.

Example: an assistant that receives an email, analyzes the request, searches the internet for information, and generates an automatic reply.

- AI in organizations

The real change happens when a company integrates AI into its processes and culture, not just into individual tasks. This requires:

Leadership with vision.

Ongoing training.

Clear ethical policies.

Spaces for experimentation.

- The art of asking: prompt engineering

The result depends on the instruction. Learning to give context, examples, and clear goals is essential.

Example: instead of writing “write a report”, try:

“Write a one-page report, with bullet points and a closing summary for non-technical executives”.

- Looking ahead

The debate is open: will it unleash our creative potential or create new inequalities?

The only certainty is that generative AI is already part of our digital lives and will keep transforming the way we work and relate to one another.

Conclusion

Generative AI is not a fad: it is a revolution in progress. Our challenge is not to fear it, but to learn to use it with judgment, ethics, and creativity.

🔑 Adapting is mandatory; thriving is the great opportunity.

How I Built a Web Vulnerability Scanner - OpenEye

Creating secure web applications is no easy feat. Vulnerabilities like SQL injection, XSS, or CSRF are still among the most common attack vectors, yet not every developer has the time or the skills to run deep security scans.

So I decided to solve this problem by building OpenEye, a modern, cloud-hosted, and user-friendly web vulnerability scanner. It uses OWASP ZAP under the hood but wraps it in a clean Django-based interface, making vulnerability scanning accessible to both non-technical users and professionals who just want clear, concise output.

This blog covers the concept, architecture, implementation, security considerations, and deployment of the project.

The idea was simple. What if anyone, not just security experts, could run a reliable vulnerability scan against their own websites and instantly see a structured report highlighting risks by severity?

With OpenEye, users log in, enter a target URL, and initiate a scan. The system then spins up a dedicated ZAP container, performs both active and passive scanning, and outputs results grouped by severity levels — critical, high, medium, and low.

Users can also revisit past scans through their personal history panel, ensuring that important findings aren't lost in a sea of logs.

The frontend is built with Tailwind CSS + JavaScript and Django templates. It's simple and intuitive enough that even a third-grader wouldn't get lost.

The goal was to reduce friction so whether you're a developer or someone without a technical background, you can quickly run a scan and make sense of the findings.

Initially, I considered building my own scanning engine, but that would be reinventing the wheel. OWASP ZAP is an industry-standard DAST (Dynamic Application Security Testing) tool, and it already:

- Detects SQLi, XSS, CSRF, authentication/session flaws, and misconfigurations

- Provides a JSON API for integration

- Has a robust active and passive scanning mechanism

By embedding ZAP inside Docker containers, each scan runs in isolation, preventing cross-contamination and ensuring resource efficiency.

Here's how the system fits together:

The app has a frontend and backend built with Django, a PostgreSQL database hosted on Supabase to store scan history, authentication with AWS Cognito, and the core functionality, OWASP ZAP, running in Docker containers. After testing locally, I hosted it on an AWS EC2 instance so users can easily access the app.

I've said a lot, so take a deep breath. Now let's take it slowly and walk through the implementation step by step.

Django Project Setup

I'll assume you already know how to set up a Django project and run it. If not, no worries at all: a quick Google search will turn up a ton of resources. Using Django isn't compulsory; use any framework that works for you. I chose it because I wanted to learn a little more Django.

If you're working entirely locally, you can just spin up a local PostgreSQL database. Otherwise, use a cloud-hosted database. Supabase was my choice because it's free and easy to use. Create the necessary tables and fields to store your scan information. What you store is entirely up to you.

OWASP ZAP Integration

One of the best parts about OWASP ZAP is that it exposes a REST API out of the box. That means instead of manually interacting with the ZAP desktop client, you can programmatically control scans from your own application. This was perfect for me because I wanted OpenEye to feel like a standalone platform.

At a high level, here's what I needed to do:

- Trigger a spider scan – to crawl the target application and discover URLs/endpoints

- Run an active scan – to test those discovered endpoints for vulnerabilities

- Fetch alerts – to retrieve all the issues ZAP found so I could parse, rank, and display them in OpenEye's dashboard

ZAP's REST API makes these tasks surprisingly straightforward. For example, here's a simplified version of the wrapper functions I built:

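from typing import Any, Dict

# Both functions are methods of my ZAP client class;
# self._make_request() is the HTTP helper described below.

def start_spider_scan(self, target_url: str) -> str:
    """Start a spider (crawl) of target_url and return ZAP's scan ID."""
    return self._make_request(
        "/JSON/spider/action/scan/",
        {'url': target_url}
    ).get('scan', '')

def get_alerts(self, target_url: str) -> Dict[str, Any]:
    """Return the alerts ZAP has recorded for target_url."""
    return self._make_request(
        "/JSON/core/view/alerts/",
        {'baseurl': target_url}
    )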

All I'm really doing here is sending HTTP requests to ZAP's REST API endpoints:

- `/JSON/spider/action/scan/` → tells ZAP to start crawling a target

- `/JSON/core/view/alerts/` → retrieves all alerts (vulnerabilities, misconfigurations, etc.) for that target

The _make_request() helper I wrote under the hood is just an HTTP client method that talks to ZAP running inside its Docker container. So instead of re-inventing the wheel and writing my own vulnerability scanner from scratch, I leverage ZAP's proven scanning logic but wrap it in my own backend API layer.
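The post doesn't show `_make_request()` itself, so here is one way such a helper might look, using only the standard library. Treat it as a sketch under assumptions: the `ZapClient` class name, the `_build_url` method, the default base URL, and the optional `api_key` handling (sent as an `apikey` query parameter) are mine, not taken from OpenEye's code.

```python
import json
from typing import Any, Dict, Optional
from urllib.parse import urlencode
from urllib.request import urlopen


class ZapClient:
    """Hypothetical thin wrapper around ZAP's JSON API."""

    def __init__(self, base_url: str = "http://localhost:8080", api_key: Optional[str] = None):
        self.base_url = base_url.rstrip("/")
        self.api_key = api_key  # assumption: only needed if ZAP is configured with a key

    def _build_url(self, path: str, params: Optional[Dict[str, str]] = None) -> str:
        """Join base URL, endpoint path, and URL-encoded query parameters."""
        query = dict(params or {})
        if self.api_key:
            query["apikey"] = self.api_key
        url = f"{self.base_url}{path}"
        return f"{url}?{urlencode(query)}" if query else url

    def _make_request(self, path: str, params: Optional[Dict[str, str]] = None,
                      timeout: float = 30.0) -> Dict[str, Any]:
        """Send a GET request to ZAP and decode the JSON response body."""
        with urlopen(self._build_url(path, params), timeout=timeout) as response:
            return json.loads(response.read().decode("utf-8"))

    def start_spider_scan(self, target_url: str) -> str:
        """Same wrapper as in the post, built on the helper above."""
        return self._make_request("/JSON/spider/action/scan/", {"url": target_url}).get("scan", "")
```

Keeping URL construction in its own method means the encoding logic can be checked without a running ZAP instance.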

This approach gave me two big advantages:

- Abstraction & Control – My backend only needs to call simple Python functions like `start_spider_scan()` or `get_alerts()`. I don't have to expose raw ZAP API calls directly to the frontend.

- Custom Processing – Once I had the JSON responses, I could parse them, group issues by severity, and feed them into my own database and dashboard instead of relying on ZAP's default reporting.
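As an illustration of the custom-processing step, grouping alerts by severity might look like the sketch below. It assumes each alert is a dictionary with a `risk` field holding values such as "High", "Medium", "Low", or "Informational"; the `group_alerts_by_severity` function and the fallback for unlabelled alerts are hypothetical, and OpenEye's own severity labels may differ.

```python
from collections import defaultdict
from typing import Any, Dict, List

# Assumed display order, most severe first.
SEVERITY_ORDER = ["High", "Medium", "Low", "Informational"]


def group_alerts_by_severity(alerts: List[Dict[str, Any]]) -> Dict[str, List[Dict[str, Any]]]:
    """Bucket alerts by their 'risk' field, most severe bucket first.

    Alerts without a 'risk' field are treated as Informational; any level
    not listed in SEVERITY_ORDER is kept and placed after the known ones.
    """
    grouped: Dict[str, List[Dict[str, Any]]] = defaultdict(list)
    for alert in alerts:
        grouped[alert.get("risk", "Informational")].append(alert)

    ordered = {level: grouped[level] for level in SEVERITY_ORDER if level in grouped}
    for level, items in grouped.items():
        if level not in ordered:
            ordered[level] = items
    return ordered


sample = [
    {"alert": "Missing security header", "risk": "Low"},
    {"alert": "SQL Injection", "risk": "High"},
    {"alert": "Cookie without HttpOnly flag", "risk": "Low"},
]
for level, items in group_alerts_by_severity(sample).items():
    print(level, [item["alert"] for item in items])
```

A structure like this maps directly onto a dashboard with one section per severity level.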

So when a user runs a scan in OpenEye, they're really triggering these wrappers in my backend, which in turn communicate with ZAP's REST API inside Docker. Also, by default, ZAP listens on port 8080 inside the container.

For example, I can start ZAP in Docker like this:

docker run -u zap -p 8080:8080 -i ghcr.io/zaproxy/zaproxy:stable zap.sh -daemon -host 0.0.0.0 -port 8080

Where:

- `-daemon` runs ZAP headlessly (no GUI)

- `-host 0.0.0.0` makes it listen on all interfaces

- `-port 8080` exposes the API at http://localhost:8080

- `-p 8080:8080` maps the container port to the host, so my backend can reach it

Once ZAP is running, the API is always accessible at endpoints like:

http://localhost:8080/JSON/spider/action/scan/

http://localhost:8080/JSON/core/view/alerts/
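Putting the three steps from earlier together (spider, active scan, fetch alerts), the orchestration might look roughly like this. It is a sketch, not OpenEye's code: the `run_full_scan` and `wait_until_done` functions are hypothetical, the status and active-scan endpoints (`/JSON/spider/view/status/`, `/JSON/ascan/action/scan/`, `/JSON/ascan/view/status/`) are my assumption about the ZAP API since the post only shows the spider and alerts endpoints, and `request` is any callable that behaves like `_make_request(path, params)`.

```python
import time
from typing import Any, Callable, Dict, List

# Any function shaped like _make_request(path, params) -> decoded JSON.
Request = Callable[[str, Dict[str, str]], Dict[str, Any]]


def wait_until_done(request: Request, status_path: str, scan_id: str,
                    poll_seconds: float = 2.0, max_polls: int = 300) -> None:
    """Poll a status endpoint until it reports 100 percent, or give up."""
    for _ in range(max_polls):
        status = int(request(status_path, {"scanId": scan_id}).get("status", 0))
        if status >= 100:
            return
        time.sleep(poll_seconds)
    raise TimeoutError(f"scan {scan_id} did not finish after {max_polls} polls")


def run_full_scan(request: Request, target_url: str,
                  poll_seconds: float = 2.0) -> List[Dict[str, Any]]:
    """Crawl the target, actively scan it, then return the recorded alerts."""
    spider_id = request("/JSON/spider/action/scan/", {"url": target_url}).get("scan", "")
    wait_until_done(request, "/JSON/spider/view/status/", spider_id, poll_seconds)

    scan_id = request("/JSON/ascan/action/scan/", {"url": target_url}).get("scan", "")
    wait_until_done(request, "/JSON/ascan/view/status/", scan_id, poll_seconds)

    return request("/JSON/core/view/alerts/", {"baseurl": target_url}).get("alerts", [])
```

Because the HTTP call is injected, the same function can run against a real ZAP instance or against a stub in tests. In a web app this loop would normally run in a background task so the request that starts the scan does not block until it finishes.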

Managing authentication securely is one of those things that looks simple on the surface ("just add login/signup"), but in reality it's full of pitfalls: password storage, token lifetimes, OAuth2 flows, etc. And I had a bit of a headache with setting the auth callback (side-eye to AWS for this).

Either way, Cognito gave me:

- Secure defaults — password policies, account recovery, MFA

- OAuth2.0 compliance — standard flows (authorization code, implicit, etc.)

- Scalability — I don't need to worry about user pools or scaling login endpoints

Here's what happens when a user logs in to OpenEye:

1. Redirect to Cognito

When the user clicks "Login," they're redirected to my Cognito hosted login page. Cognito provides a default UI, which saved me time.

2. Authorization Code Returned

After the user enters their credentials, Cognito redirects them back to my Django app with an authorization code in the URL query string.

3. Backend Exchanges Code for Tokens

My Django app then takes that code and makes a server-to-server POST request to Cognito's /oauth2/token endpoint. This returns:

- An ID token: basically a JWT containing user identity claims like email, sub, etc.

- An Access Token and refresh token

4. User Session Established

Django decodes the ID token, extracts the user info, and establishes a session. From the app's perspective, the user is now authenticated.
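Steps 3 and 4 can be sketched as two small helpers: one that prepares the POST to the `/oauth2/token` endpoint and one that reads the claims out of the returned ID token. This is an illustration under assumptions, not OpenEye's code: the function names, the example domain, and the parameter values are hypothetical, and `decode_jwt_claims` deliberately skips signature verification, which a real app must perform (for example against the user pool's published keys) before trusting the claims.

```python
import base64
import json
from typing import Any, Dict, Optional, Tuple
from urllib.parse import urlencode


def build_token_request(domain: str, client_id: str, code: str, redirect_uri: str,
                        client_secret: Optional[str] = None) -> Tuple[str, bytes, Dict[str, str]]:
    """Return (url, body, headers) for the authorization-code exchange."""
    url = f"https://{domain}/oauth2/token"
    body = urlencode({
        "grant_type": "authorization_code",
        "client_id": client_id,
        "code": code,
        "redirect_uri": redirect_uri,  # must match the callback registered for the app
    }).encode("ascii")
    headers = {"Content-Type": "application/x-www-form-urlencoded"}
    if client_secret:
        # App clients with a secret authenticate using HTTP Basic auth.
        basic = base64.b64encode(f"{client_id}:{client_secret}".encode("utf-8")).decode("ascii")
        headers["Authorization"] = f"Basic {basic}"
    return url, body, headers


def decode_jwt_claims(token: str) -> Dict[str, Any]:
    """Decode the payload of a JWT WITHOUT verifying its signature."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # restore the stripped base64 padding
    return json.loads(base64.urlsafe_b64decode(payload))
```

The tuple returned by `build_token_request` can be passed to whichever HTTP client the backend already uses; separating request construction from sending keeps the exchange easy to test.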

One thing Cognito enforces for good reasons is that OAuth2 redirects must happen over HTTPS (except for localhost during development). This meant that when I hosted my app on an EC2 instance, I couldn't just serve HTTP to Cognito; I had to set up SSL.

First, after setting the app up on an AWS EC2 instance, I created a free subdomain for it using FreeDNS (you could check them out), then ran this command:

sudo certbot --nginx -d openeye.chickenkiller.com

Certbot automatically provisioned and installed free SSL certificates. Nginx handled HTTPS termination and forwarded requests to Django running on Gunicorn.

Then finally, the entire OAuth2 flow worked securely end-to-end...

Relax and keep reading; I'll elaborate more on this.

These steps are not in strict chronological order: I had set up the app on the EC2 instance before creating a subdomain and registering it with SSL, which involved a lot of back and forth. To make it easier to follow, I covered SSL alongside the auth setup above, so please don't get confused.

Once I had the core pieces (ZAP API, Django backend, Cognito authentication), I needed a place to run everything in the cloud. For this, I chose an AWS EC2 t2.micro instance (Ubuntu 22.04): small, cheap, and well within the AWS Free Tier.

The very first thing I did after launching the instance was update packages and install the essentials: Python, Docker, Nginx, etc.

At this point, I had a clean Ubuntu server with the tools needed to run both my app and ZAP.

Django's built-in development server isn't meant for production traffic, so I used Gunicorn as the WSGI HTTP server. Gunicorn is lightweight and designed specifically for running Python web apps in production. It runs several worker processes of my app so that it can handle concurrent requests.

Then I put Nginx in front of Gunicorn for two reasons:

- Static files: Nginx serves static assets (CSS, JS, images) much faster than Gunicorn

- Reverse proxy: Nginx terminates HTTPS and forwards requests to Gunicorn on port 8000

So when clients hit https://openeye.chickenkiller.com:

- Nginx terminates SSL, then proxies the request to Gunicorn running Django on port 8000

- Static files are served directly by Nginx for speed

For the scanning engine, I didn't want to install ZAP directly on the host. Running it in Docker gave me isolation, portability, and easy lifecycle management (start, stop, update). So I just downloaded the image and ran it detached (-d means it stays up as a background service).

Now, my Django backend can talk to the ZAP API via http://localhost:8080.

So that's it! Not as intimidating as you thought, huh? Oh yeah, and why the name OpenEye, you ask? Well, why not? Don't you think it's the perfect name for a vulnerability scanner? OpenEye: it's literally a wide-open eye for finding vulnerabilities (whatever that means). Feel free to replicate this and add your own spice!

Tangible India - A journey through numbers

This is a submission for the KendoReact Free Components Challenge.

I built Tangible India – A journey through numbers, an interactive web app that blends facts about India with numbers in a fun and engaging way.

The idea is simple yet impactful:

- Numbers (0, 1, 2, 3, 1947, …) are the foundation of computer science and daily life.

- For each number, the app presents a fascinating fact related to India — ranging from history, culture, geography, science, to modern-day achievements.

- Memes have been added as an additional category, since a lot of knowledge and news now spreads through them.

This turns abstract digits into tangible insights, helping users learn something new about India while exploring numbers sequentially or randomly.

It’s a learning + curiosity app designed for students, educators, trivia lovers, and anyone interested in India.

🔗 Live Project: https://himanshuc3.github.io/tangible-india/

💻 Source Code: Tangible India - repository

Screenshots:

| Title/Remarks | Screenshot |

|---|---|

| Homepage (light theme) |  |

| Homepage (dark theme) |  |

| Icons & tabbed navigation |  |

I leveraged KendoReact's components as atomic components for creating the layout for Tangible India from scratch. The usage was restricted to the following components due to a lack of

- Button – Used across the whole website for actions of triggering search, random fact generation etc.

- SVG Icons (plusOutlineIcon, minusOutlineIcon, etc.) – The icon set offers a wide selection of custom icons along with the flexibility to modify their style according to the theme. Used in conjunction with buttons for accessibility and a better UX.

- Card — This acts as a fundamental building block, or a poster in layman's terms, for showing the facts linked to each number.

- Input – Used for getting user input for searching/filtering through available list of facts.

- Default Theme – Used for importing the base theme used as design system and styles for each of the imported components.

- TabStrip, TabStripTab - Useful for displaying multiple facts linked to the same number.

- AppBar, AppBarSection, AppBarSpacer – The header used in the website is using this component as a building block for layout.

- Popover – Showing keyboard shortcuts via popover

- Slider, SliderLabel – The slider is a critical component giving a bird's eye view of the progression of number based facts.

- Tooltip – Used in defining unknown behavior, like "Want to contribute?" button in header

- ChipList, Chip - Categories displayed like "historical", "cultural" etc. are leveraging Chips and Chiplist

I used an AI assistant to:

- Suggest initial draft UI layouts, so I could start from a 20-30% setup instead of 0% without constantly referencing the API docs.

- Generate the header using AppBar.

- Generate the Slider code for showing number progression.

- Explore which component best fit the required UI and UX.

- Describe the UX for selecting among different categories, find the relevant components available in the free tier of KendoReact, and settle on ChipList.

Challenges Faced using the AI Assistant

While the KendoReact AI Assistant (MCP server) does help in the scenarios described above, it still feels nascent when it comes to retaining and differentiating between contexts. As an example, consider the following sequence of prompts:

- Create the header using the AppBar component, with relevant context about the features and how the UX should look.

- Improve a Search button in the Filter component with an appropriate icon.

- Add tabbed navigation to the facts card for multiple facts linked to the same number.

MCP hallucination: although these prompts were given in separate chats and modify different components, the code suggested by the coding assistant merges them all and outputs a single component that aggregates every previous response plus the most recent suggestion.

Components like Animation are not very intuitive either. In fact, I skipped using it, since the API is time-consuming to learn and using it out of the box with the help of the examples wasn't working.

✨ With Tangible India, I wanted to show how numbers can tell stories — and how React + KendoReact makes it easy to turn that vision into reality.

Talos Kubernetes in Five Minutes

Original post: https://nabeel.dev/2025/09/28/talos-in-five

Talos Linux is an OS designed specifically for running Kubernetes.

It is locked down with no SSH access. All operations are done through a secured API.

The documentation is (understandably) catered to setting up multi-node Kubernetes clusters that are resilient to failure.

But what if you want the cheapest possible Kubernetes cluster, for testing purposes for example, where reliability isn't super important?

In this article I'll show you how to set up a simple single-node Talos cluster in less than five minutes.

By following these instructions, you can have a full Kubernetes cluster running on a single VM, without the extra costs of control planes and load balancers that cloud providers normally add onto their Kubernetes services.

The basic outline of steps to create a single-node cluster is:

- Get a Talos ISO image

- Create a blank Talos VM instance

- Update your config to allow workloads on control plane nodes

- Initialize the Talos VM and bootstrap the cluster

- Install MetalLB

- Install Envoy Gateway

Okay, this is where I cheat a little. I'm not counting the time it takes to download and upload a Talos VM image as part of the 5 minutes.

This step depends on which cloud provider (or home lab setup) you have.

The good news is that the official documentation is quite good.

Find the section that matches your setup and follow those instructions.

Essentially, you are going to be downloading a Talos Linux ISO.

If you are using a cloud provider (Azure, AWS, OCI, DigitalOcean, etc.), you will then need to upload that image so that VMs can be created from it.

I have done this on DigitalOcean and Oracle Cloud. It takes a bit of time, maybe 10-15 minutes, but it's not hard and you only need to do it once to create as many VMs as you like going forward.

Next you will need to create a Talos Linux VM (or server if you're installing on bare metal).

As with the previous section, you will need to follow the instructions based on the infrastructure you are using.

I've been most recently using DigitalOcean and automating everything with PowerShell.

For me, creating a new blank Talos VM looks like this:

doctl compute droplet create --region sfo3 --image $talosImageId --size s-2vcpu-4gb --enable-private-networking --ssh-keys $sshKeyId $vmName --wait

After creating your blank VM, DO NOT follow any other instructions from the documentation!

Specifically, do not execute any of the talosctl commands described there.

This is where we will diverge from the official documentation.

Once your VM or machine is created, make note of its IP address for the following steps.

Now we are going to initialize our Talos Kubernetes cluster.

Do this with the following commands:

talosctl gen config $vmName "https://${VM_IP}:6443" --additional-sans $VM_IP -o $CONFIG_DIR

export TALOSCONFIG="$CONFIG_DIR/talosconfig"

talosctl config endpoint $VM_IP

talosctl config node $VM_IP

This will create a directory and populate it with an auto-generated cert and some default configuration files.

Note the following:

- --additional-sans ensures that the certificate is valid for the VM's public IP address

- Set the TALOSCONFIG environment variable so you don't have to add --talosconfig mydir/talosconfig every time you use talosctl

Talos normally configures separate control plane and worker nodes.

This is good practice for production clusters, but is expensive when you just want to test or kick the tires.

Instead, we want to create a single control plane VM that will also be our worker.

To do this, edit controlplane.yaml in the Talos config directory.

Scroll to the end of the file and uncomment (remove the #) the line # allowSchedulingOnControlPlanes: true.
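If you would rather not edit the file by hand, the same change can be scripted. The following is a minimal sketch, assuming the generated controlplane.yaml contains the commented line exactly as described above; enable_cp_scheduling is a hypothetical helper name, not a talosctl feature.

```shell
#!/bin/sh
# Sketch: uncomment allowSchedulingOnControlPlanes in a Talos controlplane.yaml.
# enable_cp_scheduling is a hypothetical helper name, not part of talosctl.
enable_cp_scheduling() {
  file=$1
  tmp=$(mktemp) || return 1
  # Remove the leading "# " while keeping the line's original indentation.
  sed 's/^\([[:space:]]*\)#[[:space:]]*\(allowSchedulingOnControlPlanes:\)/\1\2/' "$file" > "$tmp" &&
    mv "$tmp" "$file"
}

# Example (assumes CONFIG_DIR is set as in the earlier commands):
# enable_cp_scheduling "$CONFIG_DIR/controlplane.yaml"
```

Preserving the indentation matters because the setting has to stay nested at the same level in the YAML once the comment marker is removed.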

Now we are ready to initialize the VM. By default, a freshly created VM waits for someone to configure it.

Once you run the apply-config command shown below, the VM is locked down to only work with the certificate that you generated with the talosctl gen config command.

Technically, there's a risk that someone could randomly beat you to configuring the VM and take ownership.

The likelihood of this happening is very low, but if it did, you would see a failure in the apply-config command, and you would simply delete the VM.

There are more secure ways to do this, specifically generating an ISO that is preconfigured to only respond to your cert.

However, that is beyond the scope of this simple tutorial.

talosctl apply-config --insecure --nodes $VM_IP --file "$CONFIG_DIR/controlplane.yaml"

Give the VM a few seconds (I wait 10) to apply the configuration, then run talosctl bootstrap.
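A fixed 10-second wait may be too short on a slow VM. One alternative is to retry the bootstrap call until it succeeds. This is only a sketch: retry is a hypothetical helper, and the attempt count and delay in the example are assumptions rather than values from the Talos documentation.

```shell
#!/bin/sh
# retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Hypothetical helper: runs COMMAND until it succeeds or ATTEMPTS is exhausted.
retry() {
  attempts=$1
  delay=$2
  shift 2
  n=1
  until "$@"; do
    if [ "$n" -ge "$attempts" ]; then
      echo "giving up after $n attempts: $*" >&2
      return 1
    fi
    n=$((n + 1))
    sleep "$delay"
  done
}

# Example (assumed values: up to 10 attempts, 3 seconds apart):
# retry 10 3 talosctl bootstrap
```

The loop stops at the first success, so bootstrap is not issued again once it has gone through.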

You can then run talosctl health or talosctl dashboard to watch the cluster come alive in real time.

At this point, your Kubernetes cluster is alive and you just need to generate the kubeconfig to use it:

talosctl kubeconfig $CONFIG_DIR

export KUBECONFIG=$CONFIG_DIR/kubeconfig

You should now be able to run commands like kubectl get pods --all-namespaces or k9s.

Have you ever created a LoadBalancer type service in AKS, EKS, etc., to create a load balancer that routes traffic to your cluster?

MetalLB will give that same functionality, but for free on your bare VM.

You can install MetalLB as the documentation prescribes with:

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.15.2/config/manifests/metallb-native.yaml

kubectl wait --timeout=5m --for=condition=available --all deployments -n metallb-system

After that, we need to configure an IPAddressPool so MetalLB is aware of the IP address we want it to use:

apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: lab-pool
  namespace: metallb-system
spec:
  addresses:
    - 192.168.1.100/32 # Replace with your VM's public IP

Replace 192.168.1.100 with the public IP address of your VM, save the YAML to metallb-ipaddresspool.yaml and then run kubectl apply -f metallb-ipaddresspool.yaml.
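If you already have the IP address in a shell variable, you can skip the manual edit and generate the manifest instead. A minimal sketch, assuming VM_IP is set as in the earlier commands; render_pool is a hypothetical helper, while the pool name and namespace are the ones used above.

```shell
#!/bin/sh
# render_pool IP: print an IPAddressPool manifest for a single address.
# render_pool is a hypothetical helper; lab-pool and metallb-system come from the article.
render_pool() {
  cat <<EOF
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: lab-pool
  namespace: metallb-system
spec:
  addresses:
    - $1/32
EOF
}

# Example (assumes VM_IP is set and kubectl points at the new cluster):
# render_pool "$VM_IP" | kubectl apply -f -
```

Piping the output straight into kubectl apply -f - avoids leaving a file behind with a hard-coded address.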

Congratulations, you now have MetalLB installed and ready to work with your Gateway Controller.

Finally, you will probably want to use the Kubernetes Gateway API to route traffic through the public IP address to services running in your cluster.

I found that Envoy Gateway was the easiest solution to achieve this.

The quick start documentation worked flawlessly, but in summary:

helm install eg oci://docker.io/envoyproxy/gateway-helm --version v1.5.1 -n envoy-gateway-system --create-namespace

kubectl wait --timeout=5m -n envoy-gateway-system deployment/envoy-gateway --for=condition=Available

You can test that everything works as it should with the following:

kubectl apply -f https://github.com/envoyproxy/gateway/releases/download/v1.5.1/quickstart.yaml -n default

curl --verbose --header "Host: www.example.com" http://$VM_IP/get

And there you have it! A simple single-node Kubernetes cluster in less than the time it took to read this article.

You can create as many as you like, tear them down, and create more when you need them.

I ended up automating all of this in a PowerShell script, and the time to run is 3-4 minutes.

This script likely won't work right out of the box for you, but it should be fairly easy to adapt it if you like:

echo "Getting VM parameters..."

$sshKey = (doctl compute ssh-key list -o json | ConvertFrom-Json | where {$_.name.Contains('dummy')}).id

$imageId = (doctl compute image list -o json | ConvertFrom-Json | where {$_.name.Contains('Talos')}).id

$timestamp = Get-Date -Format "yyyyMMdd-HHmmss"

$vmName = "kcert-test-$timestamp"

mkdir $vmName | Out-Null

echo "Creating droplet..."

$vmJson = (doctl compute droplet create --region sfo3 --image $imageId --size s-2vcpu-4gb --enable-private-networking --ssh-keys $sshKey $vmName --wait -o json)

$vm = $vmJson | ConvertFrom-Json

$vmIp = $vm[0].networks.v4 | where {$_.type -eq 'public'} | Select-Object -ExpandProperty ip_address

echo "VM created with IP address: $vmIp"

echo $vmIp > $vmName/ip.txt

echo "Initializing Talos cluster at $vmIp"

talosctl gen config $vmName "https://${vmIp}:6443" --additional-sans $vmIp -o $vmName

$env:TALOSCONFIG = (Resolve-Path "$vmName/talosconfig").Path

talosctl config endpoint $vmIp

talosctl config node $vmIp

$yaml = Get-Content -Path "${vmName}/controlplane.yaml"

$yaml = $yaml -replace '# allowSchedulingOnControlPlanes:', 'allowSchedulingOnControlPlanes:'

Set-Content -Path "${vmName}/controlplane.yaml" -Value $yaml

talosctl apply-config --insecure --nodes $vmIp --file "${vmName}/controlplane.yaml"

echo "Sleeping for 10 seconds to allow the node to initialize..."

Start-Sleep -Seconds 10

talosctl bootstrap

echo "Sleeping for 10 seconds to allow the cluster to stabilize..."

Start-Sleep -Seconds 10

talosctl health

talosctl kubeconfig $vmName

$env:KUBECONFIG = (Resolve-Path "$vmName/kubeconfig").Path

echo "Setting up MetalLB"

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.15.2/config/manifests/metallb-native.yaml

kubectl wait --timeout=5m --for=condition=available --all deployments -n metallb-system

@"
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: lab-pool
  namespace: metallb-system
spec:
  addresses:
    - $vmIp/32
"@ | kubectl apply -f -

echo "Setting up Envoy"

helm install eg oci://docker.io/envoyproxy/gateway-helm --version v1.5.1 -n envoy-gateway-system --create-namespace

kubectl wait --timeout=5m -n envoy-gateway-system deployment/envoy-gateway --for=condition=Available

echo "Here are your environment variables:"

$envVars = @(

"`$env:KUBECONFIG = '$env:KUBECONFIG'",

"`$env:TALOSCONFIG = '$env:TALOSCONFIG'",

"`$env:VMIP = '$vmIp'"

)

$envVars | ForEach-Object { echo $_ }

$envVars | Out-File -FilePath "$vmName/env.txt" -Encoding utf8

Monolithic Architecture

1 INTRODUCTION